使用混合密度網路逼近非函數映射

作者: lukewood

建立日期 2023/07/15

上次修改日期 2023/07/15

說明: 使用混合密度網路逼近非一對一映射。

逼近非函數

神經網路是通用的函數逼近器。關鍵字:函數!雖然神經網路是強大的函數逼近器,但它們無法逼近非函數。關於函數,有一個重要的限制要記住 - 它們有一個輸入,一個輸出!當訓練集對於單個 X 有多個 Y 值時,神經網路會受到很大的影響。

在本指南中,我將向您展示如何逼近非函數類別,該類別包含從 x -> y 的映射,使得給定 x 可能存在多個 y。我們將使用一種稱為「混合密度網路」的神經網路類別。

我將使用新的 多後端 Keras V3 來建構我的混合密度網路。Keras 團隊在這個專案上做得非常出色 - 能夠在一行程式碼中交換框架真是太棒了。

一些壞消息:我在本指南中使用了 TensorFlow Probability... 所以它實際上僅適用於 TensorFlow 和 JAX 後端。

總之,讓我們從安裝依賴項和整理導入開始

!pip install -q --upgrade jax tensorflow-probability[jax] keras

import os

os.environ["KERAS_BACKEND"] = "jax"

import numpy as np

import matplotlib.pyplot as plt

import keras

from keras import callbacks, layers, ops

from tensorflow_probability.substrates.jax import distributions as tfd

Using JAX backend

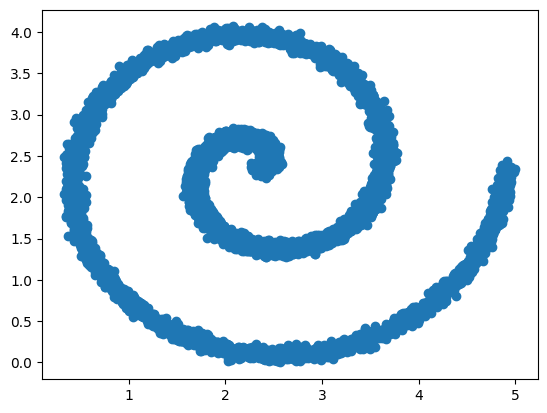

接下來,讓我們產生一個雜訊螺旋,我們將嘗試逼近它。我在下面定義了一些函數來執行此操作

def normalize(x):

return (x - np.min(x)) / (np.max(x) - np.min(x))

def create_noisy_spiral(n, jitter_std=0.2, revolutions=2):

angle = np.random.uniform(0, 2 * np.pi * revolutions, [n])

r = angle

x = r * np.cos(angle)

y = r * np.sin(angle)

result = np.stack([x, y], axis=1)

result = result + np.random.normal(scale=jitter_std, size=[n, 2])

result = 5 * normalize(result)

return result

接下來,讓我們多次調用此函數以建構樣本資料集

xy = create_noisy_spiral(10000)

x, y = xy[:, 0:1], xy[:, 1:]

plt.scatter(x, y)

plt.show()

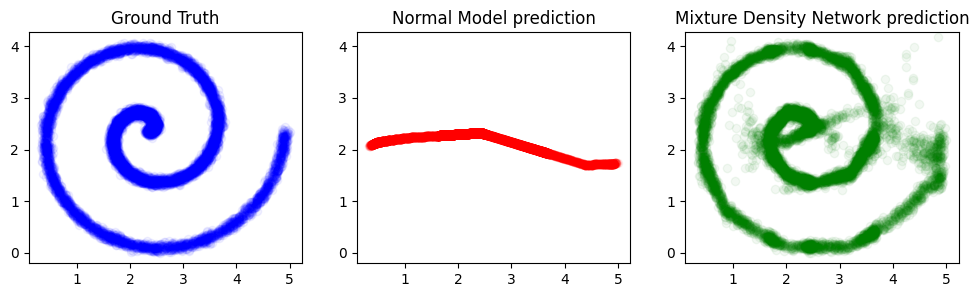

正如您所看到的,對於給定的 X,Y 有多個可能的值。普通神經網路只會學習這些點在幾何空間中的平均值。

我們可以透過一個簡單的線性模型快速展示這一點

N_HIDDEN = 128

model = keras.Sequential(

[

layers.Dense(N_HIDDEN, activation="relu"),

layers.Dense(N_HIDDEN, activation="relu"),

layers.Dense(1),

]

)

讓我們使用均方誤差以及 adam 優化器。這些往往是合理的原型選擇

model.compile(optimizer="adam", loss="mse")

我們可以非常容易地擬合這個模型

model.fit(

x,

y,

epochs=300,

batch_size=128,

validation_split=0.15,

callbacks=[callbacks.EarlyStopping(monitor="val_loss", patience=10)],

)

Epoch 1/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 3s 8ms/step - loss: 2.6971 - val_loss: 1.6366

Epoch 2/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.5672 - val_loss: 1.2341

Epoch 3/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.1751 - val_loss: 1.0113

Epoch 4/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0322 - val_loss: 1.0108

Epoch 5/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0625 - val_loss: 1.0212

Epoch 6/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0290 - val_loss: 1.0022

Epoch 7/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0469 - val_loss: 1.0033

Epoch 8/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0247 - val_loss: 1.0011

Epoch 9/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0313 - val_loss: 0.9997

Epoch 10/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0252 - val_loss: 0.9995

Epoch 11/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0369 - val_loss: 1.0015

Epoch 12/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0203 - val_loss: 0.9958

Epoch 13/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0305 - val_loss: 0.9960

Epoch 14/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0283 - val_loss: 1.0081

Epoch 15/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0331 - val_loss: 0.9943

Epoch 16/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 1.0244 - val_loss: 1.0021

Epoch 17/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0496 - val_loss: 1.0077

Epoch 18/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0367 - val_loss: 0.9940

Epoch 19/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0201 - val_loss: 0.9927

Epoch 20/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0501 - val_loss: 1.0133

Epoch 21/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0098 - val_loss: 0.9980

Epoch 22/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0195 - val_loss: 0.9907

Epoch 23/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0196 - val_loss: 0.9899

Epoch 24/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0170 - val_loss: 1.0033

Epoch 25/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0169 - val_loss: 0.9963

Epoch 26/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0141 - val_loss: 0.9895

Epoch 27/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0367 - val_loss: 0.9916

Epoch 28/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0301 - val_loss: 0.9991

Epoch 29/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0097 - val_loss: 1.0004

Epoch 30/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0415 - val_loss: 1.0062

Epoch 31/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0186 - val_loss: 0.9888

Epoch 32/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0230 - val_loss: 0.9910

Epoch 33/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0217 - val_loss: 0.9910

Epoch 34/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0180 - val_loss: 0.9945

Epoch 35/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0329 - val_loss: 0.9963

Epoch 36/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0190 - val_loss: 0.9912

Epoch 37/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0341 - val_loss: 0.9894

Epoch 38/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0100 - val_loss: 0.9920

Epoch 39/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0097 - val_loss: 0.9899

Epoch 40/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0216 - val_loss: 0.9948

Epoch 41/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0115 - val_loss: 0.9923

<keras_core.src.callbacks.history.History at 0x12e0b4dd0>

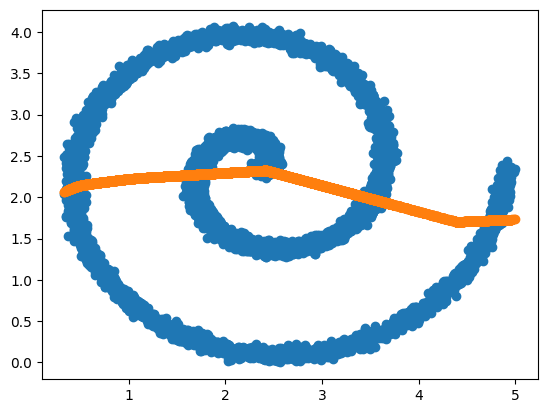

讓我們看看結果

y_pred = model.predict(x)

313/313 ━━━━━━━━━━━━━━━━━━━━ 0s 851us/step

正如預期的那樣,該模型學習了給定 x 的所有點在 y 中的幾何平均值。

plt.scatter(x, y)

plt.scatter(x, y_pred)

plt.show()

混合密度網路

混合密度網路可以緩解這個問題。混合密度是一類複雜的密度,可以用更簡單的密度表示。它們實際上是大量機率分布的總和。混合密度網路學習根據給定的訓練集參數化混合密度分布。

作為從業者,您只需要知道的是,混合密度網路解決了給定 X 對應多個 Y 值的問題。我希望為您的工具箱新增一個工具 - 但我不打算在本指南中正式解釋混合密度網路的推導。最重要的是要知道混合密度網路學習參數化混合密度分布。這是透過計算相對於提供的 y_i 標籤以及相應 x_i 的預測分布的特殊損失來完成的。此損失函數透過計算 y_i 從預測的混合分布中抽取的機率來運作。

讓我們實作一個混合密度網路。下面,根據舊的 Keras 函式庫 Keras 混合密度網路層 定義了大量輔助函數。

我已經調整了程式碼以與 Keras 核心一起使用。

讓我們開始編寫混合密度網路!首先,我們需要一個特殊的激活函數:ELU 加上一個很小的 epsilon。這有助於防止 ELU 輸出 0,這會在混合密度網路損失評估中導致 NaN。

def elu_plus_one_plus_epsilon(x):

return keras.activations.elu(x) + 1 + keras.backend.epsilon()

接下來,讓我們實際定義一個 MixtureDensity 層,該層輸出從學習到的混合分布中採樣所需的所有值

class MixtureDensityOutput(layers.Layer):

def __init__(self, output_dimension, num_mixtures, **kwargs):

super().__init__(**kwargs)

self.output_dim = output_dimension

self.num_mix = num_mixtures

self.mdn_mus = layers.Dense(

self.num_mix * self.output_dim, name="mdn_mus"

) # mix*output vals, no activation

self.mdn_sigmas = layers.Dense(

self.num_mix * self.output_dim,

activation=elu_plus_one_plus_epsilon,

name="mdn_sigmas",

) # mix*output vals exp activation

self.mdn_pi = layers.Dense(self.num_mix, name="mdn_pi") # mix vals, logits

def build(self, input_shape):

self.mdn_mus.build(input_shape)

self.mdn_sigmas.build(input_shape)

self.mdn_pi.build(input_shape)

super().build(input_shape)

@property

def trainable_weights(self):

return (

self.mdn_mus.trainable_weights

+ self.mdn_sigmas.trainable_weights

+ self.mdn_pi.trainable_weights

)

@property

def non_trainable_weights(self):

return (

self.mdn_mus.non_trainable_weights

+ self.mdn_sigmas.non_trainable_weights

+ self.mdn_pi.non_trainable_weights

)

def call(self, x, mask=None):

return layers.concatenate(

[self.mdn_mus(x), self.mdn_sigmas(x), self.mdn_pi(x)], name="mdn_outputs"

)

讓我們使用我們的新層建構一個混合密度網路

OUTPUT_DIMS = 1

N_MIXES = 20

mdn_network = keras.Sequential(

[

layers.Dense(N_HIDDEN, activation="relu"),

layers.Dense(N_HIDDEN, activation="relu"),

MixtureDensityOutput(OUTPUT_DIMS, N_MIXES),

]

)

接下來,讓我們實作一個自訂損失函數,以根據真實值和我們的預期輸出訓練混合密度網路層

def get_mixture_loss_func(output_dim, num_mixes):

def mdn_loss_func(y_true, y_pred):

# Reshape inputs in case this is used in a TimeDistributed layer

y_pred = ops.reshape(y_pred, [-1, (2 * num_mixes * output_dim) + num_mixes])

y_true = ops.reshape(y_true, [-1, output_dim])

# Split the inputs into parameters

out_mu, out_sigma, out_pi = ops.split(y_pred, 3, axis=-1)

# Construct the mixture models

cat = tfd.Categorical(logits=out_pi)

mus = ops.split(out_mu, num_mixes, axis=1)

sigs = ops.split(out_sigma, num_mixes, axis=1)

coll = [

tfd.MultivariateNormalDiag(loc=loc, scale_diag=scale)

for loc, scale in zip(mus, sigs)

]

mixture = tfd.Mixture(cat=cat, components=coll)

loss = mixture.log_prob(y_true)

loss = ops.negative(loss)

loss = ops.mean(loss)

return loss

return mdn_loss_func

mdn_network.compile(loss=get_mixture_loss_func(OUTPUT_DIMS, N_MIXES), optimizer="adam")

最後,我們可以像任何其他 Keras 模型一樣呼叫 model.fit()。

mdn_network.fit(

x,

y,

epochs=300,

batch_size=128,

validation_split=0.15,

callbacks=[

callbacks.EarlyStopping(monitor="loss", patience=10, restore_best_weights=True),

callbacks.ReduceLROnPlateau(monitor="loss", patience=5),

],

)

Epoch 1/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 20s 89ms/step - loss: 2.5088 - val_loss: 1.6384 - learning_rate: 0.0010

Epoch 2/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.5954 - val_loss: 1.4872 - learning_rate: 0.0010

Epoch 3/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.4818 - val_loss: 1.4026 - learning_rate: 0.0010

Epoch 4/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.3818 - val_loss: 1.3327 - learning_rate: 0.0010

Epoch 5/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.3478 - val_loss: 1.3034 - learning_rate: 0.0010

Epoch 6/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 1.3045 - val_loss: 1.2684 - learning_rate: 0.0010

Epoch 7/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 1.2836 - val_loss: 1.2381 - learning_rate: 0.0010

Epoch 8/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 1.2582 - val_loss: 1.2047 - learning_rate: 0.0010

Epoch 9/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.2212 - val_loss: 1.1915 - learning_rate: 0.0010

Epoch 10/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.1907 - val_loss: 1.1903 - learning_rate: 0.0010

Epoch 11/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.1456 - val_loss: 1.0221 - learning_rate: 0.0010

Epoch 12/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 1.0075 - val_loss: 0.9356 - learning_rate: 0.0010

Epoch 13/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.9413 - val_loss: 0.8409 - learning_rate: 0.0010

Epoch 14/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 0.8646 - val_loss: 0.8717 - learning_rate: 0.0010

Epoch 15/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 0.8053 - val_loss: 0.8080 - learning_rate: 0.0010

Epoch 16/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.7568 - val_loss: 0.6381 - learning_rate: 0.0010

Epoch 17/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.6638 - val_loss: 0.6175 - learning_rate: 0.0010

Epoch 18/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.5893 - val_loss: 0.5387 - learning_rate: 0.0010

Epoch 19/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.5835 - val_loss: 0.5449 - learning_rate: 0.0010

Epoch 20/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step - loss: 0.5137 - val_loss: 0.4536 - learning_rate: 0.0010

Epoch 21/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 0.4808 - val_loss: 0.4779 - learning_rate: 0.0010

Epoch 22/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.4592 - val_loss: 0.4359 - learning_rate: 0.0010

Epoch 23/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.4303 - val_loss: 0.4768 - learning_rate: 0.0010

Epoch 24/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.4505 - val_loss: 0.4084 - learning_rate: 0.0010

Epoch 25/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.4033 - val_loss: 0.3484 - learning_rate: 0.0010

Epoch 26/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.3696 - val_loss: 0.4844 - learning_rate: 0.0010

Epoch 27/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.3868 - val_loss: 0.3406 - learning_rate: 0.0010

Epoch 28/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.3214 - val_loss: 0.2739 - learning_rate: 0.0010

Epoch 29/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.3154 - val_loss: 0.3286 - learning_rate: 0.0010

Epoch 30/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.2930 - val_loss: 0.2263 - learning_rate: 0.0010

Epoch 31/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.2946 - val_loss: 0.2927 - learning_rate: 0.0010

Epoch 32/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.2739 - val_loss: 0.2026 - learning_rate: 0.0010

Epoch 33/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.2454 - val_loss: 0.2451 - learning_rate: 0.0010

Epoch 34/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.2146 - val_loss: 0.1722 - learning_rate: 0.0010

Epoch 35/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.2041 - val_loss: 0.2774 - learning_rate: 0.0010

Epoch 36/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.2020 - val_loss: 0.1257 - learning_rate: 0.0010

Epoch 37/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.1614 - val_loss: 0.1128 - learning_rate: 0.0010

Epoch 38/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.1676 - val_loss: 0.1908 - learning_rate: 0.0010

Epoch 39/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 0.1511 - val_loss: 0.1045 - learning_rate: 0.0010

Epoch 40/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: 0.1061 - val_loss: 0.1321 - learning_rate: 0.0010

Epoch 41/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.1170 - val_loss: 0.0879 - learning_rate: 0.0010

Epoch 42/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.1045 - val_loss: 0.0307 - learning_rate: 0.0010

Epoch 43/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.1066 - val_loss: 0.0637 - learning_rate: 0.0010

Epoch 44/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0960 - val_loss: 0.0304 - learning_rate: 0.0010

Epoch 45/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0747 - val_loss: 0.0211 - learning_rate: 0.0010

Epoch 46/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0733 - val_loss: -0.0155 - learning_rate: 0.0010

Epoch 47/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0339 - val_loss: 0.0079 - learning_rate: 0.0010

Epoch 48/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0597 - val_loss: 0.0223 - learning_rate: 0.0010

Epoch 49/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0370 - val_loss: 0.0549 - learning_rate: 0.0010

Epoch 50/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0343 - val_loss: 0.0031 - learning_rate: 0.0010

Epoch 51/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0132 - val_loss: -0.0304 - learning_rate: 0.0010

Epoch 52/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0326 - val_loss: 0.0584 - learning_rate: 0.0010

Epoch 53/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0512 - val_loss: -0.0166 - learning_rate: 0.0010

Epoch 54/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0210 - val_loss: -0.0433 - learning_rate: 0.0010

Epoch 55/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0261 - val_loss: 0.0317 - learning_rate: 0.0010

Epoch 56/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0185 - val_loss: -0.0210 - learning_rate: 0.0010

Epoch 57/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0021 - val_loss: -0.0218 - learning_rate: 0.0010

Epoch 58/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0100 - val_loss: -0.0488 - learning_rate: 0.0010

Epoch 59/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0126 - val_loss: -0.0504 - learning_rate: 0.0010

Epoch 60/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0278 - val_loss: -0.0622 - learning_rate: 0.0010

Epoch 61/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0180 - val_loss: -0.0756 - learning_rate: 0.0010

Epoch 62/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: -0.0198 - val_loss: -0.0427 - learning_rate: 0.0010

Epoch 63/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0129 - val_loss: -0.0483 - learning_rate: 0.0010

Epoch 64/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0221 - val_loss: -0.0379 - learning_rate: 0.0010

Epoch 65/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: -0.0177 - val_loss: -0.0626 - learning_rate: 0.0010

Epoch 66/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0045 - val_loss: -0.0148 - learning_rate: 0.0010

Epoch 67/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0045 - val_loss: -0.0570 - learning_rate: 0.0010

Epoch 68/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0304 - val_loss: -0.0062 - learning_rate: 0.0010

Epoch 69/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.0053 - val_loss: -0.0553 - learning_rate: 0.0010

Epoch 70/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0364 - val_loss: -0.1112 - learning_rate: 0.0010

Epoch 71/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0017 - val_loss: -0.0865 - learning_rate: 0.0010

Epoch 72/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0082 - val_loss: -0.1180 - learning_rate: 0.0010

Epoch 73/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0501 - val_loss: -0.1028 - learning_rate: 0.0010

Epoch 74/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0452 - val_loss: -0.0381 - learning_rate: 0.0010

Epoch 75/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0397 - val_loss: -0.0517 - learning_rate: 0.0010

Epoch 76/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0317 - val_loss: -0.1144 - learning_rate: 0.0010

Epoch 77/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0400 - val_loss: -0.1283 - learning_rate: 0.0010

Epoch 78/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0756 - val_loss: -0.0749 - learning_rate: 0.0010

Epoch 79/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0459 - val_loss: -0.1229 - learning_rate: 0.0010

Epoch 80/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0485 - val_loss: -0.0896 - learning_rate: 0.0010

Epoch 81/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: -0.0351 - val_loss: -0.1037 - learning_rate: 0.0010

Epoch 82/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0617 - val_loss: -0.0949 - learning_rate: 0.0010

Epoch 83/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0614 - val_loss: -0.1044 - learning_rate: 0.0010

Epoch 84/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0650 - val_loss: -0.1128 - learning_rate: 0.0010

Epoch 85/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0710 - val_loss: -0.1236 - learning_rate: 0.0010

Epoch 86/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0504 - val_loss: -0.0149 - learning_rate: 0.0010

Epoch 87/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0561 - val_loss: -0.1095 - learning_rate: 0.0010

Epoch 88/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0527 - val_loss: -0.0929 - learning_rate: 0.0010

Epoch 89/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0704 - val_loss: -0.1062 - learning_rate: 0.0010

Epoch 90/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.0386 - val_loss: -0.1433 - learning_rate: 0.0010

Epoch 91/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1129 - val_loss: -0.1698 - learning_rate: 1.0000e-04

Epoch 92/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1210 - val_loss: -0.1696 - learning_rate: 1.0000e-04

Epoch 93/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1315 - val_loss: -0.1663 - learning_rate: 1.0000e-04

Epoch 94/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1207 - val_loss: -0.1696 - learning_rate: 1.0000e-04

Epoch 95/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1208 - val_loss: -0.1606 - learning_rate: 1.0000e-04

Epoch 96/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1157 - val_loss: -0.1728 - learning_rate: 1.0000e-04

Epoch 97/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1367 - val_loss: -0.1691 - learning_rate: 1.0000e-04

Epoch 98/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1237 - val_loss: -0.1740 - learning_rate: 1.0000e-04

Epoch 99/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1271 - val_loss: -0.1593 - learning_rate: 1.0000e-04

Epoch 100/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1358 - val_loss: -0.1738 - learning_rate: 1.0000e-04

Epoch 101/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1260 - val_loss: -0.1669 - learning_rate: 1.0000e-04

Epoch 102/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1184 - val_loss: -0.1660 - learning_rate: 1.0000e-04

Epoch 103/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1221 - val_loss: -0.1740 - learning_rate: 1.0000e-04

Epoch 104/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1207 - val_loss: -0.1498 - learning_rate: 1.0000e-04

Epoch 105/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1210 - val_loss: -0.1695 - learning_rate: 1.0000e-04

Epoch 106/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1264 - val_loss: -0.1477 - learning_rate: 1.0000e-04

Epoch 107/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1217 - val_loss: -0.1717 - learning_rate: 1.0000e-04

Epoch 108/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1182 - val_loss: -0.1748 - learning_rate: 1.0000e-05

Epoch 109/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1394 - val_loss: -0.1757 - learning_rate: 1.0000e-05

Epoch 110/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1363 - val_loss: -0.1762 - learning_rate: 1.0000e-05

Epoch 111/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1292 - val_loss: -0.1765 - learning_rate: 1.0000e-05

Epoch 112/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1330 - val_loss: -0.1737 - learning_rate: 1.0000e-05

Epoch 113/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1341 - val_loss: -0.1769 - learning_rate: 1.0000e-05

Epoch 114/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1318 - val_loss: -0.1771 - learning_rate: 1.0000e-05

Epoch 115/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1285 - val_loss: -0.1756 - learning_rate: 1.0000e-05

Epoch 116/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1211 - val_loss: -0.1764 - learning_rate: 1.0000e-05

Epoch 117/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1434 - val_loss: -0.1755 - learning_rate: 1.0000e-05

Epoch 118/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 1s 2ms/step - loss: -0.1375 - val_loss: -0.1757 - learning_rate: 1.0000e-05

Epoch 119/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1407 - val_loss: -0.1740 - learning_rate: 1.0000e-05

Epoch 120/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1406 - val_loss: -0.1754 - learning_rate: 1.0000e-06

Epoch 121/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1258 - val_loss: -0.1761 - learning_rate: 1.0000e-06

Epoch 122/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1384 - val_loss: -0.1762 - learning_rate: 1.0000e-06

Epoch 123/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1522 - val_loss: -0.1764 - learning_rate: 1.0000e-06

Epoch 124/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1310 - val_loss: -0.1763 - learning_rate: 1.0000e-06

Epoch 125/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1434 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 126/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1329 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 127/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1392 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 128/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1300 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 129/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: -0.1347 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 130/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: -0.1200 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 131/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: -0.1415 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 132/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1270 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 133/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1329 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 134/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1265 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 135/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1329 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 136/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1429 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 137/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1394 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 138/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1315 - val_loss: -0.1763 - learning_rate: 1.0000e-07

Epoch 139/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1253 - val_loss: -0.1763 - learning_rate: 1.0000e-08

Epoch 140/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1346 - val_loss: -0.1763 - learning_rate: 1.0000e-08

Epoch 141/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1418 - val_loss: -0.1763 - learning_rate: 1.0000e-08

Epoch 142/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: -0.1279 - val_loss: -0.1763 - learning_rate: 1.0000e-08

Epoch 143/300

67/67 ━━━━━━━━━━━━━━━━━━━━ 0s 3ms/step - loss: -0.1224 - val_loss: -0.1763 - learning_rate: 1.0000e-08

<keras_core.src.callbacks.history.History at 0x148c20890>

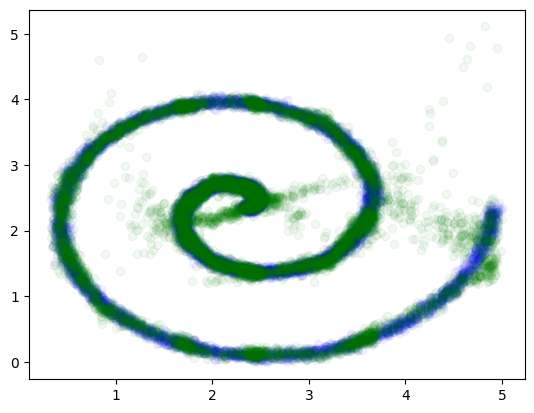

讓我們進行一些預測!

y_pred_mixture = mdn_network.predict(x)

print(y_pred_mixture.shape)

313/313 ━━━━━━━━━━━━━━━━━━━━ 0s 811us/step

(10000, 60)

MDN 不會輸出單個值;相反,它會輸出值來參數化混合分布。為了視覺化這些輸出,讓我們從分布中採樣。

請注意,採樣是一個有損過程。如果您想保留所有資訊作為更大的潛在表示的一部分(即用於下游處理),我建議您只需保留分布參數即可。

def split_mixture_params(params, output_dim, num_mixes):

mus = params[: num_mixes * output_dim]

sigs = params[num_mixes * output_dim : 2 * num_mixes * output_dim]

pi_logits = params[-num_mixes:]

return mus, sigs, pi_logits

def softmax(w, t=1.0):

e = np.array(w) / t # adjust temperature

e -= e.max() # subtract max to protect from exploding exp values.

e = np.exp(e)

dist = e / np.sum(e)

return dist

def sample_from_categorical(dist):

r = np.random.rand(1) # uniform random number in [0,1]

accumulate = 0

for i in range(0, dist.size):

accumulate += dist[i]

if accumulate >= r:

return i

print("Error sampling categorical model.")

return -1

def sample_from_output(params, output_dim, num_mixes, temp=1.0, sigma_temp=1.0):

mus, sigs, pi_logits = split_mixture_params(params, output_dim, num_mixes)

pis = softmax(pi_logits, t=temp)

m = sample_from_categorical(pis)

# Alternative way to sample from categorical:

# m = np.random.choice(range(len(pis)), p=pis)

mus_vector = mus[m * output_dim : (m + 1) * output_dim]

sig_vector = sigs[m * output_dim : (m + 1) * output_dim]

scale_matrix = np.identity(output_dim) * sig_vector # scale matrix from diag

cov_matrix = np.matmul(scale_matrix, scale_matrix.T) # cov is scale squared.

cov_matrix = cov_matrix * sigma_temp # adjust for sigma temperature

sample = np.random.multivariate_normal(mus_vector, cov_matrix, 1)

return sample

接下來,讓我們使用我們的採樣函數

# Sample from the predicted distributions

y_samples = np.apply_along_axis(

sample_from_output, 1, y_pred_mixture, 1, N_MIXES, temp=1.0

)

最後,我們可以視覺化我們的網路輸出

plt.scatter(x, y, alpha=0.05, color="blue", label="Ground Truth")

plt.scatter(

x,

y_samples[:, :, 0],

color="green",

alpha=0.05,

label="Mixture Density Network prediction",

)

plt.show()

太棒了。很高興看到它

結論

神經網路是通用的函數逼近器 - 但它們只能逼近函數。混合密度網路可以使用一些巧妙的機率技巧來逼近任意 x->y 映射。

如需更多 tensorflow_probability 範例,請從這裡開始。

再放一張漂亮的圖表

fig, axs = plt.subplots(1, 3)

fig.set_figheight(3)

fig.set_figwidth(12)

axs[0].set_title("Ground Truth")

axs[0].scatter(x, y, alpha=0.05, color="blue")

xlim = axs[0].get_xlim()

ylim = axs[0].get_ylim()

axs[1].set_title("Normal Model prediction")

axs[1].scatter(x, y_pred, alpha=0.05, color="red")

axs[1].set_xlim(xlim)

axs[1].set_ylim(ylim)

axs[2].scatter(

x,

y_samples[:, :, 0],

color="green",

alpha=0.05,

label="Mixture Density Network prediction",

)

axs[2].set_title("Mixture Density Network prediction")

axs[2].set_xlim(xlim)

axs[2].set_ylim(ylim)

plt.show()