天氣預測時間序列預測

作者: Prabhanshu Attri、Yashika Sharma、Kristi Takach、Falak Shah

建立日期 2020/06/23

上次修改日期 2023/11/22

說明: 此筆記本示範如何使用 LSTM 模型進行時間序列預測。

設定

import pandas as pd

import matplotlib.pyplot as plt

import keras

氣候資料時間序列

我們將使用馬克斯·普朗克生物地球化學研究所記錄的耶拿氣候資料集。該資料集包含 14 個特徵,例如溫度、壓力、濕度等,每 10 分鐘記錄一次。

地點:德國耶拿,馬克斯·普朗克生物地球化學研究所氣象站

考量時間範圍:2009 年 1 月 10 日 - 2016 年 12 月 31 日

下表顯示了欄位名稱、其值格式和說明。

| 索引 | 特徵 | 格式 | 說明 |

|---|---|---|---|

| 1 | 日期時間 | 01.01.2009 00:10:00 | 日期時間參考 |

| 2 | p (毫巴) | 996.52 | 帕斯卡是國際單位制導出的壓力單位,用於量化內部壓力。氣象報告通常以毫巴表示大氣壓力。 |

| 3 | T (攝氏度) | -8.02 | 攝氏溫度 |

| 4 | Tpot (K) | 265.4 | 克耳文溫度 |

| 5 | Tdew (攝氏度) | -8.9 | 相對於濕度的攝氏溫度。露點是空氣中絕對水量的量度,露點是空氣無法容納所有水分且水凝結的溫度。 |

| 6 | rh (%) | 93.3 | 相對濕度是衡量空氣中水蒸氣飽和度的指標,%RH 決定收集物體內含水量。 |

| 7 | VPmax (毫巴) | 3.33 | 飽和蒸氣壓 |

| 8 | VPact (毫巴) | 3.11 | 蒸氣壓 |

| 9 | VPdef (毫巴) | 0.22 | 蒸氣壓差 |

| 10 | sh (公克/公斤) | 1.94 | 比濕度 |

| 11 | H2OC (毫莫耳/莫耳) | 3.12 | 水蒸氣濃度 |

| 12 | rho (公克/立方公尺) | 1307.75 | 空氣密度 |

| 13 | wv (公尺/秒) | 1.03 | 風速 |

| 14 | 最大風速 (公尺/秒) | 1.75 | 最大風速 |

| 15 | wd (度) | 152.3 | 風向(度) |

from zipfile import ZipFile

uri = "https://storage.googleapis.com/tensorflow/tf-keras-datasets/jena_climate_2009_2016.csv.zip"

zip_path = keras.utils.get_file(origin=uri, fname="jena_climate_2009_2016.csv.zip")

zip_file = ZipFile(zip_path)

zip_file.extractall()

csv_path = "jena_climate_2009_2016.csv"

df = pd.read_csv(csv_path)

原始資料視覺化

為了讓我們了解正在處理的資料,下方繪製了每個特徵的圖表。這顯示了 2009 年至 2016 年期間每個特徵的獨特模式。它還顯示了異常值出現的位置,這些異常值將在正規化期間解決。

titles = [

"Pressure",

"Temperature",

"Temperature in Kelvin",

"Temperature (dew point)",

"Relative Humidity",

"Saturation vapor pressure",

"Vapor pressure",

"Vapor pressure deficit",

"Specific humidity",

"Water vapor concentration",

"Airtight",

"Wind speed",

"Maximum wind speed",

"Wind direction in degrees",

]

feature_keys = [

"p (mbar)",

"T (degC)",

"Tpot (K)",

"Tdew (degC)",

"rh (%)",

"VPmax (mbar)",

"VPact (mbar)",

"VPdef (mbar)",

"sh (g/kg)",

"H2OC (mmol/mol)",

"rho (g/m**3)",

"wv (m/s)",

"max. wv (m/s)",

"wd (deg)",

]

colors = [

"blue",

"orange",

"green",

"red",

"purple",

"brown",

"pink",

"gray",

"olive",

"cyan",

]

date_time_key = "Date Time"

def show_raw_visualization(data):

time_data = data[date_time_key]

fig, axes = plt.subplots(

nrows=7, ncols=2, figsize=(15, 20), dpi=80, facecolor="w", edgecolor="k"

)

for i in range(len(feature_keys)):

key = feature_keys[i]

c = colors[i % (len(colors))]

t_data = data[key]

t_data.index = time_data

t_data.head()

ax = t_data.plot(

ax=axes[i // 2, i % 2],

color=c,

title="{} - {}".format(titles[i], key),

rot=25,

)

ax.legend([titles[i]])

plt.tight_layout()

show_raw_visualization(df)

資料前處理

在這裡,我們選取約 300,000 個資料點進行訓練。每 10 分鐘記錄一次觀測值,這表示每小時 6 次。由於預計 60 分鐘內不會發生劇烈變化,我們將每小時重新取樣一個點。我們透過 timeseries_dataset_from_array 工具中的 sampling_rate 參數來完成此操作。

我們正在追蹤過去 720 個時間戳記(720/6=120 小時)的資料。這些資料將用於預測 72 個時間戳記(72/6=12 小時)後的溫度。

由於每個特徵的值範圍各不相同,我們在訓練神經網路之前進行正規化,將特徵值限制在 [0, 1] 範圍內。我們透過減去每個特徵的平均值並除以標準差來完成此操作。

71.5% 的資料將用於訓練模型,即 300,693 列。可以更改 split_fraction 以調整此百分比。

模型顯示前 5 天的資料,即每小時取樣的 720 個觀測值。72 個觀測值(12 小時 * 每小時 6 個觀測值)後的溫度將用作標籤。

split_fraction = 0.715

train_split = int(split_fraction * int(df.shape[0]))

step = 6

past = 720

future = 72

learning_rate = 0.001

batch_size = 256

epochs = 10

def normalize(data, train_split):

data_mean = data[:train_split].mean(axis=0)

data_std = data[:train_split].std(axis=0)

return (data - data_mean) / data_std

從相關性熱圖中可以看出,相對濕度和比濕度等少數參數是多餘的。因此,我們將使用選定的特徵,而不是全部。

print(

"The selected parameters are:",

", ".join([titles[i] for i in [0, 1, 5, 7, 8, 10, 11]]),

)

selected_features = [feature_keys[i] for i in [0, 1, 5, 7, 8, 10, 11]]

features = df[selected_features]

features.index = df[date_time_key]

features.head()

features = normalize(features.values, train_split)

features = pd.DataFrame(features)

features.head()

train_data = features.loc[0 : train_split - 1]

val_data = features.loc[train_split:]

The selected parameters are: Pressure, Temperature, Saturation vapor pressure, Vapor pressure deficit, Specific humidity, Airtight, Wind speed

訓練資料集

訓練資料集標籤從第 792 個觀測值(720 + 72)開始。

start = past + future

end = start + train_split

x_train = train_data[[i for i in range(7)]].values

y_train = features.iloc[start:end][[1]]

sequence_length = int(past / step)

timeseries_dataset_from_array 函數接收以相等間隔收集的資料點序列,以及時間序列參數,例如序列/視窗的長度、兩個序列/視窗之間的間距等,以產生從主時間序列取樣的子時間序列輸入和目標批次。

dataset_train = keras.preprocessing.timeseries_dataset_from_array(

x_train,

y_train,

sequence_length=sequence_length,

sampling_rate=step,

batch_size=batch_size,

)

驗證資料集

驗證資料集不得包含最後 792 列,因為我們沒有這些記錄的標籤資料,因此必須從資料末尾減去 792。

驗證標籤資料集必須從 train_split 後的 792 開始,因此我們必須將過去 + 未來 (792) 加到 label_start。

x_end = len(val_data) - past - future

label_start = train_split + past + future

x_val = val_data.iloc[:x_end][[i for i in range(7)]].values

y_val = features.iloc[label_start:][[1]]

dataset_val = keras.preprocessing.timeseries_dataset_from_array(

x_val,

y_val,

sequence_length=sequence_length,

sampling_rate=step,

batch_size=batch_size,

)

for batch in dataset_train.take(1):

inputs, targets = batch

print("Input shape:", inputs.numpy().shape)

print("Target shape:", targets.numpy().shape)

Input shape: (256, 120, 7)

Target shape: (256, 1)

訓練

inputs = keras.layers.Input(shape=(inputs.shape[1], inputs.shape[2]))

lstm_out = keras.layers.LSTM(32)(inputs)

outputs = keras.layers.Dense(1)(lstm_out)

model = keras.Model(inputs=inputs, outputs=outputs)

model.compile(optimizer=keras.optimizers.Adam(learning_rate=learning_rate), loss="mse")

model.summary()

CUDA backend failed to initialize: Found cuSOLVER version 11405, but JAX was built against version 11502, which is newer. The copy of cuSOLVER that is installed must be at least as new as the version against which JAX was built. (Set TF_CPP_MIN_LOG_LEVEL=0 and rerun for more info.)

Model: "functional_1"

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━┓ ┃ Layer (type) ┃ Output Shape ┃ Param # ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━┩ │ input_layer (InputLayer) │ (None, 120, 7) │ 0 │ ├─────────────────────────────────┼───────────────────────────┼────────────┤ │ lstm (LSTM) │ (None, 32) │ 5,120 │ ├─────────────────────────────────┼───────────────────────────┼────────────┤ │ dense (Dense) │ (None, 1) │ 33 │ └─────────────────────────────────┴───────────────────────────┴────────────┘

Total params: 5,153 (20.13 KB)

Trainable params: 5,153 (20.13 KB)

Non-trainable params: 0 (0.00 B)

我們將使用 ModelCheckpoint 回呼定期儲存檢查點,並使用 EarlyStopping 回呼在驗證損失不再改善時中斷訓練。

path_checkpoint = "model_checkpoint.weights.h5"

es_callback = keras.callbacks.EarlyStopping(monitor="val_loss", min_delta=0, patience=5)

modelckpt_callback = keras.callbacks.ModelCheckpoint(

monitor="val_loss",

filepath=path_checkpoint,

verbose=1,

save_weights_only=True,

save_best_only=True,

)

history = model.fit(

dataset_train,

epochs=epochs,

validation_data=dataset_val,

callbacks=[es_callback, modelckpt_callback],

)

Epoch 1/10

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 0s 70ms/step - loss: 0.3008

Epoch 1: val_loss improved from inf to 0.15039, saving model to model_checkpoint.weights.h5

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 104s 88ms/step - loss: 0.3007 - val_loss: 0.1504

Epoch 2/10

1171/1172 ━━━━━━━━━━━━━━━━━━━[37m━ 0s 66ms/step - loss: 0.1397

Epoch 2: val_loss improved from 0.15039 to 0.14231, saving model to model_checkpoint.weights.h5

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 97s 83ms/step - loss: 0.1396 - val_loss: 0.1423

Epoch 3/10

1171/1172 ━━━━━━━━━━━━━━━━━━━[37m━ 0s 69ms/step - loss: 0.1242

Epoch 3: val_loss did not improve from 0.14231

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 101s 86ms/step - loss: 0.1242 - val_loss: 0.1513

Epoch 4/10

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 0s 68ms/step - loss: 0.1182

Epoch 4: val_loss did not improve from 0.14231

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 102s 87ms/step - loss: 0.1182 - val_loss: 0.1503

Epoch 5/10

1171/1172 ━━━━━━━━━━━━━━━━━━━[37m━ 0s 67ms/step - loss: 0.1160

Epoch 5: val_loss did not improve from 0.14231

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 100s 85ms/step - loss: 0.1160 - val_loss: 0.1500

Epoch 6/10

1171/1172 ━━━━━━━━━━━━━━━━━━━[37m━ 0s 69ms/step - loss: 0.1130

Epoch 6: val_loss did not improve from 0.14231

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 100s 86ms/step - loss: 0.1130 - val_loss: 0.1469

Epoch 7/10

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 0s 70ms/step - loss: 0.1106

Epoch 7: val_loss improved from 0.14231 to 0.13916, saving model to model_checkpoint.weights.h5

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 104s 89ms/step - loss: 0.1106 - val_loss: 0.1392

Epoch 8/10

1171/1172 ━━━━━━━━━━━━━━━━━━━[37m━ 0s 66ms/step - loss: 0.1097

Epoch 8: val_loss improved from 0.13916 to 0.13257, saving model to model_checkpoint.weights.h5

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 98s 84ms/step - loss: 0.1097 - val_loss: 0.1326

Epoch 9/10

1171/1172 ━━━━━━━━━━━━━━━━━━━[37m━ 0s 68ms/step - loss: 0.1075

Epoch 9: val_loss improved from 0.13257 to 0.13057, saving model to model_checkpoint.weights.h5

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 100s 85ms/step - loss: 0.1075 - val_loss: 0.1306

Epoch 10/10

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 0s 66ms/step - loss: 0.1065

Epoch 10: val_loss improved from 0.13057 to 0.12671, saving model to model_checkpoint.weights.h5

1172/1172 ━━━━━━━━━━━━━━━━━━━━ 98s 84ms/step - loss: 0.1065 - val_loss: 0.1267

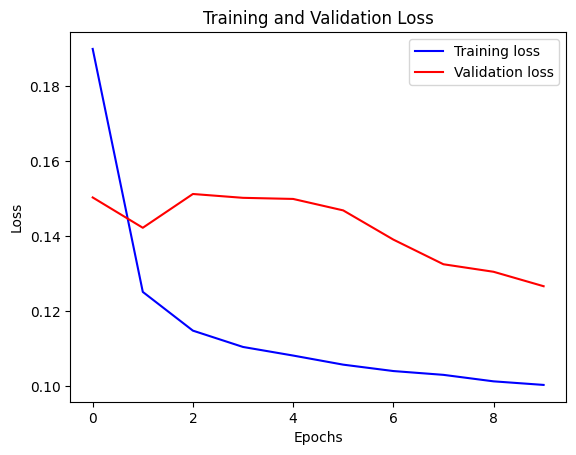

我們可以使用下面的函數視覺化損失。在某個點之後,損失停止減少。

def visualize_loss(history, title):

loss = history.history["loss"]

val_loss = history.history["val_loss"]

epochs = range(len(loss))

plt.figure()

plt.plot(epochs, loss, "b", label="Training loss")

plt.plot(epochs, val_loss, "r", label="Validation loss")

plt.title(title)

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.legend()

plt.show()

visualize_loss(history, "Training and Validation Loss")

預測

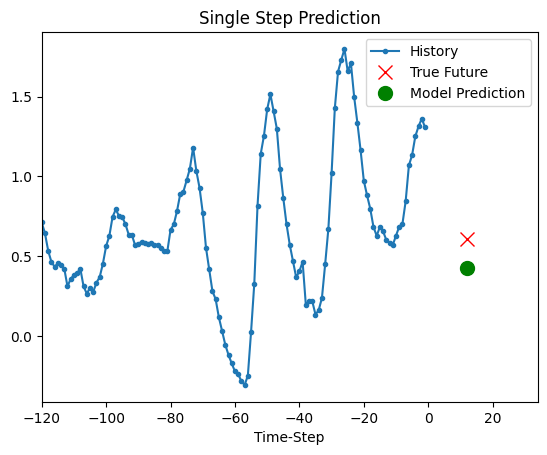

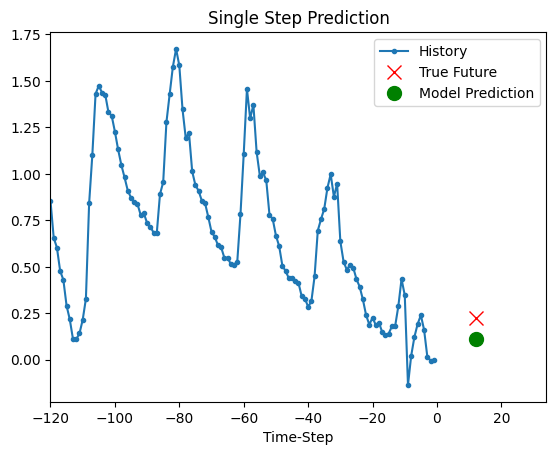

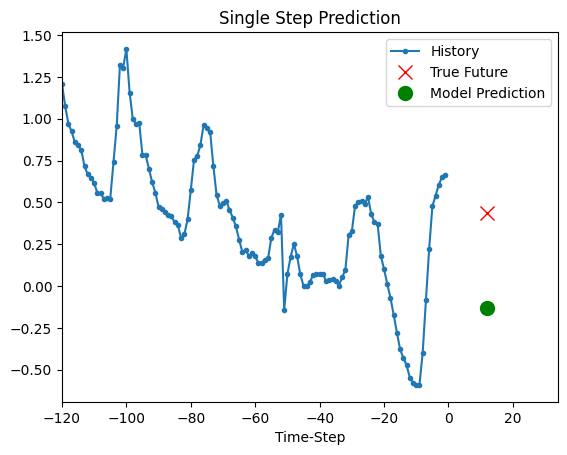

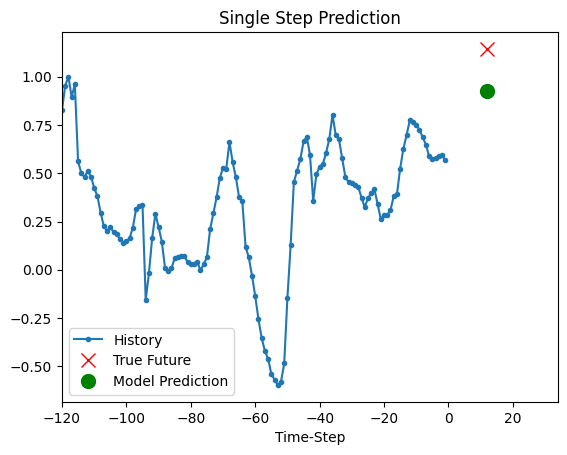

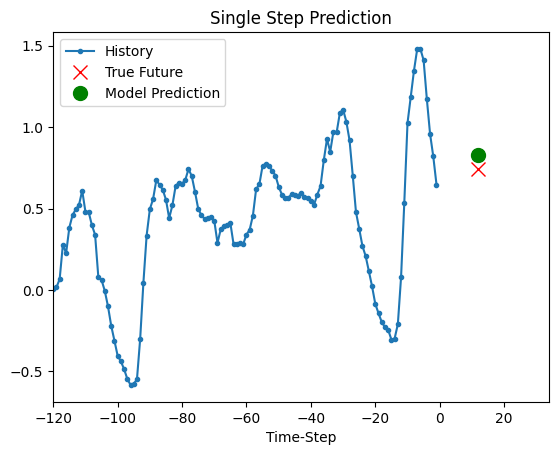

上面訓練的模型現在能夠對驗證集中的 5 組值進行預測。

def show_plot(plot_data, delta, title):

labels = ["History", "True Future", "Model Prediction"]

marker = [".-", "rx", "go"]

time_steps = list(range(-(plot_data[0].shape[0]), 0))

if delta:

future = delta

else:

future = 0

plt.title(title)

for i, val in enumerate(plot_data):

if i:

plt.plot(future, plot_data[i], marker[i], markersize=10, label=labels[i])

else:

plt.plot(time_steps, plot_data[i].flatten(), marker[i], label=labels[i])

plt.legend()

plt.xlim([time_steps[0], (future + 5) * 2])

plt.xlabel("Time-Step")

plt.show()

return

for x, y in dataset_val.take(5):

show_plot(

[x[0][:, 1].numpy(), y[0].numpy(), model.predict(x)[0]],

12,

"Single Step Prediction",

)

8/8 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step

8/8 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step

8/8 ━━━━━━━━━━━━━━━━━━━━ 0s 5ms/step

8/8 ━━━━━━━━━━━━━━━━━━━━ 0s 5ms/step

8/8 ━━━━━━━━━━━━━━━━━━━━ 0s 4ms/step