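Vector-Quantized Variational Autoencoders

Author: Sayak Paul

Date created: 2021/07/21

Last modified: 2021/06/27

Description: Training a VQ-VAE for image reconstruction and codebook sampling for generation.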

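In this example, we develop a Vector-Quantized Variational Autoencoder (VQ-VAE). VQ-VAE was proposed in Neural Discrete Representation Learning by van den Oord et al. In a standard VAE, the latent space is continuous and is sampled from a Gaussian distribution. Such a continuous distribution is generally hard to learn via gradient descent. VQ-VAEs, on the other hand, operate on a discrete latent space, which makes the optimization problem simpler. They do so by maintaining a discrete codebook. The codebook is developed by discretizing the distance between continuous embeddings and the encoded outputs. These discrete code words are then fed to the decoder, which is trained to generate reconstructed samples.

For an overview of VQ-VAEs, refer to the original paper and this video explanation. If you need a refresher on VAEs, you can refer to this book chapter. VQ-VAE is one of the main recipes behind DALL-E, and the idea of a codebook is used in VQ-GAN.

This example uses implementation details from the official VQ-VAE tutorial from DeepMind.

Requirements

To run this example, you will need TensorFlow 2.5 or higher, as well as TensorFlow Probability, which can be installed using the command below.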

!pip install -q tensorflow-probability

Imports

import numpy as np

import matplotlib.pyplot as plt

from tensorflow import keras

from tensorflow.keras import layers

import tensorflow_probability as tfp

import tensorflow as tf

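VectorQuantizer layer

First, we implement a custom layer for the vector quantizer, which sits between the encoder and the decoder. Consider an output from the encoder with shape (batch_size, height, width, num_filters). The vector quantizer first flattens this output, keeping only the num_filters dimension intact. So, the shape becomes (batch_size * height * width, num_filters). The rationale behind this is to treat the total number of filters as the size of the latent embeddings.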
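The flattening step can be sketched on its own. The snippet below is a minimal NumPy illustration rather than part of the layer; the concrete sizes (batch of 2, a 7x7 grid, 16 filters) are assumptions chosen for the example.

```python
import numpy as np

# Hypothetical encoder output: batch of 2, 7x7 spatial grid, 16 filters.
batch_size, height, width, num_filters = 2, 7, 7, 16
encoder_output = np.random.default_rng(0).normal(
    size=(batch_size, height, width, num_filters)
)

# Collapse every axis except the last one, so each row is one latent vector.
flattened = encoder_output.reshape(-1, num_filters)
print(flattened.shape)  # (98, 16)

# Reshaping back recovers the original layout exactly.
restored = flattened.reshape(encoder_output.shape)
```

Each of the 98 rows is treated as an independent vector to be quantized, and the final reshape mirrors what the layer does after quantization.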

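An embedding table is then initialized to learn a codebook. We measure the squared L2 (Euclidean) distance between the flattened encoder outputs and the code words of this codebook. We take the code that yields the minimum distance and apply one-hot encoding to achieve quantization. This way, the code closest to the corresponding encoder output is mapped to 1 and the remaining codes are mapped to 0.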
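As a rough illustration of this lookup, the NumPy sketch below computes all pairwise squared distances with the expansion ||a||^2 + ||b||^2 - 2 a.b, picks the nearest code, and one-hot encodes it. The codebook size (8 codes of dimension 4) and the random data are assumptions for the example; the codebook is stored as (embedding_dim, num_embeddings), as in the layer in this tutorial.

```python
import numpy as np

rng = np.random.default_rng(0)
num_embeddings, embedding_dim = 8, 4

# Stand-in codebook with shape (embedding_dim, num_embeddings).
codebook = rng.normal(size=(embedding_dim, num_embeddings))
flattened = rng.normal(size=(5, embedding_dim))  # five latent vectors

# Squared L2 distance for all (vector, code) pairs.
distances = (
    np.sum(flattened**2, axis=1, keepdims=True)
    + np.sum(codebook**2, axis=0)
    - 2.0 * flattened @ codebook
)

# Index of the closest code per latent vector, then its one-hot encoding.
indices = np.argmin(distances, axis=1)
one_hot = np.eye(num_embeddings)[indices]

# Multiplying by the transposed codebook selects the chosen code vectors.
quantized = one_hot @ codebook.T
```

The expansion avoids materializing a (vectors, codes, dim) tensor of differences, which matters once the batch and codebook grow.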

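Since the quantization process is not differentiable, we apply a straight-through estimator between the decoder and the encoder, so that the decoder gradients are propagated directly to the encoder. As the encoder and decoder share the same channel space, the decoder gradients are still meaningful to the encoder.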

class VectorQuantizer(layers.Layer):
    def __init__(self, num_embeddings, embedding_dim, beta=0.25, **kwargs):
        super().__init__(**kwargs)
        self.embedding_dim = embedding_dim
        self.num_embeddings = num_embeddings

        # The `beta` parameter is best kept between [0.25, 2] as per the paper.
        self.beta = beta

        # Initialize the embeddings which we will quantize.
        w_init = tf.random_uniform_initializer()
        self.embeddings = tf.Variable(
            initial_value=w_init(
                shape=(self.embedding_dim, self.num_embeddings), dtype="float32"
            ),
            trainable=True,
            name="embeddings_vqvae",
        )

    def call(self, x):
        # Calculate the input shape of the inputs and
        # then flatten the inputs keeping `embedding_dim` intact.
        input_shape = tf.shape(x)
        flattened = tf.reshape(x, [-1, self.embedding_dim])

        # Quantization.
        encoding_indices = self.get_code_indices(flattened)
        encodings = tf.one_hot(encoding_indices, self.num_embeddings)
        quantized = tf.matmul(encodings, self.embeddings, transpose_b=True)

        # Reshape the quantized values back to the original input shape
        quantized = tf.reshape(quantized, input_shape)

        # Calculate vector quantization loss and add that to the layer. You can learn more
        # about adding losses to different layers here:
        # https://keras.dev.org.tw/guides/making_new_layers_and_models_via_subclassing/. Check
        # the original paper to get a handle on the formulation of the loss function.
        commitment_loss = tf.reduce_mean((tf.stop_gradient(quantized) - x) ** 2)
        codebook_loss = tf.reduce_mean((quantized - tf.stop_gradient(x)) ** 2)
        self.add_loss(self.beta * commitment_loss + codebook_loss)

        # Straight-through estimator.
        quantized = x + tf.stop_gradient(quantized - x)
        return quantized

    def get_code_indices(self, flattened_inputs):
        # Calculate the squared L2 distance between the inputs and the codes,
        # using ||a||^2 + ||b||^2 - 2 * a.b for every (input, code) pair.
        similarity = tf.matmul(flattened_inputs, self.embeddings)
        distances = (
            tf.reduce_sum(flattened_inputs ** 2, axis=1, keepdims=True)
            + tf.reduce_sum(self.embeddings ** 2, axis=0)
            - 2 * similarity
        )

        # Derive the indices for minimum distances.
        encoding_indices = tf.argmin(distances, axis=1)
        return encoding_indices

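A note on straight-through estimation:

This line of code does the straight-through estimation part: quantized = x + tf.stop_gradient(quantized - x). During backpropagation, (quantized - x) is not included in the computation graph, and the gradients obtained for quantized are copied to x. Thanks to this video for helping me understand this technique.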
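The loss terms and the straight-through step in the layer can be summarized in equations. The notation here is an assumption introduced for this summary: z_e is the encoder output (`x` in the code), z_q is the selected code vector (`quantized` before the straight-through line), sg[.] is `tf.stop_gradient`, and the squared norms stand for the element-wise mean used in the code.

```latex
\begin{aligned}
\mathcal{L}_{\text{VQ}}
  &= \underbrace{\lVert z_q - \operatorname{sg}[z_e] \rVert_2^2}_{\text{codebook loss}}
   + \beta \, \underbrace{\lVert \operatorname{sg}[z_q] - z_e \rVert_2^2}_{\text{commitment loss}} \\[4pt]
\tilde{z} &= z_e + \operatorname{sg}[z_q - z_e]
  \quad\Longrightarrow\quad
  \tilde{z} = z_q \ \text{(forward pass)}, \qquad
  \frac{\partial \mathcal{L}_{\text{rec}}}{\partial z_e}
    = \frac{\partial \mathcal{L}_{\text{rec}}}{\partial \tilde{z}} \ \text{(backward pass)}
\end{aligned}
```

In the forward pass the decoder sees exactly the quantized vector, while in the backward pass the reconstruction gradient reaches the encoder as if quantization were the identity.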

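Encoder and decoder

Now for the encoder and the decoder of the VQ-VAE. We keep them small so that their capacity is a good fit for the MNIST dataset. The implementation of the encoder and decoder comes from this example.

Note that activations other than ReLU may not work for the encoder and decoder layers in the quantization architecture: Leaky ReLU activated layers, for example, have proven difficult to train, resulting in intermittent loss spikes that the model has trouble recovering from.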

def get_encoder(latent_dim=16):
    encoder_inputs = keras.Input(shape=(28, 28, 1))
    x = layers.Conv2D(32, 3, activation="relu", strides=2, padding="same")(
        encoder_inputs
    )
    x = layers.Conv2D(64, 3, activation="relu", strides=2, padding="same")(x)
    encoder_outputs = layers.Conv2D(latent_dim, 1, padding="same")(x)
    return keras.Model(encoder_inputs, encoder_outputs, name="encoder")


def get_decoder(latent_dim=16):
    latent_inputs = keras.Input(shape=get_encoder(latent_dim).output.shape[1:])
    x = layers.Conv2DTranspose(64, 3, activation="relu", strides=2, padding="same")(
        latent_inputs
    )
    x = layers.Conv2DTranspose(32, 3, activation="relu", strides=2, padding="same")(x)
    decoder_outputs = layers.Conv2DTranspose(1, 3, padding="same")(x)
    return keras.Model(latent_inputs, decoder_outputs, name="decoder")

Standalone VQ-VAE model

def get_vqvae(latent_dim=16, num_embeddings=64):
    vq_layer = VectorQuantizer(num_embeddings, latent_dim, name="vector_quantizer")
    encoder = get_encoder(latent_dim)
    decoder = get_decoder(latent_dim)
    inputs = keras.Input(shape=(28, 28, 1))
    encoder_outputs = encoder(inputs)
    quantized_latents = vq_layer(encoder_outputs)
    reconstructions = decoder(quantized_latents)
    return keras.Model(inputs, reconstructions, name="vq_vae")


get_vqvae().summary()

Model: "vq_vae"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_4 (InputLayer) [(None, 28, 28, 1)] 0

_________________________________________________________________

encoder (Functional) (None, 7, 7, 16) 19856

_________________________________________________________________

vector_quantizer (VectorQuan (None, 7, 7, 16) 1024

_________________________________________________________________

decoder (Functional) (None, 28, 28, 1) 28033

=================================================================

Total params: 48,913

Trainable params: 48,913

Non-trainable params: 0

_________________________________________________________________

Note that the output channels of the encoder should match the latent_dim of the vector quantizer.

Wrapping up the training loop inside VQVAETrainer

class VQVAETrainer(keras.models.Model):
    def __init__(self, train_variance, latent_dim=32, num_embeddings=128, **kwargs):
        super().__init__(**kwargs)
        self.train_variance = train_variance
        self.latent_dim = latent_dim
        self.num_embeddings = num_embeddings

        self.vqvae = get_vqvae(self.latent_dim, self.num_embeddings)

        self.total_loss_tracker = keras.metrics.Mean(name="total_loss")
        self.reconstruction_loss_tracker = keras.metrics.Mean(
            name="reconstruction_loss"
        )
        self.vq_loss_tracker = keras.metrics.Mean(name="vq_loss")

    @property
    def metrics(self):
        return [
            self.total_loss_tracker,
            self.reconstruction_loss_tracker,
            self.vq_loss_tracker,
        ]

    def train_step(self, x):
        with tf.GradientTape() as tape:
            # Outputs from the VQ-VAE.
            reconstructions = self.vqvae(x)

            # Calculate the losses.
            reconstruction_loss = (
                tf.reduce_mean((x - reconstructions) ** 2) / self.train_variance
            )
            total_loss = reconstruction_loss + sum(self.vqvae.losses)

        # Backpropagation.
        grads = tape.gradient(total_loss, self.vqvae.trainable_variables)
        self.optimizer.apply_gradients(zip(grads, self.vqvae.trainable_variables))

        # Loss tracking.
        self.total_loss_tracker.update_state(total_loss)
        self.reconstruction_loss_tracker.update_state(reconstruction_loss)
        self.vq_loss_tracker.update_state(sum(self.vqvae.losses))

        # Log results.
        return {
            "loss": self.total_loss_tracker.result(),
            "reconstruction_loss": self.reconstruction_loss_tracker.result(),
            "vqvae_loss": self.vq_loss_tracker.result(),
        }

Load and preprocess the MNIST dataset

(x_train, _), (x_test, _) = keras.datasets.mnist.load_data()

x_train = np.expand_dims(x_train, -1)

x_test = np.expand_dims(x_test, -1)

x_train_scaled = (x_train / 255.0) - 0.5

x_test_scaled = (x_test / 255.0) - 0.5

data_variance = np.var(x_train / 255.0)

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz

11493376/11490434 [==============================] - 0s 0us/step
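Note that data_variance is computed on x_train / 255.0, while the model is fed the version shifted by 0.5. The short NumPy check below, which uses random 8-bit values as a stand-in for MNIST (an assumption for illustration), shows why this is harmless: subtracting a constant does not change the variance.

```python
import numpy as np

# Stand-in for MNIST pixels: random 8-bit values (illustrative assumption).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(100, 28, 28, 1)).astype("float64")

scaled = images / 255.0   # range [0, 1]
centered = scaled - 0.5   # range [-0.5, 0.5], as fed to the model

# Shifting by a constant leaves the variance unchanged.
print(np.isclose(np.var(scaled), np.var(centered)))  # True
```

Dividing the mean squared error by this variance keeps the reconstruction loss on a scale that does not depend on how much the pixel values of the dataset spread out.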

Train the VQ-VAE model

vqvae_trainer = VQVAETrainer(data_variance, latent_dim=16, num_embeddings=128)

vqvae_trainer.compile(optimizer=keras.optimizers.Adam())

vqvae_trainer.fit(x_train_scaled, epochs=30, batch_size=128)

Epoch 1/30

469/469 [==============================] - 18s 6ms/step - loss: 2.2962 - reconstruction_loss: 0.3869 - vqvae_loss: 1.5950

Epoch 2/30

469/469 [==============================] - 3s 6ms/step - loss: 2.2980 - reconstruction_loss: 0.1692 - vqvae_loss: 2.1108

Epoch 3/30

469/469 [==============================] - 3s 6ms/step - loss: 1.1356 - reconstruction_loss: 0.1281 - vqvae_loss: 0.9997

Epoch 4/30

469/469 [==============================] - 3s 6ms/step - loss: 0.6112 - reconstruction_loss: 0.1030 - vqvae_loss: 0.5031

Epoch 5/30

469/469 [==============================] - 3s 6ms/step - loss: 0.4375 - reconstruction_loss: 0.0883 - vqvae_loss: 0.3464

Epoch 6/30

469/469 [==============================] - 3s 6ms/step - loss: 0.3579 - reconstruction_loss: 0.0788 - vqvae_loss: 0.2775

Epoch 7/30

469/469 [==============================] - 3s 5ms/step - loss: 0.3197 - reconstruction_loss: 0.0725 - vqvae_loss: 0.2457

Epoch 8/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2960 - reconstruction_loss: 0.0673 - vqvae_loss: 0.2277

Epoch 9/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2798 - reconstruction_loss: 0.0640 - vqvae_loss: 0.2152

Epoch 10/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2681 - reconstruction_loss: 0.0612 - vqvae_loss: 0.2061

Epoch 11/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2578 - reconstruction_loss: 0.0590 - vqvae_loss: 0.1986

Epoch 12/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2551 - reconstruction_loss: 0.0574 - vqvae_loss: 0.1974

Epoch 13/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2526 - reconstruction_loss: 0.0560 - vqvae_loss: 0.1961

Epoch 14/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2485 - reconstruction_loss: 0.0546 - vqvae_loss: 0.1936

Epoch 15/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2462 - reconstruction_loss: 0.0533 - vqvae_loss: 0.1926

Epoch 16/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2445 - reconstruction_loss: 0.0523 - vqvae_loss: 0.1920

Epoch 17/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2427 - reconstruction_loss: 0.0515 - vqvae_loss: 0.1911

Epoch 18/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2405 - reconstruction_loss: 0.0505 - vqvae_loss: 0.1898

Epoch 19/30

469/469 [==============================] - 3s 6ms/step - loss: 0.2368 - reconstruction_loss: 0.0495 - vqvae_loss: 0.1871

Epoch 20/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2310 - reconstruction_loss: 0.0486 - vqvae_loss: 0.1822

Epoch 21/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2245 - reconstruction_loss: 0.0475 - vqvae_loss: 0.1769

Epoch 22/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2205 - reconstruction_loss: 0.0469 - vqvae_loss: 0.1736

Epoch 23/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2195 - reconstruction_loss: 0.0465 - vqvae_loss: 0.1730

Epoch 24/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2187 - reconstruction_loss: 0.0461 - vqvae_loss: 0.1726

Epoch 25/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2180 - reconstruction_loss: 0.0458 - vqvae_loss: 0.1721

Epoch 26/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2163 - reconstruction_loss: 0.0454 - vqvae_loss: 0.1709

Epoch 27/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2156 - reconstruction_loss: 0.0452 - vqvae_loss: 0.1704

Epoch 28/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2146 - reconstruction_loss: 0.0449 - vqvae_loss: 0.1696

Epoch 29/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2139 - reconstruction_loss: 0.0447 - vqvae_loss: 0.1692

Epoch 30/30

469/469 [==============================] - 3s 5ms/step - loss: 0.2127 - reconstruction_loss: 0.0444 - vqvae_loss: 0.1682

<tensorflow.python.keras.callbacks.History at 0x7f96402f4e50>

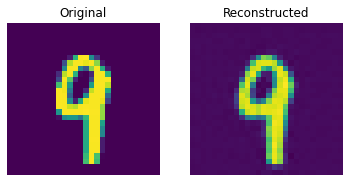

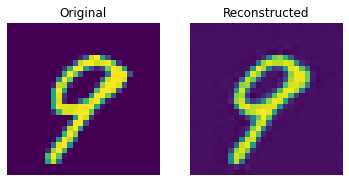

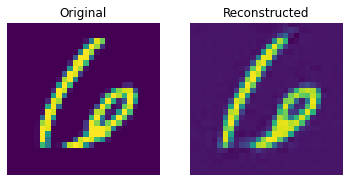

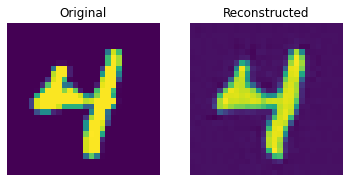

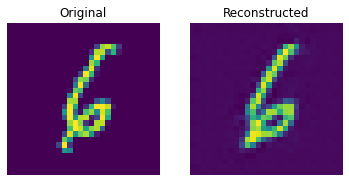

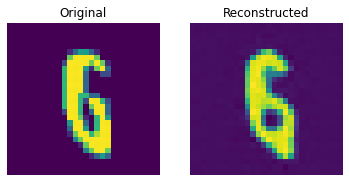

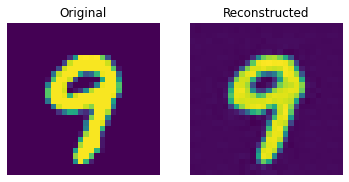

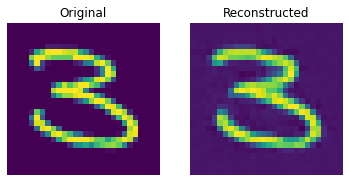

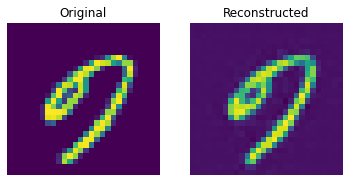

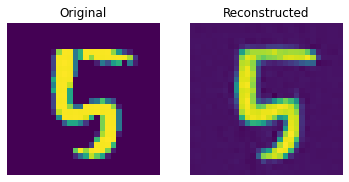

Reconstruction results on the test set

def show_subplot(original, reconstructed):
    plt.subplot(1, 2, 1)
    plt.imshow(original.squeeze() + 0.5)
    plt.title("Original")
    plt.axis("off")

    plt.subplot(1, 2, 2)
    plt.imshow(reconstructed.squeeze() + 0.5)
    plt.title("Reconstructed")
    plt.axis("off")

    plt.show()


trained_vqvae_model = vqvae_trainer.vqvae
idx = np.random.choice(len(x_test_scaled), 10)
test_images = x_test_scaled[idx]
reconstructions_test = trained_vqvae_model.predict(test_images)

for test_image, reconstructed_image in zip(test_images, reconstructions_test):
    show_subplot(test_image, reconstructed_image)

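These results look decent. You are encouraged to play with different hyperparameters (especially the number of embeddings and the dimensions of the embeddings) and observe how they affect the results.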

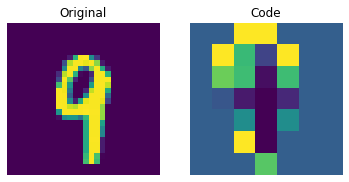

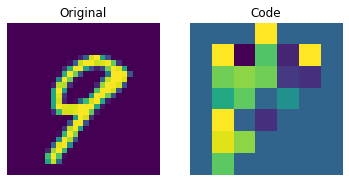

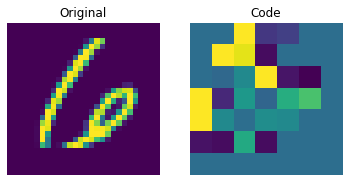

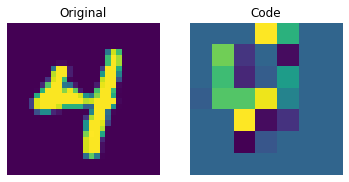

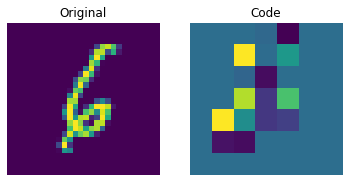

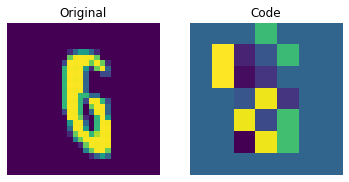

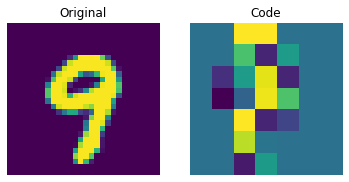

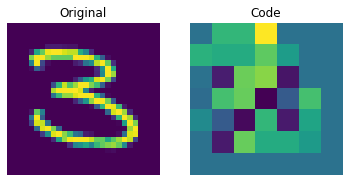

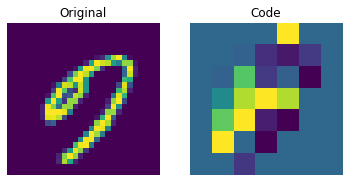

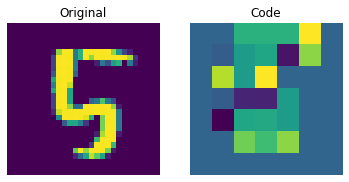

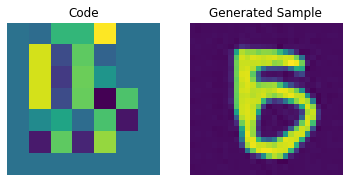

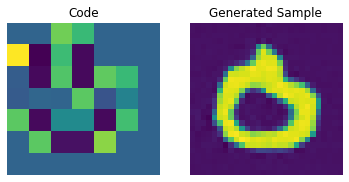

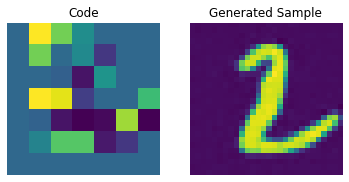

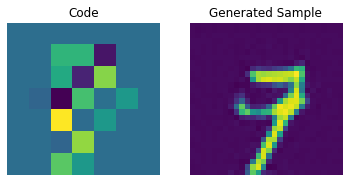

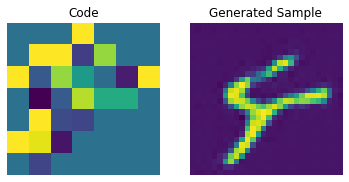

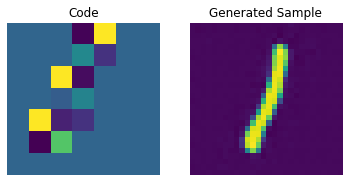

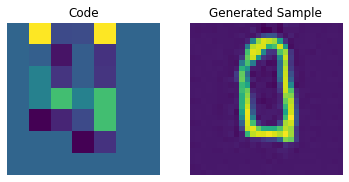

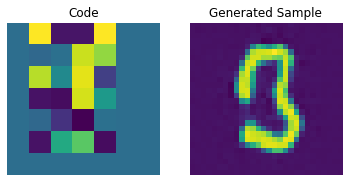

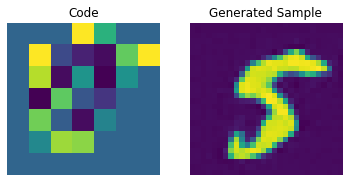

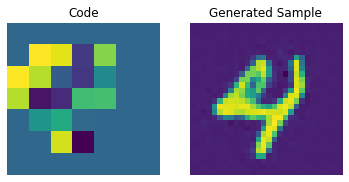

Visualizing the discrete codes

encoder = vqvae_trainer.vqvae.get_layer("encoder")

quantizer = vqvae_trainer.vqvae.get_layer("vector_quantizer")

encoded_outputs = encoder.predict(test_images)

flat_enc_outputs = encoded_outputs.reshape(-1, encoded_outputs.shape[-1])

codebook_indices = quantizer.get_code_indices(flat_enc_outputs)

codebook_indices = codebook_indices.numpy().reshape(encoded_outputs.shape[:-1])

for i in range(len(test_images)):
    plt.subplot(1, 2, 1)
    plt.imshow(test_images[i].squeeze() + 0.5)
    plt.title("Original")
    plt.axis("off")

    plt.subplot(1, 2, 2)
    plt.imshow(codebook_indices[i])
    plt.title("Code")
    plt.axis("off")
    plt.show()

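The figure above shows that the discrete codes have been able to capture some regularities from the dataset. Now, how do we sample from this codebook to create novel images? Since these codes are discrete and we imposed a categorical distribution on them, we cannot use them yet to generate anything meaningful until we can generate likely sequences of codes that we can give to the decoder. The authors train a PixelCNN on these codes so that it can serve as a powerful prior for generating novel examples. PixelCNN was proposed in Conditional Image Generation with PixelCNN Decoders by van den Oord et al. We borrow the implementation from this PixelCNN example. It is an autoregressive generative model where the outputs are conditioned on the prior ones. In other words, a PixelCNN generates an image on a pixel-by-pixel basis. For the purpose of this example, however, its task is to generate codebook indices instead of pixels directly. The trained VQ-VAE decoder is used to map the indices generated by the PixelCNN back into pixel space.

PixelCNN hyperparameters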

num_residual_blocks = 2

num_pixelcnn_layers = 2

pixelcnn_input_shape = encoded_outputs.shape[1:-1]

print(f"Input shape of the PixelCNN: {pixelcnn_input_shape}")

Input shape of the PixelCNN: (7, 7)

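This input shape represents the reduction in resolution performed by the encoder. With "same" padding, each stride-2 convolutional layer exactly halves the "resolution" of its output shape. So, with these two layers, we end up with an encoder output tensor of 7x7 on axes 2 and 3 (counting from 1), with the first axis as the batch size and the last axis being the codebook embedding size. Since the quantization layer in the autoencoder maps these 7x7 tensors to indices of the codebook, these output layer axis sizes must be matched by the PixelCNN as the input shape. The task of the PixelCNN for this architecture is to generate likely 7x7 arrangements of codebook indices.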
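The resolution arithmetic can be checked with a few lines of plain Python. The helper name below is hypothetical; it encodes the rule that a convolution with "same" padding produces ceil(input_size / stride) outputs along each spatial axis.

```python
import math


def same_padding_output_size(input_size: int, stride: int) -> int:
    """Spatial output size of a convolution with padding="same"."""
    return math.ceil(input_size / stride)


size = 28
for _ in range(2):  # the two stride-2 layers in the encoder
    size = same_padding_output_size(size, stride=2)
print(size)  # 7
```

For input sizes that are not divisible by the stride, the result is rounded up, so the halving is only exact for even sizes such as 28 and 14.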

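Note that this shape is something to optimize for in larger-sized image domains, along with the codebook size. Since a PixelCNN is autoregressive, it needs to pass over each codebook index sequentially in order to generate novel images from the codebook. Each stride-2 (or, more precisely, stride (2, 2)) convolutional layer divides the image generation time by four. Note, however, that there is probably a lower bound on this: when the number of codes for the image to reconstruct is too small, the decoder has insufficient information to represent the level of detail in the image, so the output quality suffers. This can be amended, at least to some extent, by using a larger codebook. Since the autoregressive part of the image generation procedure uses codebook indices, there is far less of a performance penalty in using a larger codebook than in iterating over a longer sequence of codebook indices, because looking up a code in a larger codebook takes much less time, although the size of the codebook does impact the batch size that can pass through the image generation procedure. Finding the sweet spot for this trade-off can require some architecture tweaking and could very well differ per dataset.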
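To put rough numbers on this trade-off, the sketch below counts the sequential sampling steps for a square grid of codes and an upper bound on the bits such a grid can encode (steps times log2 of the codebook size). The function name and the bit-count framing are assumptions added for illustration, not part of the original example.

```python
import math


def sampling_cost(grid_size: int, num_embeddings: int):
    """Return (sequential sampling steps, upper bound on bits in the grid)."""
    steps = grid_size * grid_size
    bits = steps * math.log2(num_embeddings)
    return steps, bits


print(sampling_cost(14, 128))  # (196, 1372.0)
print(sampling_cost(7, 128))   # (49, 343.0)
print(sampling_cost(7, 512))   # (49, 441.0)
```

Going from a 14x14 to a 7x7 grid cuts the number of steps by four, while quadrupling the codebook at 7x7 adds capacity without adding any sampling steps.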

PixelCNN model

Most of the code comes from this example.

Notes

Thanks to Rein van 't Veer for improving this example with copyedits and minor code clean-ups.

# The first layer is the PixelCNN layer. This layer simply
# builds on the 2D convolutional layer, but includes masking.
class PixelConvLayer(layers.Layer):
    def __init__(self, mask_type, **kwargs):
        super().__init__()
        self.mask_type = mask_type
        self.conv = layers.Conv2D(**kwargs)

    def build(self, input_shape):
        # Build the conv2d layer to initialize kernel variables
        self.conv.build(input_shape)
        # Use the initialized kernel to create the mask
        kernel_shape = self.conv.kernel.get_shape()
        self.mask = np.zeros(shape=kernel_shape)
        self.mask[: kernel_shape[0] // 2, ...] = 1.0
        self.mask[kernel_shape[0] // 2, : kernel_shape[1] // 2, ...] = 1.0
        if self.mask_type == "B":
            self.mask[kernel_shape[0] // 2, kernel_shape[1] // 2, ...] = 1.0

    def call(self, inputs):
        self.conv.kernel.assign(self.conv.kernel * self.mask)
        return self.conv(inputs)


# Next, we build our residual block layer.
# This is just a normal residual block, but based on the PixelConvLayer.
class ResidualBlock(keras.layers.Layer):
    def __init__(self, filters, **kwargs):
        super().__init__(**kwargs)
        self.conv1 = keras.layers.Conv2D(
            filters=filters, kernel_size=1, activation="relu"
        )
        self.pixel_conv = PixelConvLayer(
            mask_type="B",
            filters=filters // 2,
            kernel_size=3,
            activation="relu",
            padding="same",
        )
        self.conv2 = keras.layers.Conv2D(
            filters=filters, kernel_size=1, activation="relu"
        )

    def call(self, inputs):
        x = self.conv1(inputs)
        x = self.pixel_conv(x)
        x = self.conv2(x)
        return keras.layers.add([inputs, x])


pixelcnn_inputs = keras.Input(shape=pixelcnn_input_shape, dtype=tf.int32)
ohe = tf.one_hot(pixelcnn_inputs, vqvae_trainer.num_embeddings)
x = PixelConvLayer(
    mask_type="A", filters=128, kernel_size=7, activation="relu", padding="same"
)(ohe)

for _ in range(num_residual_blocks):
    x = ResidualBlock(filters=128)(x)

for _ in range(num_pixelcnn_layers):
    x = PixelConvLayer(
        mask_type="B",
        filters=128,
        kernel_size=1,
        strides=1,
        activation="relu",
        padding="valid",
    )(x)

out = keras.layers.Conv2D(
    filters=vqvae_trainer.num_embeddings, kernel_size=1, strides=1, padding="valid"
)(x)

pixel_cnn = keras.Model(pixelcnn_inputs, out, name="pixel_cnn")
pixel_cnn.summary()

Model: "pixel_cnn"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_9 (InputLayer) [(None, 7, 7)] 0

_________________________________________________________________

tf.one_hot (TFOpLambda) (None, 7, 7, 128) 0

_________________________________________________________________

pixel_conv_layer (PixelConvL (None, 7, 7, 128) 802944

_________________________________________________________________

residual_block (ResidualBloc (None, 7, 7, 128) 98624

_________________________________________________________________

residual_block_1 (ResidualBl (None, 7, 7, 128) 98624

_________________________________________________________________

pixel_conv_layer_3 (PixelCon (None, 7, 7, 128) 16512

_________________________________________________________________

pixel_conv_layer_4 (PixelCon (None, 7, 7, 128) 16512

_________________________________________________________________

conv2d_21 (Conv2D) (None, 7, 7, 128) 16512

=================================================================

Total params: 1,049,728

Trainable params: 1,049,728

Non-trainable params: 0

_________________________________________________________________
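To make the two mask types used by PixelConvLayer concrete, the NumPy sketch below rebuilds only the spatial part of the mask for a small kernel. The helper name build_mask is hypothetical; the rule follows the build method of the layer, reduced to two dimensions.

```python
import numpy as np


def build_mask(kernel_size: int, mask_type: str) -> np.ndarray:
    """2D causal mask following the rule used in PixelConvLayer.build."""
    mask = np.zeros((kernel_size, kernel_size))
    center = kernel_size // 2
    mask[:center, :] = 1.0          # all rows above the centre
    mask[center, :center] = 1.0     # positions left of the centre
    if mask_type == "B":
        mask[center, center] = 1.0  # type B also sees the centre itself
    return mask


print(build_mask(3, "A"))
print(build_mask(3, "B"))
```

Type A hides the current position and is used for the first layer, so a prediction never sees the index it is predicting; type B may include the centre because deeper layers only carry information from earlier positions.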

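Prepare data to train the PixelCNN

We will train the PixelCNN to learn a categorical distribution of the discrete codes. First, we will generate code indices using the encoder and vector quantizer we just trained. Our training objective will be to minimize the cross-entropy loss between these indices and the PixelCNN outputs. Here, the number of categories is equal to the number of embeddings present in our codebook (128 in our case). The PixelCNN model is trained to learn a distribution (as opposed to minimizing the L1/L2 loss), which is where it gets its generative capabilities from.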
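The objective can be sketched in NumPy as a cross-entropy between integer code indices and unnormalized logits. This is an illustrative re-implementation under the assumption of a 128-code codebook and a 7x7 grid, not the Keras loss itself; the function name is chosen for the example.

```python
import numpy as np


def sparse_categorical_crossentropy(logits, targets):
    """Mean cross-entropy between integer targets and unnormalised logits."""
    # Log-softmax over the last axis, shifted for numerical stability.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Pick the log-probability assigned to each target index.
    picked = np.take_along_axis(log_probs, targets[..., None], axis=-1)
    return -picked.mean()


rng = np.random.default_rng(0)
targets = rng.integers(0, 128, size=(2, 7, 7))  # stand-in code indices
uniform_logits = np.zeros((2, 7, 7, 128))       # an uninformed guess
loss = sparse_categorical_crossentropy(uniform_logits, targets)
print(loss)  # ln(128), roughly 4.852
```

A model that spreads probability uniformly over all 128 codes scores ln(128), which gives a reference point for reading the loss values in the training log.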

# Generate the codebook indices.

encoded_outputs = encoder.predict(x_train_scaled)

flat_enc_outputs = encoded_outputs.reshape(-1, encoded_outputs.shape[-1])

codebook_indices = quantizer.get_code_indices(flat_enc_outputs)

codebook_indices = codebook_indices.numpy().reshape(encoded_outputs.shape[:-1])

print(f"Shape of the training data for PixelCNN: {codebook_indices.shape}")

Shape of the training data for PixelCNN: (60000, 7, 7)

PixelCNN training

pixel_cnn.compile(
    optimizer=keras.optimizers.Adam(3e-4),
    loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)
pixel_cnn.fit(
    x=codebook_indices,
    y=codebook_indices,
    batch_size=128,
    epochs=30,
    validation_split=0.1,
)

Epoch 1/30

422/422 [==============================] - 4s 8ms/step - loss: 1.8550 - accuracy: 0.5959 - val_loss: 1.3127 - val_accuracy: 0.6268

Epoch 2/30

422/422 [==============================] - 3s 7ms/step - loss: 1.2207 - accuracy: 0.6402 - val_loss: 1.1722 - val_accuracy: 0.6482

Epoch 3/30

422/422 [==============================] - 3s 7ms/step - loss: 1.1412 - accuracy: 0.6536 - val_loss: 1.1313 - val_accuracy: 0.6552

Epoch 4/30

422/422 [==============================] - 3s 7ms/step - loss: 1.1060 - accuracy: 0.6601 - val_loss: 1.1058 - val_accuracy: 0.6596

Epoch 5/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0828 - accuracy: 0.6646 - val_loss: 1.1020 - val_accuracy: 0.6603

Epoch 6/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0649 - accuracy: 0.6682 - val_loss: 1.0809 - val_accuracy: 0.6638

Epoch 7/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0515 - accuracy: 0.6710 - val_loss: 1.0712 - val_accuracy: 0.6659

Epoch 8/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0406 - accuracy: 0.6733 - val_loss: 1.0647 - val_accuracy: 0.6671

Epoch 9/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0312 - accuracy: 0.6752 - val_loss: 1.0633 - val_accuracy: 0.6674

Epoch 10/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0235 - accuracy: 0.6771 - val_loss: 1.0554 - val_accuracy: 0.6695

Epoch 11/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0162 - accuracy: 0.6788 - val_loss: 1.0518 - val_accuracy: 0.6694

Epoch 12/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0105 - accuracy: 0.6799 - val_loss: 1.0541 - val_accuracy: 0.6693

Epoch 13/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0050 - accuracy: 0.6811 - val_loss: 1.0481 - val_accuracy: 0.6705

Epoch 14/30

422/422 [==============================] - 3s 7ms/step - loss: 1.0011 - accuracy: 0.6820 - val_loss: 1.0462 - val_accuracy: 0.6709

Epoch 15/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9964 - accuracy: 0.6831 - val_loss: 1.0459 - val_accuracy: 0.6709

Epoch 16/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9922 - accuracy: 0.6840 - val_loss: 1.0444 - val_accuracy: 0.6704

Epoch 17/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9884 - accuracy: 0.6848 - val_loss: 1.0405 - val_accuracy: 0.6725

Epoch 18/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9846 - accuracy: 0.6859 - val_loss: 1.0400 - val_accuracy: 0.6722

Epoch 19/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9822 - accuracy: 0.6864 - val_loss: 1.0394 - val_accuracy: 0.6728

Epoch 20/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9787 - accuracy: 0.6872 - val_loss: 1.0393 - val_accuracy: 0.6717

Epoch 21/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9761 - accuracy: 0.6878 - val_loss: 1.0398 - val_accuracy: 0.6725

Epoch 22/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9733 - accuracy: 0.6884 - val_loss: 1.0376 - val_accuracy: 0.6726

Epoch 23/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9708 - accuracy: 0.6890 - val_loss: 1.0352 - val_accuracy: 0.6732

Epoch 24/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9685 - accuracy: 0.6894 - val_loss: 1.0369 - val_accuracy: 0.6723

Epoch 25/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9660 - accuracy: 0.6901 - val_loss: 1.0384 - val_accuracy: 0.6733

Epoch 26/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9638 - accuracy: 0.6908 - val_loss: 1.0355 - val_accuracy: 0.6728

Epoch 27/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9619 - accuracy: 0.6912 - val_loss: 1.0325 - val_accuracy: 0.6739

Epoch 28/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9594 - accuracy: 0.6917 - val_loss: 1.0334 - val_accuracy: 0.6736

Epoch 29/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9582 - accuracy: 0.6920 - val_loss: 1.0366 - val_accuracy: 0.6733

Epoch 30/30

422/422 [==============================] - 3s 7ms/step - loss: 0.9561 - accuracy: 0.6926 - val_loss: 1.0336 - val_accuracy: 0.6728

<tensorflow.python.keras.callbacks.History at 0x7f95838ef750>

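We can improve these scores with more training and hyperparameter tuning.

Codebook sampling

Now that our PixelCNN is trained, we can sample distinct codes from its outputs and pass them to our decoder to generate novel images.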

# Create a mini sampler model.

inputs = layers.Input(shape=pixel_cnn.input_shape[1:])

outputs = pixel_cnn(inputs, training=False)

categorical_layer = tfp.layers.DistributionLambda(tfp.distributions.Categorical)

outputs = categorical_layer(outputs)

sampler = keras.Model(inputs, outputs)

We now construct a prior to generate images. Here, we will generate 10 images.

# Create an empty array of priors.
batch = 10
priors = np.zeros(shape=(batch,) + (pixel_cnn.input_shape)[1:])
batch, rows, cols = priors.shape

# Iterate over the priors because generation has to be done sequentially,
# one codebook index at a time.
for row in range(rows):
    for col in range(cols):
        # Feed the whole array and retrieve a sampled codebook index for every
        # position. The sampler ends in a categorical distribution layer, so
        # `probs` holds sampled indices rather than probabilities.
        probs = sampler.predict(priors)
        # Keep only the sample for the current position and write it into the priors.
        priors[:, row, col] = probs[:, row, col]

print(f"Prior shape: {priors.shape}")

Prior shape: (10, 7, 7)
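The same sequential pattern can be tried without a trained model. In the NumPy sketch below, toy_logits is a hypothetical stand-in for the PixelCNN, and sampling uses the Gumbel-max trick in place of the TensorFlow Probability categorical layer; both are assumptions made so the loop runs on its own.

```python
import numpy as np

rng = np.random.default_rng(0)
num_codes, rows, cols, batch = 8, 3, 3, 4


def toy_logits(grid):
    """Stand-in for a trained PixelCNN: returns logits per position."""
    # Hypothetical model: ignores its input and prefers low indices.
    base = -np.arange(num_codes, dtype="float64")
    return np.broadcast_to(base, grid.shape + (num_codes,))


def sample_categorical(logits):
    """Draw one index per row of logits using the Gumbel-max trick."""
    return np.argmax(logits + rng.gumbel(size=logits.shape), axis=-1)


grid = np.zeros((batch, rows, cols), dtype="int64")
for row in range(rows):
    for col in range(cols):
        logits = toy_logits(grid)  # full forward pass at every step
        grid[:, row, col] = sample_categorical(logits[:, row, col])

print(grid.shape)  # (4, 3, 3)
```

Every step runs the model on the whole grid but keeps a single position, which is why the number of forward passes equals the number of codes per image.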

We can now use our decoder to generate the images.

# Perform an embedding lookup.
pretrained_embeddings = quantizer.embeddings
priors_ohe = tf.one_hot(priors.astype("int32"), vqvae_trainer.num_embeddings).numpy()
quantized = tf.matmul(
    priors_ohe.astype("float32"), pretrained_embeddings, transpose_b=True
)
quantized = tf.reshape(quantized, (-1, *(encoded_outputs.shape[1:])))

# Generate novel images.
decoder = vqvae_trainer.vqvae.get_layer("decoder")
generated_samples = decoder.predict(quantized)

for i in range(batch):
    plt.subplot(1, 2, 1)
    plt.imshow(priors[i])
    plt.title("Code")
    plt.axis("off")

    plt.subplot(1, 2, 2)
    plt.imshow(generated_samples[i].squeeze() + 0.5)
    plt.title("Generated Sample")
    plt.axis("off")
    plt.show()
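The embedding lookup above multiplies one-hot vectors by the transposed codebook. As a sanity check, the NumPy sketch below shows that this is equivalent to indexing the codebook directly; the random codebook and indices are stand-ins assumed for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
embedding_dim, num_embeddings = 16, 128
embeddings = rng.normal(size=(embedding_dim, num_embeddings))  # stand-in codebook
indices = rng.integers(0, num_embeddings, size=(10, 7, 7))

# One-hot route, mirroring the matmul with transpose_b=True.
one_hot = np.eye(num_embeddings)[indices]  # (10, 7, 7, 128)
via_matmul = one_hot @ embeddings.T         # (10, 7, 7, 16)

# Direct gather route: index rows of the transposed codebook.
via_gather = embeddings.T[indices]          # (10, 7, 7, 16)

print(np.allclose(via_matmul, via_gather))  # True
```

The gather form avoids building the one-hot tensor, which can matter for large codebooks; the one-hot form is kept in the tutorial because it matches the quantizer layer.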

We can enhance the quality of these generated samples by tweaking the PixelCNN.