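WGAN-GP with R-GCN for the generation of small molecular graphs

Author: akensert

Date created: 2021/06/30

Last modified: 2021/06/30

Description: Complete implementation of WGAN-GP with R-GCN to generate novel molecules.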

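Introduction

In this tutorial, we implement a generative model for graphs and use it to generate novel molecules.

Motivation: Developing new drugs (molecules) can be extremely time-consuming and costly. Deep learning models can ease the search for good drug candidates by predicting properties of known molecules (e.g., solubility, toxicity, affinity to a target protein). Because the number of possible molecules is astronomical, the space we actually search or explore is only a tiny fraction of the entire space. It is therefore desirable to implement generative models that can learn to generate novel molecules that would otherwise never have been explored.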

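References (implementation)

The implementation in this tutorial is based on, and inspired by, the MolGAN paper and DeepChem's Basic MolGAN.

Further reading (generative models)

Recent implementations of generative models for molecular graphs also include Mol-CycleGAN, GraphVAE and JT-VAE. For more information on generative adversarial networks, see GAN, WGAN and WGAN-GP.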

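Setup

Install RDKit

RDKit is a collection of cheminformatics and machine-learning software written in C++ and Python. In this tutorial, RDKit is used to conveniently and efficiently convert SMILES into molecule objects, and then to obtain the sets of atoms and bonds from those objects.

SMILES expresses the structure of a given molecule as an ASCII string. A SMILES string is a compact encoding that, for smaller molecules, is relatively human-readable. Encoding molecules as strings makes it easier to search for a given molecule in databases and/or on the web. RDKit uses algorithms to accurately convert a given SMILES into a molecule object, which can then be used to compute a large number of molecular properties/features.

Note that RDKit is commonly installed via Conda. However, thanks to rdkit_platform_wheels, rdkit can now (for the sake of this tutorial) be installed easily via pip, as follows:

```shell
pip -q install rdkit-pypi
```

(Newer RDKit releases are published on PyPI under the package name `rdkit`; `rdkit-pypi` is the older name.)

To facilitate visualization of molecule objects, Pillow also needs to be installed:

```shell
pip -q install Pillow
```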

Import packages

```python
from rdkit import Chem, RDLogger
from rdkit.Chem.Draw import IPythonConsole, MolsToGridImage
import numpy as np
import tensorflow as tf
from tensorflow import keras

RDLogger.DisableLog("rdApp.*")
```

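Dataset

The dataset used in this tutorial is a quantum mechanics dataset (QM9), obtained from MoleculeNet. Although the dataset comes with many feature and label columns, we will only focus on the SMILES column. QM9 is a good first dataset for graph generation because the maximum number of heavy (non-hydrogen) atoms in any of its molecules is only nine.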

csv_path = tf.keras.utils.get_file(

"qm9.csv", "https://deepchemdata.s3-us-west-1.amazonaws.com/datasets/qm9.csv"

)

data = []

with open(csv_path, "r") as f:

for line in f.readlines()[1:]:

data.append(line.split(",")[1])

# Let's look at a molecule of the dataset

smiles = data[1000]

print("SMILES:", smiles)

molecule = Chem.MolFromSmiles(smiles)

print("Num heavy atoms:", molecule.GetNumHeavyAtoms())

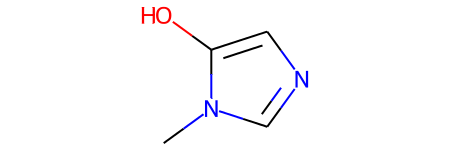

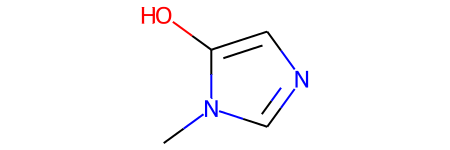

molecule

SMILES: Cn1cncc1O

Num heavy atoms: 7

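Define helper functions

These helper functions convert SMILES to graphs and graphs back to molecule objects.

Representing a molecular graph. Molecules can naturally be expressed as undirected graphs G = (V, E), where V is a set of vertices (atoms) and E a set of edges (bonds). In this implementation, each graph (molecule) is represented by two tensors. The adjacency tensor A, of shape (BOND_DIM, NUM_ATOMS, NUM_ATOMS), encodes for each atom pair whether a bond exists, with the one-hot encoded bond type stretched along an extra (leading) dimension. The feature tensor H, of shape (NUM_ATOMS, ATOM_DIM), one-hot encodes the type of each atom. In both tensors, one extra category marks "non-bond" and "non-atom" respectively, so unbonded pairs and padding slots are also encoded. Note that since RDKit can infer hydrogen atoms, they are excluded from A and H to make modeling easier.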
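As a small illustration of this encoding, the sketch below builds A and H by hand for ethanol (SMILES `CCO`: three heavy atoms joined by two single bonds) without calling RDKit. The atom/bond lists and the `encode` helper are hypothetical; the index conventions (C/N/O/F and SINGLE/DOUBLE/TRIPLE/AROMATIC, plus a final "non-atom"/"non-bond" slot) mirror this tutorial's `atom_mapping` and `bond_mapping`.

```python
import numpy as np

# Hypothetical hand-written example (no RDKit): ethanol, heavy atoms only.
NUM_ATOMS, ATOM_DIM, BOND_DIM = 9, 5, 5
ATOM_INDEX = {"C": 0, "N": 1, "O": 2, "F": 3}  # index 4 = "non-atom"
BOND_INDEX = {"SINGLE": 0, "DOUBLE": 1, "TRIPLE": 2, "AROMATIC": 3}  # index 4 = "non-bond"

atoms = ["C", "C", "O"]                        # atom indices 0, 1, 2
bonds = [(0, 1, "SINGLE"), (1, 2, "SINGLE")]   # (atom i, atom j, bond type)


def encode(atoms, bonds):
    adjacency = np.zeros((BOND_DIM, NUM_ATOMS, NUM_ATOMS), "float32")
    features = np.zeros((NUM_ATOMS, ATOM_DIM), "float32")
    for i, symbol in enumerate(atoms):
        features[i, ATOM_INDEX[symbol]] = 1
    for i, j, bond_type in bonds:
        # Undirected graph: set both (i, j) and (j, i) in the bond's channel
        adjacency[BOND_INDEX[bond_type], [i, j], [j, i]] = 1
    # Atom pairs without any bond go to the last ("non-bond") channel
    adjacency[-1, adjacency.sum(axis=0) == 0] = 1
    # Padding rows without an atom go to the last ("non-atom") column
    features[features.sum(axis=1) == 0, -1] = 1
    return adjacency, features


A, H = encode(atoms, bonds)
print(A.shape, H.shape)  # (5, 9, 9) (9, 5)
```

Every atom pair lands in exactly one channel of A (a bond type or "non-bond"), and the six unused rows of H are marked "non-atom".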

```python
atom_mapping = {
    "C": 0,
    0: "C",
    "N": 1,
    1: "N",
    "O": 2,
    2: "O",
    "F": 3,
    3: "F",
}

bond_mapping = {
    "SINGLE": 0,
    0: Chem.BondType.SINGLE,
    "DOUBLE": 1,
    1: Chem.BondType.DOUBLE,
    "TRIPLE": 2,
    2: Chem.BondType.TRIPLE,
    "AROMATIC": 3,
    3: Chem.BondType.AROMATIC,
}

NUM_ATOMS = 9  # Maximum number of atoms
ATOM_DIM = 4 + 1  # Number of atom types (C, N, O, F) + 1 for "non-atom"
BOND_DIM = 4 + 1  # Number of bond types + 1 for "non-bond"
LATENT_DIM = 64  # Size of the latent space


def smiles_to_graph(smiles):
    # Converts SMILES to molecule object
    molecule = Chem.MolFromSmiles(smiles)

    # Initialize adjacency and feature tensor
    adjacency = np.zeros((BOND_DIM, NUM_ATOMS, NUM_ATOMS), "float32")
    features = np.zeros((NUM_ATOMS, ATOM_DIM), "float32")

    # loop over each atom in molecule
    for atom in molecule.GetAtoms():
        i = atom.GetIdx()
        atom_type = atom_mapping[atom.GetSymbol()]
        features[i] = np.eye(ATOM_DIM)[atom_type]
        # loop over one-hop neighbors
        for neighbor in atom.GetNeighbors():
            j = neighbor.GetIdx()
            bond = molecule.GetBondBetweenAtoms(i, j)
            bond_type_idx = bond_mapping[bond.GetBondType().name]
            adjacency[bond_type_idx, [i, j], [j, i]] = 1

    # Where no bond, add 1 to last channel (indicating "non-bond")
    # Notice: channels-first
    adjacency[-1, np.sum(adjacency, axis=0) == 0] = 1

    # Where no atom, add 1 to last column (indicating "non-atom")
    features[np.where(np.sum(features, axis=1) == 0)[0], -1] = 1

    return adjacency, features
```

```python
def graph_to_molecule(graph):
    # Unpack graph
    adjacency, features = graph

    # RWMol is a molecule object intended to be edited
    molecule = Chem.RWMol()

    # Remove "no atoms" & atoms with no bonds
    keep_idx = np.where(
        (np.argmax(features, axis=1) != ATOM_DIM - 1)
        & (np.sum(adjacency[:-1], axis=(0, 1)) != 0)
    )[0]
    features = features[keep_idx]
    adjacency = adjacency[:, keep_idx, :][:, :, keep_idx]

    # Add atoms to molecule
    for atom_type_idx in np.argmax(features, axis=1):
        atom = Chem.Atom(atom_mapping[atom_type_idx])
        _ = molecule.AddAtom(atom)

    # Add bonds between atoms in molecule; based on the upper triangles
    # of the [symmetric] adjacency tensor
    (bonds_ij, atoms_i, atoms_j) = np.where(np.triu(adjacency) == 1)
    for (bond_ij, atom_i, atom_j) in zip(bonds_ij, atoms_i, atoms_j):
        if atom_i == atom_j or bond_ij == BOND_DIM - 1:
            continue
        bond_type = bond_mapping[bond_ij]
        molecule.AddBond(int(atom_i), int(atom_j), bond_type)

    # Sanitize the molecule; for more information on sanitization, see
    # https://www.rdkit.org/docs/RDKit_Book.html#molecular-sanitization
    flag = Chem.SanitizeMol(molecule, catchErrors=True)
    # Let's be strict. If sanitization fails, return None
    if flag != Chem.SanitizeFlags.SANITIZE_NONE:
        return None

    return molecule


# Test helper functions
graph_to_molecule(smiles_to_graph(smiles))
```
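The bond-extraction step in `graph_to_molecule` relies on `np.triu` acting on the last two axes of a stacked array, so each bond of the symmetric adjacency tensor is read only once per channel. A minimal NumPy check on a hypothetical three-atom graph with two bond channels plus a "non-bond" channel:

```python
import numpy as np

# Hypothetical 3-atom graph: channel 0 = single, channel 1 = double, channel 2 = "non-bond"
BOND_DIM = 3
adjacency = np.zeros((BOND_DIM, 3, 3), "float32")
adjacency[0, [0, 1], [1, 0]] = 1  # single bond between atoms 0 and 1
adjacency[1, [1, 2], [2, 1]] = 1  # double bond between atoms 1 and 2
adjacency[-1, adjacency.sum(axis=0) == 0] = 1  # every other pair is "non-bond"

bonds = []
# np.triu zeroes everything below the diagonal of each channel independently
for bond_ij, atom_i, atom_j in zip(*np.where(np.triu(adjacency) == 1)):
    # Same filter as graph_to_molecule: skip the diagonal and the "non-bond" channel
    if atom_i == atom_j or bond_ij == BOND_DIM - 1:
        continue
    bonds.append((int(bond_ij), int(atom_i), int(atom_j)))

print(bonds)  # [(0, 0, 1), (1, 1, 2)]
```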

Generate training set

To save training time, we will only use a tenth of the QM9 dataset (every tenth molecule).

```python
adjacency_tensor, feature_tensor = [], []
for smiles in data[::10]:
    adjacency, features = smiles_to_graph(smiles)
    adjacency_tensor.append(adjacency)
    feature_tensor.append(features)

adjacency_tensor = np.array(adjacency_tensor)
feature_tensor = np.array(feature_tensor)

print("adjacency_tensor.shape =", adjacency_tensor.shape)
print("feature_tensor.shape =", feature_tensor.shape)
```

```
adjacency_tensor.shape = (13389, 5, 9, 9)
feature_tensor.shape = (13389, 9, 5)
```

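Model

The idea is to implement a generator network and a discriminator network via WGAN-GP, resulting in a generator network that can generate small novel molecules (small graphs).

The generator network needs to map a vector z (for each example in the batch) to a 3-D adjacency tensor (A) and a 2-D feature tensor (H). To do so, z first passes through a stack of fully-connected, tanh-activated layers, whose output is then fed to two separate dense heads. Each head outputs (for each example in the batch) a vector that is reshaped and passed through a softmax to match the shape of the multi-dimensional adjacency or feature tensor. The adjacency output is additionally symmetrized over its two atom dimensions before the softmax.

Since the discriminator network receives a graph (A, H) either from the generator or from the training set as input, we need to implement graph convolutional layers, which allow us to operate on graphs. The input to the discriminator therefore first passes through graph convolutional layers, then an average-pooling layer, and finally a few fully-connected layers. The final output is a scalar (for each example in the batch) indicating the "realness" of the associated input (in this case a "fake" or "real" molecule).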
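The adjacency head's post-processing can be sketched in NumPy as follows. Random logits stand in for the dense layer's output, and `softmax` is a small hypothetical helper rather than the Keras layer. Averaging a tensor with its transpose over the two atom axes makes it symmetric, and a softmax over the bond-type axis then turns each atom pair into a distribution over bond types (including "non-bond").

```python
import numpy as np

rng = np.random.default_rng(0)
BOND_DIM, NUM_ATOMS, ATOM_DIM = 5, 9, 5

# Stand-in for the reshaped output of the adjacency head, batch of 2
logits = rng.normal(size=(2, BOND_DIM, NUM_ATOMS, NUM_ATOMS))


def softmax(x, axis):
    # Subtract the max for numerical stability before exponentiating
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


# Symmetrize over the two atom axes so that (i, j) and (j, i) agree
sym = (logits + logits.transpose(0, 1, 3, 2)) / 2
# Softmax over the bond-type axis (axis=1), as in Softmax(axis=1)
adjacency = softmax(sym, axis=1)

# Feature head: softmax over the atom-type axis (axis=2)
features = softmax(rng.normal(size=(2, NUM_ATOMS, ATOM_DIM)), axis=2)
```

Because the softmax is applied per atom pair, symmetry survives it: pairs (i, j) and (j, i) receive identical inputs and therefore identical probabilities.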

Graph generator

```python
def GraphGenerator(
    dense_units, dropout_rate, latent_dim, adjacency_shape, feature_shape,
):
    z = keras.layers.Input(shape=(latent_dim,))
    # Propagate through one or more densely connected layers
    x = z
    for units in dense_units:
        x = keras.layers.Dense(units, activation="tanh")(x)
        x = keras.layers.Dropout(dropout_rate)(x)

    # Map outputs of previous layer (x) to [continuous] adjacency tensors (x_adjacency)
    x_adjacency = keras.layers.Dense(int(np.prod(adjacency_shape)))(x)
    x_adjacency = keras.layers.Reshape(adjacency_shape)(x_adjacency)
    # Symmetrize tensors in the last two dimensions
    x_adjacency = (x_adjacency + tf.transpose(x_adjacency, (0, 1, 3, 2))) / 2
    x_adjacency = keras.layers.Softmax(axis=1)(x_adjacency)

    # Map outputs of previous layer (x) to [continuous] feature tensors (x_features)
    x_features = keras.layers.Dense(int(np.prod(feature_shape)))(x)
    x_features = keras.layers.Reshape(feature_shape)(x_features)
    x_features = keras.layers.Softmax(axis=2)(x_features)

    return keras.Model(inputs=z, outputs=[x_adjacency, x_features], name="Generator")


generator = GraphGenerator(
    dense_units=[128, 256, 512],
    dropout_rate=0.2,
    latent_dim=LATENT_DIM,
    adjacency_shape=(BOND_DIM, NUM_ATOMS, NUM_ATOMS),
    feature_shape=(NUM_ATOMS, ATOM_DIM),
)
generator.summary()
```

```
Model: "Generator"
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            [(None, 64)]         0
__________________________________________________________________________________________________
dense (Dense)                   (None, 128)          8320        input_1[0][0]
__________________________________________________________________________________________________
dropout (Dropout)               (None, 128)          0           dense[0][0]
__________________________________________________________________________________________________
dense_1 (Dense)                 (None, 256)          33024       dropout[0][0]
__________________________________________________________________________________________________
dropout_1 (Dropout)             (None, 256)          0           dense_1[0][0]
__________________________________________________________________________________________________
dense_2 (Dense)                 (None, 512)          131584      dropout_1[0][0]
__________________________________________________________________________________________________
dropout_2 (Dropout)             (None, 512)          0           dense_2[0][0]
__________________________________________________________________________________________________
dense_3 (Dense)                 (None, 405)          207765      dropout_2[0][0]
__________________________________________________________________________________________________
reshape (Reshape)               (None, 5, 9, 9)      0           dense_3[0][0]
__________________________________________________________________________________________________
tf.compat.v1.transpose (TFOpLam (None, 5, 9, 9)      0           reshape[0][0]
__________________________________________________________________________________________________
tf.__operators__.add (TFOpLambd (None, 5, 9, 9)      0           reshape[0][0]
                                                                 tf.compat.v1.transpose[0][0]
__________________________________________________________________________________________________
dense_4 (Dense)                 (None, 45)           23085       dropout_2[0][0]
__________________________________________________________________________________________________
tf.math.truediv (TFOpLambda)    (None, 5, 9, 9)      0           tf.__operators__.add[0][0]
__________________________________________________________________________________________________
reshape_1 (Reshape)             (None, 9, 5)         0           dense_4[0][0]
__________________________________________________________________________________________________
softmax (Softmax)               (None, 5, 9, 9)      0           tf.math.truediv[0][0]
__________________________________________________________________________________________________
softmax_1 (Softmax)             (None, 9, 5)         0           reshape_1[0][0]
==================================================================================================
Total params: 403,778
Trainable params: 403,778
Non-trainable params: 0
__________________________________________________________________________________________________
```

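Graph discriminator

Graph convolutional layer. The relational graph convolutional layers implement non-linearly transformed neighborhood aggregations. We can define these layers as follows:

$$H^{l+1} = \sigma\left(D^{-1} A H^{l} W^{l}\right)$$

where σ denotes the non-linear transformation (commonly a ReLU activation), A the adjacency tensor, H^{l} the feature tensor at the l-th layer, D^{-1} the inverse diagonal degree tensor of A, and W^{l} the trainable weight tensor at the l-th layer. Specifically, for each bond type (relation), the degree tensor holds on its diagonal the number of bonds attached to each atom. Because A has one channel per bond type, the product is computed separately for each relation (each with its own slice of W^{l}), and the results are summed over relations before σ is applied. Note that D^{-1} is omitted in this tutorial, for two reasons: (1) it is not obvious how to apply this normalization to the continuous adjacency tensors produced by the generator, and (2) the WGAN seems to perform well without the normalization. Furthermore, in contrast to the original paper, no self-connections are defined, since we do not want to train the generator to predict "self-bonding".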
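A minimal NumPy sketch of one such layer, with D^{-1} omitted, no self-connections and no bias (matching the tutorial's defaults), and a random 0/1 adjacency tensor as a stand-in for real input. It shows that the broadcasting form used in `RelationalGraphConvLayer.call` equals an explicit sum over relations r of A_r H W_r, followed by a ReLU.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, bond_dim, num_atoms, atom_dim, units = 2, 5, 9, 5, 8

# Random stand-ins for the adjacency tensor, node features and layer kernel
A = rng.integers(0, 2, size=(batch, bond_dim, num_atoms, num_atoms)).astype(float)
H = rng.normal(size=(batch, num_atoms, atom_dim))
W = rng.normal(size=(bond_dim, atom_dim, units))  # one weight slice per bond type

# Broadcasting form, mirroring the layer's call():
x = A @ H[:, None, :, :]              # aggregate neighbors: (batch, bond_dim, atoms, atom_dim)
x = x @ W                             # per-relation transform: (batch, bond_dim, atoms, units)
H_next = np.maximum(x.sum(axis=1), 0)  # sum over bond types, then ReLU

# The same computation as an explicit sum over relations r
H_loop = np.maximum(sum(A[:, r] @ H @ W[r] for r in range(bond_dim)), 0)
```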

```python
class RelationalGraphConvLayer(keras.layers.Layer):
    def __init__(
        self,
        units=128,
        activation="relu",
        use_bias=False,
        kernel_initializer="glorot_uniform",
        bias_initializer="zeros",
        kernel_regularizer=None,
        bias_regularizer=None,
        **kwargs
    ):
        super().__init__(**kwargs)
        self.units = units
        self.activation = keras.activations.get(activation)
        self.use_bias = use_bias
        self.kernel_initializer = keras.initializers.get(kernel_initializer)
        self.bias_initializer = keras.initializers.get(bias_initializer)
        self.kernel_regularizer = keras.regularizers.get(kernel_regularizer)
        self.bias_regularizer = keras.regularizers.get(bias_regularizer)

    def build(self, input_shape):
        bond_dim = input_shape[0][1]
        atom_dim = input_shape[1][2]

        # One (atom_dim, units) weight matrix per bond type (relation)
        self.kernel = self.add_weight(
            shape=(bond_dim, atom_dim, self.units),
            initializer=self.kernel_initializer,
            regularizer=self.kernel_regularizer,
            trainable=True,
            name="W",
            dtype=tf.float32,
        )

        if self.use_bias:
            self.bias = self.add_weight(
                shape=(bond_dim, 1, self.units),
                initializer=self.bias_initializer,
                regularizer=self.bias_regularizer,
                trainable=True,
                name="b",
                dtype=tf.float32,
            )

        self.built = True

    def call(self, inputs, training=False):
        adjacency, features = inputs
        # Aggregate information from neighbors:
        # (batch, bond_dim, atoms, atoms) @ (batch, 1, atoms, atom_dim)
        # -> (batch, bond_dim, atoms, atom_dim)
        x = tf.matmul(adjacency, features[:, None, :, :])
        # Apply linear transformation -> (batch, bond_dim, atoms, units)
        x = tf.matmul(x, self.kernel)
        if self.use_bias:
            x += self.bias
        # Reduce bond types dim -> (batch, atoms, units)
        x_reduced = tf.reduce_sum(x, axis=1)
        # Apply non-linear transformation
        return self.activation(x_reduced)
```

```python
def GraphDiscriminator(
    gconv_units, dense_units, dropout_rate, adjacency_shape, feature_shape
):
    adjacency = keras.layers.Input(shape=adjacency_shape)
    features = keras.layers.Input(shape=feature_shape)

    # Propagate through one or more graph convolutional layers
    features_transformed = features
    for units in gconv_units:
        features_transformed = RelationalGraphConvLayer(units)(
            [adjacency, features_transformed]
        )

    # Reduce 2-D representation of molecule to 1-D
    x = keras.layers.GlobalAveragePooling1D()(features_transformed)

    # Propagate through one or more densely connected layers
    for units in dense_units:
        x = keras.layers.Dense(units, activation="relu")(x)
        x = keras.layers.Dropout(dropout_rate)(x)

    # For each molecule, output a single scalar value expressing the
    # "realness" of the inputted molecule
    x_out = keras.layers.Dense(1, dtype="float32")(x)

    return keras.Model(inputs=[adjacency, features], outputs=x_out)


discriminator = GraphDiscriminator(
    gconv_units=[128, 128, 128, 128],
    dense_units=[512, 512],
    dropout_rate=0.2,
    adjacency_shape=(BOND_DIM, NUM_ATOMS, NUM_ATOMS),
    feature_shape=(NUM_ATOMS, ATOM_DIM),
)
discriminator.summary()
```

```
Model: "model"
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_2 (InputLayer)            [(None, 5, 9, 9)]    0
__________________________________________________________________________________________________
input_3 (InputLayer)            [(None, 9, 5)]       0
__________________________________________________________________________________________________
relational_graph_conv_layer (Re (None, 9, 128)       3200        input_2[0][0]
                                                                 input_3[0][0]
__________________________________________________________________________________________________
relational_graph_conv_layer_1 ( (None, 9, 128)       81920       input_2[0][0]
                                                                 relational_graph_conv_layer[0][0]
__________________________________________________________________________________________________
relational_graph_conv_layer_2 ( (None, 9, 128)       81920       input_2[0][0]
                                                                 relational_graph_conv_layer_1[0][
__________________________________________________________________________________________________
relational_graph_conv_layer_3 ( (None, 9, 128)       81920       input_2[0][0]
                                                                 relational_graph_conv_layer_2[0][
__________________________________________________________________________________________________
global_average_pooling1d (Globa (None, 128)          0           relational_graph_conv_layer_3[0][
__________________________________________________________________________________________________
dense_5 (Dense)                 (None, 512)          66048       global_average_pooling1d[0][0]
__________________________________________________________________________________________________
dropout_3 (Dropout)             (None, 512)          0           dense_5[0][0]
__________________________________________________________________________________________________
dense_6 (Dense)                 (None, 512)          262656      dropout_3[0][0]
__________________________________________________________________________________________________
dropout_4 (Dropout)             (None, 512)          0           dense_6[0][0]
__________________________________________________________________________________________________
dense_7 (Dense)                 (None, 1)            513         dropout_4[0][0]
==================================================================================================
Total params: 578,177
Trainable params: 578,177
Non-trainable params: 0
__________________________________________________________________________________________________
```
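As a sanity check on this summary, the parameter counts follow directly from the layer shapes. With `use_bias=False`, each `RelationalGraphConvLayer` holds a single kernel of shape (bond_dim, input_dim, units), while each `Dense` layer holds input_dim * units weights plus units biases. The two helper functions below are illustrative only.

```python
BOND_DIM, ATOM_DIM = 5, 5


def rgcn_params(in_dim, units, bond_dim=BOND_DIM):
    # Kernel of shape (bond_dim, in_dim, units), no bias
    return bond_dim * in_dim * units


def dense_params(in_dim, units):
    # Weight matrix (in_dim, units) plus a bias vector (units,)
    return in_dim * units + units


gconv = [rgcn_params(ATOM_DIM, 128)] + [rgcn_params(128, 128)] * 3
dense = [dense_params(128, 512), dense_params(512, 512), dense_params(512, 1)]
total = sum(gconv) + sum(dense)
print(gconv, dense, total)
# [3200, 81920, 81920, 81920] [66048, 262656, 513] 578177
```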

WGAN-GP

```python
class GraphWGAN(keras.Model):
    def __init__(
        self,
        generator,
        discriminator,
        discriminator_steps=1,
        generator_steps=1,
        gp_weight=10,
        **kwargs
    ):
        super().__init__(**kwargs)
        self.generator = generator
        self.discriminator = discriminator
        self.discriminator_steps = discriminator_steps
        self.generator_steps = generator_steps
        self.gp_weight = gp_weight
        self.latent_dim = self.generator.input_shape[-1]

    def compile(self, optimizer_generator, optimizer_discriminator, **kwargs):
        super().compile(**kwargs)
        self.optimizer_generator = optimizer_generator
        self.optimizer_discriminator = optimizer_discriminator
        self.metric_generator = keras.metrics.Mean(name="loss_gen")
        self.metric_discriminator = keras.metrics.Mean(name="loss_dis")

    def train_step(self, inputs):
        if isinstance(inputs[0], tuple):
            inputs = inputs[0]

        graph_real = inputs

        self.batch_size = tf.shape(inputs[0])[0]

        # Train the discriminator for one or more steps
        for _ in range(self.discriminator_steps):
            z = tf.random.normal((self.batch_size, self.latent_dim))

            with tf.GradientTape() as tape:
                graph_generated = self.generator(z, training=True)
                loss = self._loss_discriminator(graph_real, graph_generated)

            grads = tape.gradient(loss, self.discriminator.trainable_weights)
            self.optimizer_discriminator.apply_gradients(
                zip(grads, self.discriminator.trainable_weights)
            )
            self.metric_discriminator.update_state(loss)

        # Train the generator for one or more steps
        for _ in range(self.generator_steps):
            z = tf.random.normal((self.batch_size, self.latent_dim))

            with tf.GradientTape() as tape:
                graph_generated = self.generator(z, training=True)
                loss = self._loss_generator(graph_generated)

            grads = tape.gradient(loss, self.generator.trainable_weights)
            self.optimizer_generator.apply_gradients(
                zip(grads, self.generator.trainable_weights)
            )
            self.metric_generator.update_state(loss)

        return {m.name: m.result() for m in self.metrics}

    def _loss_discriminator(self, graph_real, graph_generated):
        logits_real = self.discriminator(graph_real, training=True)
        logits_generated = self.discriminator(graph_generated, training=True)
        # Wasserstein critic loss: raise scores of real graphs, lower those of generated ones
        loss = tf.reduce_mean(logits_generated) - tf.reduce_mean(logits_real)
        loss_gp = self._gradient_penalty(graph_real, graph_generated)
        return loss + loss_gp * self.gp_weight

    def _loss_generator(self, graph_generated):
        logits_generated = self.discriminator(graph_generated, training=True)
        return -tf.reduce_mean(logits_generated)

    def _gradient_penalty(self, graph_real, graph_generated):
        # Unpack graphs
        adjacency_real, features_real = graph_real
        adjacency_generated, features_generated = graph_generated

        # Generate interpolated graphs (adjacency_interp and features_interp)
        alpha = tf.random.uniform([self.batch_size])
        alpha = tf.reshape(alpha, (self.batch_size, 1, 1, 1))
        adjacency_interp = (adjacency_real * alpha) + (1 - alpha) * adjacency_generated
        alpha = tf.reshape(alpha, (self.batch_size, 1, 1))
        features_interp = (features_real * alpha) + (1 - alpha) * features_generated

        # Compute the logits of interpolated graphs
        with tf.GradientTape() as tape:
            tape.watch(adjacency_interp)
            tape.watch(features_interp)
            logits = self.discriminator(
                [adjacency_interp, features_interp], training=True
            )

        # Compute the gradients with respect to the interpolated graphs
        grads = tape.gradient(logits, [adjacency_interp, features_interp])
        # Compute the gradient penalty; norms are taken over the bond-type axis
        # (one per atom pair) and the atom-type axis (one per atom), then averaged
        grads_adjacency_penalty = (1 - tf.norm(grads[0], axis=1)) ** 2
        grads_features_penalty = (1 - tf.norm(grads[1], axis=2)) ** 2
        return tf.reduce_mean(
            tf.reduce_mean(grads_adjacency_penalty, axis=(-2, -1))
            + tf.reduce_mean(grads_features_penalty, axis=(-1))
        )
```
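`_gradient_penalty` takes gradient norms over the bond-type axis and the atom-type axis separately, which is a variant of the penalty in the WGAN-GP paper (one norm of each sample's full input gradient). The toy sketch below compares the two in NumPy under a loudly stated assumption: a hypothetical linear critic f(A, H) = sum(A * w_a) + sum(H * w_h), whose gradient with respect to (A, H) is simply (w_a, w_h) at every input, so no autodiff is needed.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, bond_dim, n, atom_dim = 4, 5, 9, 5

# Hypothetical linear critic weights; its input gradient is (w_a, w_h) everywhere
w_a = 0.3 * rng.normal(size=(bond_dim, n, n))
w_h = 0.3 * rng.normal(size=(n, atom_dim))

# Interpolate "real" and "generated" adjacency tensors with one alpha per sample
A_real = rng.random((batch, bond_dim, n, n))
A_fake = rng.random((batch, bond_dim, n, n))
alpha = rng.random(batch).reshape(batch, 1, 1, 1)
A_interp = alpha * A_real + (1 - alpha) * A_fake

# Per-sample gradients of the linear critic at the interpolated points
grad_a = np.broadcast_to(w_a, A_interp.shape)
grad_h = np.broadcast_to(w_h, (batch, n, atom_dim))

# Tutorial-style penalty: norm per atom pair (bond axis) and per atom (atom-type axis)
pen_a = (1 - np.linalg.norm(grad_a, axis=1)) ** 2  # (batch, n, n)
pen_h = (1 - np.linalg.norm(grad_h, axis=2)) ** 2  # (batch, n)
tutorial_gp = np.mean(pen_a.mean(axis=(-2, -1)) + pen_h.mean(axis=-1))

# Standard WGAN-GP penalty: one norm of the whole flattened gradient per sample
flat = np.concatenate([grad_a.reshape(batch, -1), grad_h.reshape(batch, -1)], axis=1)
standard_gp = np.mean((1 - np.linalg.norm(flat, axis=1)) ** 2)
print(tutorial_gp, standard_gp)
```

Both push gradient norms toward 1, but on different groupings of the input entries, so they generally yield different penalty values for the same critic.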

Train the model

To save time (if run on a CPU), we will only train the model for 10 epochs.

```python
wgan = GraphWGAN(generator, discriminator, discriminator_steps=1)

wgan.compile(
    optimizer_generator=keras.optimizers.Adam(5e-4),
    optimizer_discriminator=keras.optimizers.Adam(5e-4),
)

wgan.fit([adjacency_tensor, feature_tensor], epochs=10, batch_size=16)
```

```
Epoch 1/10
837/837 [==============================] - 197s 226ms/step - loss_gen: 2.4626 - loss_dis: -4.3158
Epoch 2/10
837/837 [==============================] - 188s 225ms/step - loss_gen: 1.2832 - loss_dis: -1.3941
Epoch 3/10
837/837 [==============================] - 199s 237ms/step - loss_gen: 0.6742 - loss_dis: -1.2663
Epoch 4/10
837/837 [==============================] - 187s 224ms/step - loss_gen: 0.5090 - loss_dis: -1.6628
Epoch 5/10
837/837 [==============================] - 187s 223ms/step - loss_gen: 0.3686 - loss_dis: -1.4759
Epoch 6/10
837/837 [==============================] - 199s 237ms/step - loss_gen: 0.6925 - loss_dis: -1.5122
Epoch 7/10
837/837 [==============================] - 194s 232ms/step - loss_gen: 0.3966 - loss_dis: -1.5041
Epoch 8/10
837/837 [==============================] - 195s 233ms/step - loss_gen: 0.3595 - loss_dis: -1.6277
Epoch 9/10
837/837 [==============================] - 194s 232ms/step - loss_gen: 0.5862 - loss_dis: -1.7277
Epoch 10/10
837/837 [==============================] - 185s 221ms/step - loss_gen: -0.1642 - loss_dis: -1.5273

<keras.callbacks.History at 0x7ff8daed3a90>
```
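The 837 steps per epoch in the log follow from the training-set size and the batch size (the last batch is partial). With `discriminator_steps=1` and the default `generator_steps=1`, each step performs one discriminator update and one generator update:

```python
import math

num_graphs, batch_size, epochs = 13389, 16, 10  # values from this tutorial
steps_per_epoch = math.ceil(num_graphs / batch_size)
# One discriminator update + one generator update per step
updates = steps_per_epoch * epochs * (1 + 1)
print(steps_per_epoch, updates)  # 837 16740
```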

使用產生器取樣新穎分子

def sample(generator, batch_size):

z = tf.random.normal((batch_size, LATENT_DIM))

graph = generator.predict(z)

# obtain one-hot encoded adjacency tensor

adjacency = tf.argmax(graph[0], axis=1)

adjacency = tf.one_hot(adjacency, depth=BOND_DIM, axis=1)

# Remove potential self-loops from adjacency

adjacency = tf.linalg.set_diag(adjacency, tf.zeros(tf.shape(adjacency)[:-1]))

# obtain one-hot encoded feature tensor

features = tf.argmax(graph[1], axis=2)

features = tf.one_hot(features, depth=ATOM_DIM, axis=2)

return [

graph_to_molecule([adjacency[i].numpy(), features[i].numpy()])

for i in range(batch_size)

]

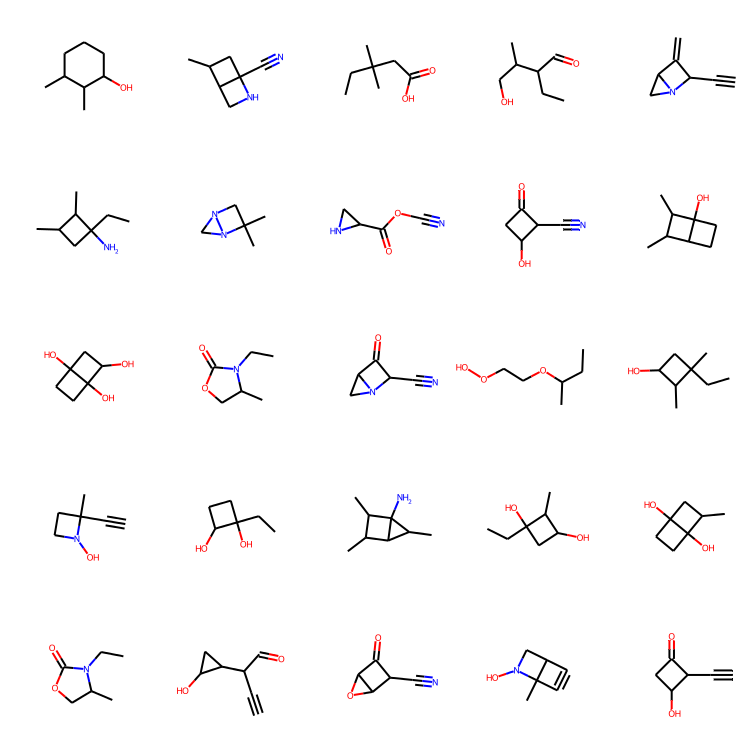

molecules = sample(wgan.generator, batch_size=48)

MolsToGridImage(

[m for m in molecules if m is not None][:25], molsPerRow=5, subImgSize=(150, 150)

)
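The discretization in `sample` (argmax over bond types, back to one-hot, then zeroing the diagonal) can be mirrored in NumPy to check its invariants. Random probabilities stand in for generator output, and `np.eye(BOND_DIM)[idx]` followed by a transpose to channels-first plays the role of `tf.one_hot(..., axis=1)`; this is an illustrative sketch, not the tutorial's code.

```python
import numpy as np

rng = np.random.default_rng(1)
BOND_DIM, NUM_ATOMS = 5, 9

# Stand-in for the generator's adjacency output (batch of 3)
probs = rng.random((3, BOND_DIM, NUM_ATOMS, NUM_ATOMS))

idx = probs.argmax(axis=1)                             # most likely bond type per pair
one_hot = np.eye(BOND_DIM)[idx].transpose(0, 3, 1, 2)  # back to (batch, bond, atoms, atoms)
diag = np.arange(NUM_ATOMS)
one_hot[:, :, diag, diag] = 0                          # drop self-loops in every channel
```

After this step every off-diagonal atom pair has exactly one active channel, while diagonal entries have none; `graph_to_molecule` skips the diagonal anyway.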

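Concluding thoughts

Inspecting the results. Ten epochs of training seemed enough to generate some decent-looking molecules! Notice that, compared to the MolGAN paper, the uniqueness of the generated molecules in this tutorial seems really high, which is great. (This is judged by visual inspection of the sampled grid; uniqueness is not measured quantitatively in this tutorial.)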
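To put a number on uniqueness rather than judging it visually, one could compute sample-level metrics. The sketch below assumes the generated molecules have already been converted to canonical SMILES (for example with RDKit's `Chem.MolToSmiles`), with `None` marking invalid samples. The `sample_metrics` function and the example data are hypothetical; the definitions of validity, uniqueness and novelty roughly follow common usage in the MolGAN paper.

```python
def sample_metrics(smiles_list, training_smiles):
    # validity   = valid samples / all samples
    # uniqueness = distinct valid samples / valid samples
    # novelty    = valid samples absent from the training set / valid samples
    valid = [s for s in smiles_list if s is not None]
    if not valid:
        return {"validity": 0.0, "uniqueness": 0.0, "novelty": 0.0}
    training = set(training_smiles)
    return {
        "validity": len(valid) / len(smiles_list),
        "uniqueness": len(set(valid)) / len(valid),
        "novelty": sum(s not in training for s in valid) / len(valid),
    }


# Hypothetical example: 5 samples, one invalid, one duplicate, one seen in training
metrics = sample_metrics(["CCO", "CCO", None, "c1ccccc1", "CCN"], ["CCO"])
print(metrics)  # {'validity': 0.8, 'uniqueness': 0.75, 'novelty': 0.5}
```

Comparing canonical SMILES strings is only meaningful if every string was produced by the same canonicalization, which is why the conversion is assumed to happen beforehand.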

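What we've learned, and prospects. In this tutorial, we successfully implemented a generative model for molecular graphs, which allowed us to generate novel molecules. In the future, it would be interesting to implement generative models that can modify existing molecules (for instance, to optimize the solubility or protein binding of an existing molecule). For that, however, a reconstruction loss would likely be needed, which is tricky to implement because there is no easy and obvious way to compute the similarity between two molecular graphs.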

Example available on HuggingFace

| Trained Model | Demo |
|---|---|