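Using the Forward-Forward Algorithm for Image Classification

Author: Suvaditya Mukherjee

Date created: 2023/01/08

Last modified: 2024/09/17

Description: Training a Dense-layer model using the Forward-Forward algorithm.

Introduction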

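The following example explores how to use the Forward-Forward algorithm to perform training instead of the traditionally used method of backpropagation, as proposed by Hinton in The Forward-Forward Algorithm: Some Preliminary Investigations (2022).

The concept was inspired by the understanding behind Boltzmann Machines. Backpropagation involves calculating the difference between actual and predicted output via a cost function to adjust network weights. The FF algorithm, on the other hand, suggests the analogy of neurons which get "excited" when they see a particular recognized combination of an image and its correct corresponding label.

This method takes certain inspiration from the biological learning process that occurs in the cortex. A significant advantage of this method is that backpropagation through the network is no longer needed, and that weight updates are local to the layer itself.

As this is still an experimental method, it does not yield state-of-the-art results. With proper tuning, however, it is expected to come close to them. Through this example, we will examine a process that allows us to implement the Forward-Forward algorithm within the layers themselves, instead of the traditional method of relying on a global loss function and optimizer.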

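This tutorial is structured as follows:

- Perform necessary imports

- Load the MNIST dataset

- Visualize random samples from the MNIST dataset

- Define a FFDense layer to override call and implement a custom forward_forward method which performs weight updates

- Define a FFNetwork model to override train_step and predict, and implement 2 custom functions for per-sample prediction and overlaying labels

- Convert MNIST from NumPy arrays to tf.data.Dataset

- Fit the network

- Visualize results

- Perform inference on test samples

As this example requires the customization of certain core functions with keras.layers.Layer and keras.models.Model, refer to the Keras guides on customizing what happens in model.fit() and on making new layers and models via subclassing for a primer on how to do so.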

Setup imports

import os

os.environ["KERAS_BACKEND"] = "tensorflow"

import tensorflow as tf
import keras
from keras import ops
import numpy as np
import matplotlib.pyplot as plt
from sklearn.metrics import accuracy_score
import random
from tensorflow.compiler.tf2xla.python import xla

載入資料集並視覺化資料

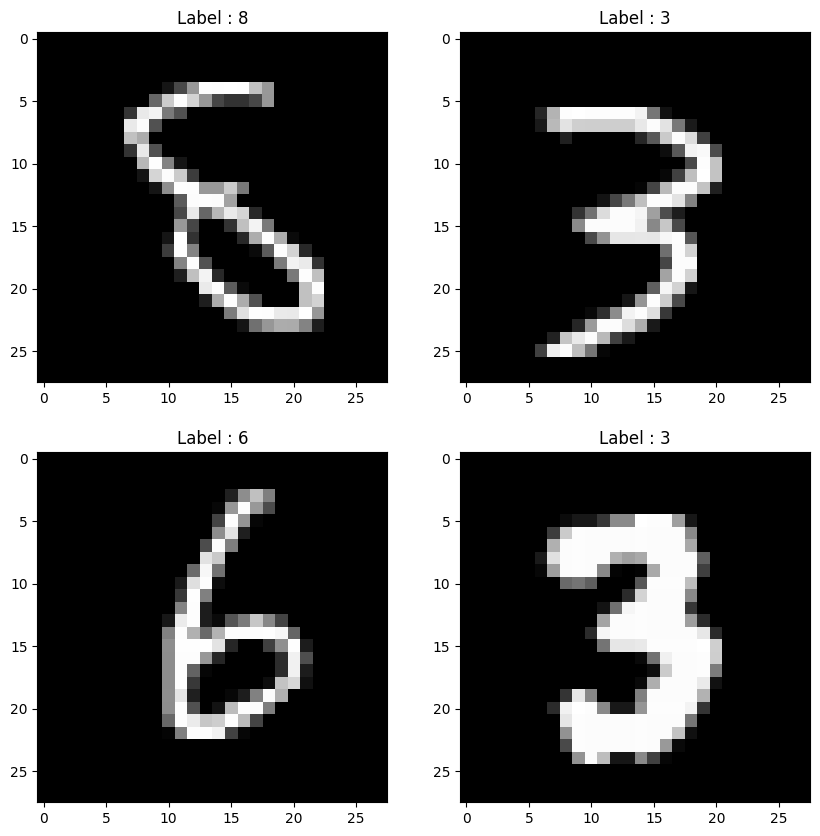

我們使用 keras.datasets.mnist.load_data() 公用程式直接以 NumPy 陣列的形式提取 MNIST 資料集。然後,我們將其安排為訓練和測試分割的形式。

載入資料集後,我們從訓練集中隨機選取 4 個樣本,並使用 matplotlib.pyplot 將其視覺化。

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

print("4 Random Training samples and labels")
idx1, idx2, idx3, idx4 = random.sample(range(0, x_train.shape[0]), 4)

img1 = (x_train[idx1], y_train[idx1])
img2 = (x_train[idx2], y_train[idx2])
img3 = (x_train[idx3], y_train[idx3])
img4 = (x_train[idx4], y_train[idx4])

imgs = [img1, img2, img3, img4]

plt.figure(figsize=(10, 10))

for idx, item in enumerate(imgs):
    image, label = item[0], item[1]
    plt.subplot(2, 2, idx + 1)
    plt.imshow(image, cmap="gray")
    plt.title(f"Label : {label}")
plt.show()

4 Random Training samples and labels

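Define FFDense custom layer

In this custom layer, we have a base keras.layers.Dense object which acts as the base Dense layer within. Since weight updates will happen within the layer itself, we add a keras.optimizers.Optimizer object that is accepted from the user. Here, we use Adam as our optimizer with a rather high learning rate of 0.03.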

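Following the algorithm's specifics, we must set a threshold parameter that is used to make the positive-negative decision in each prediction. In the code below it is set to 1.5. As the epochs are localized to the layer itself, we also set a num_epochs parameter (defaults to 50).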
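As a rough illustration of how the threshold is used, the sketch below compares a layer's goodness against the threshold of 1.5. It assumes goodness is the mean of the squared activations, as in the layer code of this example. The helper names goodness and is_positive and the toy activations are hypothetical and are not part of the example's code.

```python
import numpy as np

THRESHOLD = 1.5  # same value the FFDense layer in this example stores


def goodness(activations):
    """Mean of squared activations, one value per sample (row)."""
    return np.mean(np.square(activations), axis=1)


def is_positive(activations, threshold=THRESHOLD):
    """Hypothetical helper: True where goodness exceeds the threshold."""
    return goodness(activations) > threshold


# Two toy activation vectors: one weakly active, one strongly active.
acts = np.array([[0.1, 0.2, 0.0, 0.3], [2.0, 1.5, 1.0, 2.5]])
print(goodness(acts))
print(is_positive(acts))
```

Only the strongly active row ends up above the threshold, which is the behavior the layer is trained to produce for positive samples.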

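We override the call method in order to perform a normalization over the complete input space, followed by running it through the base Dense layer and a ReLU activation, as would happen in a normal Dense layer call.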
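The normalization step can be sketched in NumPy as follows. This is an illustration only: normalize_rows is a hypothetical name, and the epsilon of 1e-4 mirrors the constant used in the layer's call method.

```python
import numpy as np


def normalize_rows(x, eps=1e-4):
    """Divide each row by its L2 norm (plus a small epsilon for stability)."""
    norm = np.linalg.norm(x, ord=2, axis=1, keepdims=True)
    return x / (norm + eps)


# Rows 0 and 2 point in the same direction but differ in length by 10x.
x = np.array([[3.0, 4.0], [0.0, 0.0], [30.0, 40.0]])
x_dir = normalize_rows(x)
print(x_dir)
```

After normalization the first and third rows are almost identical, so the layer only sees the direction of its input and not its length. The epsilon keeps the all-zero row from producing a division by zero.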

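We implement the Forward-Forward algorithm in the forward_forward method, which accepts 2 kinds of input tensors, representing the positive and negative samples respectively. We write a custom training loop here with the use of tf.GradientTape(), within which we calculate a loss per sample by taking the distance of the prediction from the threshold to understand the error, and take its mean to get a mean_loss metric.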
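The loss described above can be written compactly in NumPy. The sketch below is an assumption-laden illustration rather than the example's code: ff_loss is a hypothetical name, and np.logaddexp(0, m) is used as a numerically stable stand-in for log(1 + exp(m)).

```python
import numpy as np


def ff_loss(g_pos, g_neg, threshold=1.5):
    """Mean softplus loss over positive and negative goodness values."""
    # Positive samples are penalized for falling below the threshold,
    # negative samples for rising above it.
    margins = np.concatenate([-g_pos + threshold, g_neg - threshold])
    # log(1 + exp(m)) computed stably as logaddexp(0, m).
    return np.mean(np.logaddexp(0.0, margins))


# Well-separated goodness values versus the reversed (wrong) situation.
good = ff_loss(g_pos=np.array([4.0, 5.0]), g_neg=np.array([0.1, 0.2]))
bad = ff_loss(g_pos=np.array([0.1, 0.2]), g_neg=np.array([4.0, 5.0]))
print(good, bad)
```

The loss is small when positive samples have goodness well above the threshold and negative samples well below it, and large in the reverse case.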

With the help of tf.GradientTape() we calculate the gradient updates for the trainable base Dense layer and apply them using the layer's local optimizer.
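To make the idea of a layer-local update concrete, the following is a minimal NumPy sketch, not the example's TensorFlow code. It assumes plain gradient descent in place of Adam, skips the input normalization, and derives the gradient by hand instead of using a gradient tape. The names forward and local_step and the random toy data are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
THRESHOLD = 1.5


def forward(x, w, b):
    """ReLU dense layer; returns activations and per-sample goodness."""
    h = np.maximum(x @ w + b, 0.0)
    return h, np.mean(h**2, axis=1)


def local_step(x_pos, x_neg, w, b, lr=0.1):
    """One gradient-descent step on the layer-local loss; returns the loss."""
    x = np.concatenate([x_pos, x_neg])
    # sign is -1 for positive samples and +1 for negative samples.
    sign = np.concatenate([-np.ones(len(x_pos)), np.ones(len(x_neg))])
    h, g = forward(x, w, b)
    margin = sign * (g - THRESHOLD)
    loss = np.mean(np.logaddexp(0.0, margin))
    # d(loss)/d(g): the derivative of softplus is the sigmoid.
    dg = sign / (1.0 + np.exp(-margin)) / len(x)
    # d(g)/d(h) = 2h / units. Because h is zero wherever the ReLU is
    # inactive, this product is already zero there.
    dz = dg[:, None] * 2.0 * h / h.shape[1]
    # Update only this layer's own parameters (in place).
    w -= lr * (x.T @ dz)
    b -= lr * dz.sum(axis=0)
    return loss


x_pos = rng.normal(size=(8, 5))
x_neg = rng.normal(size=(8, 5))
w = rng.normal(scale=0.5, size=(5, 4))
b = np.zeros(4)
losses = [local_step(x_pos, x_neg, w, b) for _ in range(50)]
print(losses[0], losses[-1])
```

No gradient is passed to any other layer here: the update uses only this layer's inputs, activations, and parameters, which is the property the text refers to as local.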

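Finally, we return the call result as the Dense results of the positive and negative samples, together with the current value of the layer's loss_metric, which averages the mean_loss values recorded so far.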

class FFDense(keras.layers.Layer):
    """
    A custom ForwardForward-enabled Dense layer. It has an implementation of the
    Forward-Forward network internally for use.
    This layer must be used in conjunction with the `FFNetwork` model.
    """

    def __init__(
        self,
        units,
        init_optimizer,
        loss_metric,
        num_epochs=50,
        use_bias=True,
        kernel_initializer="glorot_uniform",
        bias_initializer="zeros",
        kernel_regularizer=None,
        bias_regularizer=None,
        **kwargs,
    ):
        super().__init__(**kwargs)
        self.dense = keras.layers.Dense(
            units=units,
            use_bias=use_bias,
            kernel_initializer=kernel_initializer,
            bias_initializer=bias_initializer,
            kernel_regularizer=kernel_regularizer,
            bias_regularizer=bias_regularizer,
        )
        self.relu = keras.layers.ReLU()
        self.optimizer = init_optimizer()
        self.loss_metric = loss_metric
        self.threshold = 1.5
        self.num_epochs = num_epochs

    # We perform a normalization step before we run the input through the Dense
    # layer.

    def call(self, x):
        x_norm = ops.norm(x, ord=2, axis=1, keepdims=True)
        x_norm = x_norm + 1e-4
        x_dir = x / x_norm
        res = self.dense(x_dir)
        return self.relu(res)

    # The Forward-Forward algorithm is below. We first perform the Dense-layer
    # operation and then get a Mean Square value for all positive and negative
    # samples respectively.
    # The custom loss function finds the distance between the Mean-squared
    # result and the threshold value we set (a hyperparameter) that will define
    # whether the prediction is positive or negative in nature. Once the loss is
    # calculated, we get a mean across the entire batch combined and perform a
    # gradient calculation and optimization step. This does not technically
    # qualify as backpropagation since there is no gradient being
    # sent to any previous layer and is completely local in nature.

    def forward_forward(self, x_pos, x_neg):
        for i in range(self.num_epochs):
            with tf.GradientTape() as tape:
                g_pos = ops.mean(ops.power(self.call(x_pos), 2), 1)
                g_neg = ops.mean(ops.power(self.call(x_neg), 2), 1)

                loss = ops.log(
                    1
                    + ops.exp(
                        ops.concatenate(
                            [-g_pos + self.threshold, g_neg - self.threshold], 0
                        )
                    )
                )
                mean_loss = ops.cast(ops.mean(loss), dtype="float32")
                self.loss_metric.update_state([mean_loss])
            gradients = tape.gradient(mean_loss, self.dense.trainable_weights)
            self.optimizer.apply_gradients(zip(gradients, self.dense.trainable_weights))
        return (
            ops.stop_gradient(self.call(x_pos)),
            ops.stop_gradient(self.call(x_neg)),
            self.loss_metric.result(),
        )

Define the FFNetwork custom model

With our custom layer defined, we also need to override the train_step method and define a custom keras.models.Model that works with our FFDense layer.

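For this algorithm, we must "embed" the labels onto the original image. To do so, we exploit the structure of MNIST images, where the top-left 10 pixels are always zeros. We use that as a label space in order to visually one-hot-encode the labels within the image itself. This action is performed by the overlay_y_on_x function.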
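The label embedding can be sketched in NumPy as shown below. This is an illustration under assumptions: overlay_label is a hypothetical stand-in for the overlay_y_on_x method, and the random vector stands in for a flattened 28x28 MNIST image.

```python
import numpy as np


def overlay_label(flat_image, label, num_classes=10):
    """Return a copy whose first `num_classes` pixels one-hot encode `label`."""
    out = flat_image.copy()
    out[:num_classes] = 0.0
    out[label] = flat_image.max()  # the "on" pixel uses the image's peak value
    return out


rng = np.random.default_rng(0)
image = rng.random(784)  # stand-in for a flattened 28x28 image
encoded = overlay_label(image, label=3)
print(encoded[:10])
```

Only the first 10 values change. The rest of the image is left untouched, so the same image can be paired with any candidate label.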

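We break down the prediction function with a per-sample prediction function, which is then called over the entire test set by the overridden predict() function. The prediction is performed here by measuring the excitation of the neurons per layer for each image. This is then summed over all layers to calculate a network-wide "goodness score". The label with the highest "goodness score" is then chosen as the sample prediction.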
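The prediction procedure can be illustrated with a small NumPy sketch. It is not the example's implementation: the weights are random and untrained, the layers have no bias, and the names layer_forward and predict_label are hypothetical. It only shows the control flow of trying each label and summing goodness over layers.

```python
import numpy as np

rng = np.random.default_rng(0)


def layer_forward(x, w):
    """Normalize the input vector, then apply a ReLU dense layer (no bias)."""
    x = x / (np.linalg.norm(x) + 1e-4)
    return np.maximum(x @ w, 0.0)


def predict_label(flat_image, weights, num_classes=10):
    """Try every label and return the one with the highest summed goodness."""
    scores = []
    for label in range(num_classes):
        h = flat_image.copy()
        h[:num_classes] = 0.0
        h[label] = flat_image.max()  # overlay the candidate label
        total = 0.0
        for w in weights:
            h = layer_forward(h, w)
            total += np.mean(h**2)  # goodness of this layer
        scores.append(total)
    return int(np.argmax(scores)), scores


weights = [rng.normal(size=(784, 32)), rng.normal(size=(32, 32))]  # untrained
label, scores = predict_label(rng.random(784), weights)
print(label, len(scores))
```

Note that prediction needs one forward pass per candidate label, so inference for 10 classes costs 10 passes through the network per image.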

The train_step function is overridden to act as the main controlling loop for running training on each layer, as per the number of epochs per layer.
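Inside train_step, negative samples are built by overlaying shuffled labels on the same images. The NumPy sketch below, using made-up labels, shows one consequence: some shuffled labels still coincide with the true label. The shift-based variant is a hypothetical alternative and is not what the example does.

```python
import numpy as np

rng = np.random.default_rng(0)
y = rng.integers(0, 10, size=1000)  # stand-in labels

# What the example does: reuse the batch's labels in shuffled order.
shuffled = rng.permutation(y)
collisions = np.mean(shuffled == y)  # fraction that is still the true label

# Hypothetical alternative: shift each label by 1..9 so it is always wrong.
always_wrong = (y + rng.integers(1, 10, size=y.shape)) % 10

print(f"shuffled labels equal to the true label: {collisions:.1%}")
print(f"shifted labels equal to the true label: {np.mean(always_wrong == y):.1%}")
```

With shuffling, a fraction of the "negative" samples is in fact correctly labeled, which may add some noise to the training signal.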

class FFNetwork(keras.Model):
    """
    A `keras.Model` that supports a `FFDense` network creation. This model
    can work for any kind of classification task. It has an internal
    implementation with some details specific to the MNIST dataset which can be
    changed as per the use-case.
    """

    # Since each layer runs gradient-calculation and optimization locally, each
    # layer has its own optimizer that we pass. As a standard choice, we pass
    # the `Adam` optimizer with a default learning rate of 0.03 as that was
    # found to be the best rate after experimentation.
    # Loss is tracked using `loss_var` and `loss_count` variables.

    def __init__(
        self,
        dims,
        init_layer_optimizer=lambda: keras.optimizers.Adam(learning_rate=0.03),
        **kwargs,
    ):
        super().__init__(**kwargs)
        self.init_layer_optimizer = init_layer_optimizer
        self.loss_var = keras.Variable(0.0, trainable=False, dtype="float32")
        self.loss_count = keras.Variable(0.0, trainable=False, dtype="float32")
        self.layer_list = [keras.Input(shape=(dims[0],))]
        self.metrics_built = False
        for d in range(len(dims) - 1):
            self.layer_list += [
                FFDense(
                    dims[d + 1],
                    init_optimizer=self.init_layer_optimizer,
                    loss_metric=keras.metrics.Mean(),
                )
            ]

    # This function makes a dynamic change to the image wherein the labels are
    # put on top of the original image (for this example, as MNIST has 10
    # unique labels, we take the top-left corner's first 10 pixels). This
    # function returns the original data tensor with the first 10 pixels being
    # a pixel-based one-hot representation of the labels.

    @tf.function(reduce_retracing=True)
    def overlay_y_on_x(self, data):
        X_sample, y_sample = data
        max_sample = ops.amax(X_sample, axis=0, keepdims=True)
        max_sample = ops.cast(max_sample, dtype="float64")
        X_zeros = ops.zeros([10], dtype="float64")
        X_update = xla.dynamic_update_slice(X_zeros, max_sample, [y_sample])
        X_sample = xla.dynamic_update_slice(X_sample, X_update, [0])
        return X_sample, y_sample

    # A custom `predict_one_sample` performs predictions by passing the images
    # through the network, measures the results produced by each layer (i.e.
    # how high/low the output values are with respect to the set threshold for
    # each label) and then simply finding the label with the highest values.
    # In such a case, the images are tested for their 'goodness' with all
    # labels.

    @tf.function(reduce_retracing=True)
    def predict_one_sample(self, x):
        goodness_per_label = []
        x = ops.reshape(x, [ops.shape(x)[0] * ops.shape(x)[1]])
        for label in range(10):
            h, label = self.overlay_y_on_x(data=(x, label))
            h = ops.reshape(h, [-1, ops.shape(h)[0]])
            goodness = []
            for layer_idx in range(1, len(self.layer_list)):
                layer = self.layer_list[layer_idx]
                h = layer(h)
                goodness += [ops.mean(ops.power(h, 2), 1)]
            goodness_per_label += [ops.expand_dims(ops.sum(goodness, keepdims=True), 1)]
        goodness_per_label = tf.concat(goodness_per_label, 1)
        return ops.cast(ops.argmax(goodness_per_label, 1), dtype="float64")

    def predict(self, data):
        x = data
        preds = list()
        preds = ops.vectorized_map(self.predict_one_sample, x)
        return np.asarray(preds, dtype=int)

    # This custom `train_step` function overrides the internal `train_step`
    # implementation. We take all the input image tensors, flatten them and
    # subsequently produce positive and negative samples on the images.
    # A positive sample is an image that has the right label encoded on it with
    # the `overlay_y_on_x` function. A negative sample is an image that has an
    # erroneous label present on it.
    # With the samples ready, we pass them through each `FFDense` layer and
    # perform the Forward-Forward computation on it. The returned loss is the
    # final loss value over all the layers.

    @tf.function(jit_compile=False)
    def train_step(self, data):
        x, y = data

        if not self.metrics_built:
            # build metrics to ensure they can be queried without erroring out.
            # We can't update the metrics' state, as we would usually do, since
            # we do not perform predictions within the train step
            for metric in self.metrics:
                if hasattr(metric, "build"):
                    metric.build(y, y)
            self.metrics_built = True

        # Flatten op
        x = ops.reshape(x, [-1, ops.shape(x)[1] * ops.shape(x)[2]])

        x_pos, y = ops.vectorized_map(self.overlay_y_on_x, (x, y))

        random_y = tf.random.shuffle(y)
        x_neg, y = tf.map_fn(self.overlay_y_on_x, (x, random_y))

        h_pos, h_neg = x_pos, x_neg

        for idx, layer in enumerate(self.layers):
            if isinstance(layer, FFDense):
                print(f"Training layer {idx+1} now : ")
                h_pos, h_neg, loss = layer.forward_forward(h_pos, h_neg)
                self.loss_var.assign_add(loss)
                self.loss_count.assign_add(1.0)
            else:
                print(f"Passing layer {idx+1} now : ")
                x = layer(x)
        mean_res = ops.divide(self.loss_var, self.loss_count)
        return {"FinalLoss": mean_res}

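Convert MNIST NumPy arrays to tf.data.Dataset

We now perform some preliminary processing on the NumPy arrays and then convert them into the tf.data.Dataset format, which allows for optimized loading.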
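The preliminary processing amounts to rescaling pixel values to the range [0, 1]. The NumPy sketch below uses randomly generated stand-in images instead of MNIST to show the effect of astype(float) / 255 on the dtype and value range.

```python
import numpy as np

rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)  # fake images

scaled = raw.astype(float) / 255  # Python float maps to NumPy float64
print(scaled.dtype, scaled.min(), scaled.max())
```

The result is float64, which is consistent with the float64 tensors that overlay_y_on_x creates when it writes the label pixels into the image.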

x_train = x_train.astype(float) / 255
x_test = x_test.astype(float) / 255
y_train = y_train.astype(int)
y_test = y_test.astype(int)

train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train))
test_dataset = tf.data.Dataset.from_tensor_slices((x_test, y_test))

train_dataset = train_dataset.batch(60000)
test_dataset = test_dataset.batch(10000)

擬合網路並視覺化結果

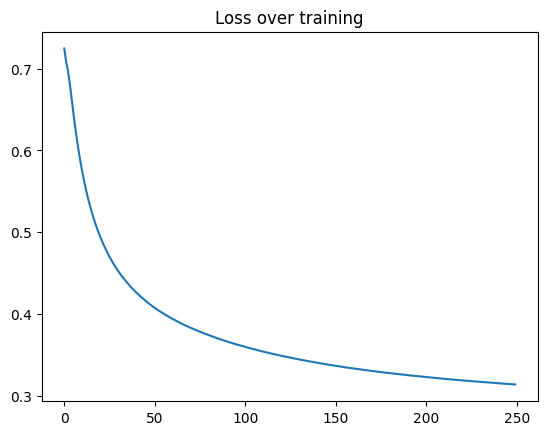

在執行完所有先前的設定後,我們現在要執行 model.fit() 並執行 250 個模型 epoch,這將在每一層執行 50 * 250 個 epoch。我們可以看到繪製的損失曲線,因為每一層都接受了訓練。
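The arithmetic behind this count can be spelled out as follows. The variable names are illustrative; the values are the ones used in this example (a single batch holding all 60000 training images, 250 model epochs, and 50 local epochs per layer).

```python
import math

num_samples = 60000      # MNIST training images
batch_size = 60000       # value passed to train_dataset.batch(...)
model_epochs = 250       # epochs passed to model.fit(...)
layer_epochs = 50        # default num_epochs inside each FFDense layer

steps_per_epoch = math.ceil(num_samples / batch_size)
updates_per_layer = model_epochs * steps_per_epoch * layer_epochs
print(steps_per_epoch, updates_per_layer)  # 1 12500
```

Because the whole training set forms one batch, each model epoch is a single step, which matches the 1/1 progress indicator in the training log.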

model = FFNetwork(dims=[784, 500, 500])

model.compile(
    optimizer=keras.optimizers.Adam(learning_rate=0.03),
    loss="mse",
    jit_compile=False,
    metrics=[],
)

epochs = 250
history = model.fit(train_dataset, epochs=epochs)

Epoch 1/250

Training layer 1 now :

Training layer 2 now :

Training layer 1 now :

Training layer 2 now :

1/1 ━━━━━━━━━━━━━━━━━━━━ 90s 90s/step - FinalLoss: 0.7247

Epoch 2/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.7089

Epoch 3/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.6978

Epoch 4/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.6827

Epoch 5/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.6644

Epoch 6/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.6462

Epoch 7/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.6290

Epoch 8/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.6131

Epoch 9/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5986

Epoch 10/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5853

Epoch 11/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5731

Epoch 12/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5621

Epoch 13/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5519

Epoch 14/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5425

Epoch 15/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5338

Epoch 16/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5259

Epoch 17/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5186

Epoch 18/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5117

Epoch 19/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.5052

Epoch 20/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4992

Epoch 21/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4935

Epoch 22/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4883

Epoch 23/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4833

Epoch 24/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4786

Epoch 25/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4741

Epoch 26/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4698

Epoch 27/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4658

Epoch 28/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4620

Epoch 29/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4584

Epoch 30/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4550

Epoch 31/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4517

Epoch 32/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4486

Epoch 33/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4456

Epoch 34/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4429

Epoch 35/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4401

Epoch 36/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4375

Epoch 37/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4350

Epoch 38/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4325

Epoch 39/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4302

Epoch 40/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4279

Epoch 41/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4258

Epoch 42/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4236

Epoch 43/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4216

Epoch 44/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4197

Epoch 45/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4177

Epoch 46/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4159

Epoch 47/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4141

Epoch 48/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4124

Epoch 49/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4107

Epoch 50/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4090

Epoch 51/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4074

Epoch 52/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4059

Epoch 53/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4044

Epoch 54/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.4030

Epoch 55/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4016

Epoch 56/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.4002

Epoch 57/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3988

Epoch 58/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3975

Epoch 59/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3962

Epoch 60/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3950

Epoch 61/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3938

Epoch 62/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3926

Epoch 63/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3914

Epoch 64/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3903

Epoch 65/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3891

Epoch 66/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3880

Epoch 67/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3869

Epoch 68/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3859

Epoch 69/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3849

Epoch 70/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3839

Epoch 71/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3829

Epoch 72/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3819

Epoch 73/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3810

Epoch 74/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3801

Epoch 75/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3792

Epoch 76/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3783

Epoch 77/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3774

Epoch 78/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3765

Epoch 79/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3757

Epoch 80/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3748

Epoch 81/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3740

Epoch 82/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3732

Epoch 83/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3723

Epoch 84/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3715

Epoch 85/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3708

Epoch 86/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3700

Epoch 87/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3692

Epoch 88/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3685

Epoch 89/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3677

Epoch 90/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3670

Epoch 91/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3663

Epoch 92/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3656

Epoch 93/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3649

Epoch 94/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3642

Epoch 95/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3635

Epoch 96/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3629

Epoch 97/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3622

Epoch 98/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3616

Epoch 99/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3610

Epoch 100/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3603

Epoch 101/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3597

Epoch 102/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3591

Epoch 103/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3585

Epoch 104/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3579

Epoch 105/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3573

Epoch 106/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3567

Epoch 107/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3562

Epoch 108/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3556

Epoch 109/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3550

Epoch 110/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3545

Epoch 111/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3539

Epoch 112/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3534

Epoch 113/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3529

Epoch 114/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3524

Epoch 115/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3519

Epoch 116/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3513

Epoch 117/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3508

Epoch 118/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3503

Epoch 119/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3498

Epoch 120/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3493

Epoch 121/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3488

Epoch 122/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3484

Epoch 123/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3479

Epoch 124/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3474

Epoch 125/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3470

Epoch 126/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3465

Epoch 127/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3461

Epoch 128/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3456

Epoch 129/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3452

Epoch 130/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3447

Epoch 131/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3443

Epoch 132/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3439

Epoch 133/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3435

Epoch 134/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3430

Epoch 135/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3426

Epoch 136/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3422

Epoch 137/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3418

Epoch 138/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3414

Epoch 139/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3411

Epoch 140/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3407

Epoch 141/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3403

Epoch 142/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3399

Epoch 143/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3395

Epoch 144/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3391

Epoch 145/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3387

Epoch 146/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3384

Epoch 147/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3380

Epoch 148/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3376

Epoch 149/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3373

Epoch 150/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3369

Epoch 151/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3366

Epoch 152/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3362

Epoch 153/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3359

Epoch 154/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3355

Epoch 155/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3352

Epoch 156/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3349

Epoch 157/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3346

Epoch 158/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3342

Epoch 159/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3339

Epoch 160/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3336

Epoch 161/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3333

Epoch 162/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3330

Epoch 163/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3327

Epoch 164/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3324

Epoch 165/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3321

Epoch 166/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3318

Epoch 167/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3315

Epoch 168/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3312

Epoch 169/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3309

Epoch 170/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3306

Epoch 171/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3303

Epoch 172/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3301

Epoch 173/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3298

Epoch 174/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3295

Epoch 175/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3292

Epoch 176/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3289

Epoch 177/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3287

Epoch 178/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3284

Epoch 179/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3281

Epoch 180/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3279

Epoch 181/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3276

Epoch 182/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3273

Epoch 183/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3271

Epoch 184/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3268

Epoch 185/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3266

Epoch 186/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3263

Epoch 187/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3261

Epoch 188/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3259

Epoch 189/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3256

Epoch 190/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3254

Epoch 191/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3251

Epoch 192/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3249

Epoch 193/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3247

Epoch 194/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3244

Epoch 195/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3242

Epoch 196/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3240

Epoch 197/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3238

Epoch 198/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3235

Epoch 199/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3233

Epoch 200/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3231

Epoch 201/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3228

Epoch 202/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3226

Epoch 203/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3224

Epoch 204/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3222

Epoch 205/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3220

Epoch 206/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3217

Epoch 207/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3215

Epoch 208/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3213

Epoch 209/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3211

Epoch 210/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3209

Epoch 211/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3207

Epoch 212/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3205

Epoch 213/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3203

Epoch 214/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3201

Epoch 215/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3199

Epoch 216/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3197

Epoch 217/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3195

Epoch 218/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3193

Epoch 219/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3191

Epoch 220/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3190

Epoch 221/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3188

Epoch 222/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3186

Epoch 223/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3184

Epoch 224/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3182

Epoch 225/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3180

Epoch 226/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3179

Epoch 227/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3177

Epoch 228/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3175

Epoch 229/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3173

Epoch 230/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3171

Epoch 231/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3170

Epoch 232/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3168

Epoch 233/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3166

Epoch 234/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3164

Epoch 235/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3163

Epoch 236/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3161

Epoch 237/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3159

Epoch 238/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3158

Epoch 239/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3156

Epoch 240/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 41s 41s/step - FinalLoss: 0.3154

Epoch 241/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3152

Epoch 242/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3151

Epoch 243/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3149

Epoch 244/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3148

Epoch 245/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3146

Epoch 246/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3145

Epoch 247/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3143

Epoch 248/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3141

Epoch 249/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3140

Epoch 250/250

1/1 ━━━━━━━━━━━━━━━━━━━━ 40s 40s/step - FinalLoss: 0.3138

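Perform inference and testing

Having trained the model to a large extent, we now see how it performs on the test set. We calculate the accuracy score to understand the results closely.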
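For reference, the accuracy score is simply the fraction of predictions that match the true labels. The NumPy sketch below uses made-up labels, and the accuracy helper is hypothetical. It flattens the predictions first because the predict method of this example appears to return them as a column of shape (N, 1).

```python
import numpy as np


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    y_true = np.asarray(y_true).reshape(-1)
    y_pred = np.asarray(y_pred).reshape(-1)
    return float(np.mean(y_true == y_pred))


y_true = np.array([7, 2, 1, 0, 4])
y_pred = np.array([[7], [2], [1], [0], [9]])  # column shape, one row per sample
print(f"{accuracy(y_true, y_pred) * 100}%")
```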

preds = model.predict(ops.convert_to_tensor(x_test))

preds = preds.reshape((preds.shape[0], preds.shape[1]))

# accuracy_score expects the true labels first, then the predictions.
results = accuracy_score(y_test, preds)

print(f"Test Accuracy score : {results*100}%")

plt.plot(range(len(history.history["FinalLoss"])), history.history["FinalLoss"])
plt.title("Loss over training")
plt.show()

Test Accuracy score : 97.56%

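Conclusion

This example has demonstrated how the Forward-Forward algorithm works using the TensorFlow and Keras packages. While the investigation results presented by Prof. Hinton in the paper are currently still limited to smaller models and datasets like MNIST and Fashion-MNIST, subsequent results on larger models like LLMs are expected in future papers.

In the paper, Prof. Hinton reports a test error rate of 1.36% with a 2000-unit, 4 hidden-layer, fully-connected network run over 60 epochs (while mentioning that backpropagation takes only 20 epochs to achieve similar performance). Another run with double the learning rate and 40 epochs of training yields a slightly worse error rate of 1.46%.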
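To put the two sets of numbers on the same scale, the test accuracy printed by this example can be converted into an error rate. The short calculation below uses only figures quoted in this document; the variable names are illustrative.

```python
test_accuracy_pct = 97.56           # accuracy reported by this example's run
paper_error_pct = 1.36              # error rate quoted from the paper

example_error_pct = round(100 - test_accuracy_pct, 2)
gap = round(example_error_pct - paper_error_pct, 2)
print(example_error_pct, gap)  # 2.44 1.08
```

The run shown here therefore has a test error of 2.44%, about 1.08 percentage points above the best figure quoted from the paper.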

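The current example does not yield state-of-the-art results. With proper tuning of the learning rate and the model architecture (number of units in the Dense layers, kernel activations, initializations, regularization, etc.), however, the results can be improved to approach those reported in the paper.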