用於影像分類的 MixUp 增強

作者: Sayak Paul

建立日期 2021/03/06

上次修改日期 2023/07/24

描述: 使用 mixup 技術進行資料增強,用於影像分類。

簡介

mixup 是一種與領域無關的資料增強技術,由 Zhang 等人於 mixup: Beyond Empirical Risk Minimization 中提出。其使用以下公式實作:

(請注意,lambda 值是介於 [0, 1] 範圍內的值,並且從 Beta 分佈中採樣。)

該技術的命名相當有系統。我們實際上是在混合特徵及其對應的標籤。在實作方面,它很簡單。神經網路容易記憶損毀的標籤。mixup 透過將不同的特徵彼此組合(標籤也是如此)來放鬆此現象,使得網路不會過度自信於特徵及其標籤之間的關係。

當我們不確定為給定的資料集(例如醫學影像資料集)選擇一組增強轉換時,mixup 特別有用。mixup 可以擴展到各種資料模態,例如電腦視覺、自然語言處理、語音等等。

設定

import os

os.environ["KERAS_BACKEND"] = "tensorflow"

import numpy as np

import keras

import matplotlib.pyplot as plt

from keras import layers

# TF imports related to tf.data preprocessing

from tensorflow import data as tf_data

from tensorflow import image as tf_image

from tensorflow.random import gamma as tf_random_gamma

準備資料集

在本範例中,我們將使用 FashionMNIST 資料集。但是,相同的步驟也可以用於其他分類資料集。

(x_train, y_train), (x_test, y_test) = keras.datasets.fashion_mnist.load_data()

x_train = x_train.astype("float32") / 255.0

x_train = np.reshape(x_train, (-1, 28, 28, 1))

y_train = keras.ops.one_hot(y_train, 10)

x_test = x_test.astype("float32") / 255.0

x_test = np.reshape(x_test, (-1, 28, 28, 1))

y_test = keras.ops.one_hot(y_test, 10)

定義超參數

AUTO = tf_data.AUTOTUNE

BATCH_SIZE = 64

EPOCHS = 10

將資料轉換為 TensorFlow Dataset 物件

# Put aside a few samples to create our validation set

val_samples = 2000

x_val, y_val = x_train[:val_samples], y_train[:val_samples]

new_x_train, new_y_train = x_train[val_samples:], y_train[val_samples:]

train_ds_one = (

tf_data.Dataset.from_tensor_slices((new_x_train, new_y_train))

.shuffle(BATCH_SIZE * 100)

.batch(BATCH_SIZE)

)

train_ds_two = (

tf_data.Dataset.from_tensor_slices((new_x_train, new_y_train))

.shuffle(BATCH_SIZE * 100)

.batch(BATCH_SIZE)

)

# Because we will be mixing up the images and their corresponding labels, we will be

# combining two shuffled datasets from the same training data.

train_ds = tf_data.Dataset.zip((train_ds_one, train_ds_two))

val_ds = tf_data.Dataset.from_tensor_slices((x_val, y_val)).batch(BATCH_SIZE)

test_ds = tf_data.Dataset.from_tensor_slices((x_test, y_test)).batch(BATCH_SIZE)

定義 mixup 技術函式

為了執行 mixup 常式,我們使用來自相同資料集的訓練資料建立新的虛擬資料集,並應用從 Beta 分佈中採樣的介於 [0, 1] 範圍內的 lambda 值 — 例如,new_x = lambda * x1 + (1 - lambda) * x2(其中 x1 和 x2 是影像),並且相同的方程式也適用於標籤。

def sample_beta_distribution(size, concentration_0=0.2, concentration_1=0.2):

gamma_1_sample = tf_random_gamma(shape=[size], alpha=concentration_1)

gamma_2_sample = tf_random_gamma(shape=[size], alpha=concentration_0)

return gamma_1_sample / (gamma_1_sample + gamma_2_sample)

def mix_up(ds_one, ds_two, alpha=0.2):

# Unpack two datasets

images_one, labels_one = ds_one

images_two, labels_two = ds_two

batch_size = keras.ops.shape(images_one)[0]

# Sample lambda and reshape it to do the mixup

l = sample_beta_distribution(batch_size, alpha, alpha)

x_l = keras.ops.reshape(l, (batch_size, 1, 1, 1))

y_l = keras.ops.reshape(l, (batch_size, 1))

# Perform mixup on both images and labels by combining a pair of images/labels

# (one from each dataset) into one image/label

images = images_one * x_l + images_two * (1 - x_l)

labels = labels_one * y_l + labels_two * (1 - y_l)

return (images, labels)

請注意,在此,我們將兩個影像組合起來以建立一個影像。理論上,我們可以組合任意多個影像,但這會增加計算成本。在某些情況下,它也可能無助於提升效能。

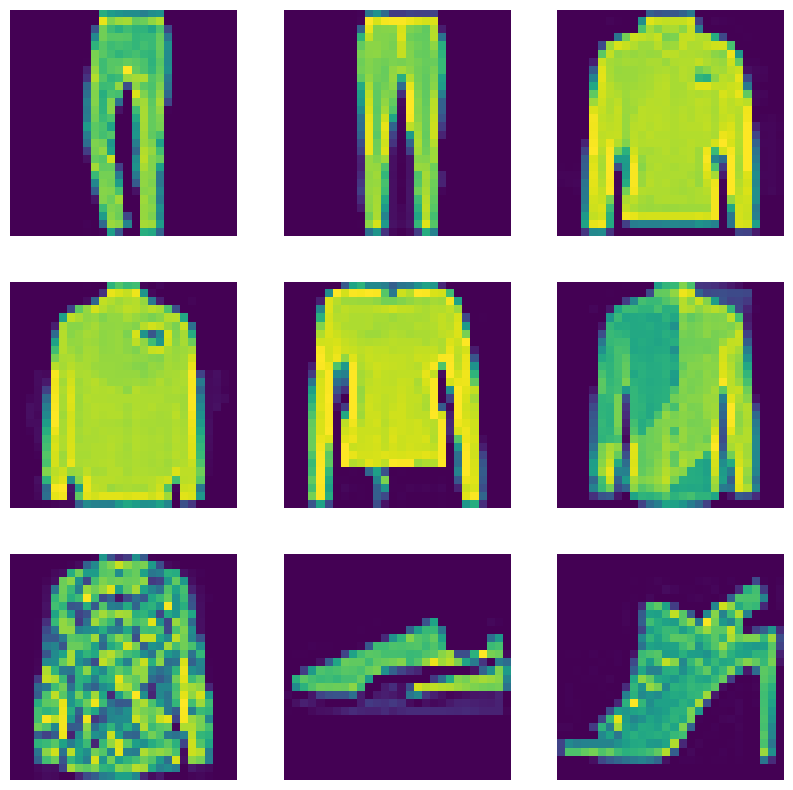

視覺化新的增強資料集

# First create the new dataset using our `mix_up` utility

train_ds_mu = train_ds.map(

lambda ds_one, ds_two: mix_up(ds_one, ds_two, alpha=0.2),

num_parallel_calls=AUTO,

)

# Let's preview 9 samples from the dataset

sample_images, sample_labels = next(iter(train_ds_mu))

plt.figure(figsize=(10, 10))

for i, (image, label) in enumerate(zip(sample_images[:9], sample_labels[:9])):

ax = plt.subplot(3, 3, i + 1)

plt.imshow(image.numpy().squeeze())

print(label.numpy().tolist())

plt.axis("off")

[0.0, 0.9964277148246765, 0.0, 0.0, 0.003572270041331649, 0.0, 0.0, 0.0, 0.0, 0.0]

[0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

[0.0, 0.0, 0.0, 0.0, 0.9794676899909973, 0.02053229510784149, 0.0, 0.0, 0.0, 0.0]

[0.0, 0.0, 0.0, 0.0, 0.9536369442939758, 0.0, 0.0, 0.0, 0.04636305570602417, 0.0]

[0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

[0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.7631776928901672, 0.0, 0.0, 0.23682232201099396]

[0.0, 0.0, 0.045958757400512695, 0.0, 0.0, 0.0, 0.9540412425994873, 0.0, 0.0, 0.0]

[0.0, 0.0, 0.0, 0.0, 2.8015051611873787e-08, 0.0, 0.0, 1.0, 0.0, 0.0]

[0.0, 0.0, 0.0, 0.0003173351287841797, 0.0, 0.9996826648712158, 0.0, 0.0, 0.0, 0.0]

模型建構

def get_training_model():

model = keras.Sequential(

[

layers.Input(shape=(28, 28, 1)),

layers.Conv2D(16, (5, 5), activation="relu"),

layers.MaxPooling2D(pool_size=(2, 2)),

layers.Conv2D(32, (5, 5), activation="relu"),

layers.MaxPooling2D(pool_size=(2, 2)),

layers.Dropout(0.2),

layers.GlobalAveragePooling2D(),

layers.Dense(128, activation="relu"),

layers.Dense(10, activation="softmax"),

]

)

return model

為了便於重現,我們序列化淺層網路的初始隨機權重。

initial_model = get_training_model()

initial_model.save_weights("initial_weights.weights.h5")

1. 使用混合資料集訓練模型

model = get_training_model()

model.load_weights("initial_weights.weights.h5")

model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])

model.fit(train_ds_mu, validation_data=val_ds, epochs=EPOCHS)

_, test_acc = model.evaluate(test_ds)

print("Test accuracy: {:.2f}%".format(test_acc * 100))

Epoch 1/10

62/907 ━[37m━━━━━━━━━━━━━━━━━━━ 2s 3ms/step - accuracy: 0.2518 - loss: 2.2072

WARNING: All log messages before absl::InitializeLog() is called are written to STDERR

I0000 00:00:1699655923.381468 16749 device_compiler.h:187] Compiled cluster using XLA! This line is logged at most once for the lifetime of the process.

907/907 ━━━━━━━━━━━━━━━━━━━━ 13s 9ms/step - accuracy: 0.5335 - loss: 1.4414 - val_accuracy: 0.7635 - val_loss: 0.6678

Epoch 2/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 12s 4ms/step - accuracy: 0.7168 - loss: 0.9688 - val_accuracy: 0.7925 - val_loss: 0.5849

Epoch 3/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 5s 4ms/step - accuracy: 0.7525 - loss: 0.8940 - val_accuracy: 0.8290 - val_loss: 0.5138

Epoch 4/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 4s 3ms/step - accuracy: 0.7742 - loss: 0.8431 - val_accuracy: 0.8360 - val_loss: 0.4726

Epoch 5/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 3ms/step - accuracy: 0.7876 - loss: 0.8095 - val_accuracy: 0.8550 - val_loss: 0.4450

Epoch 6/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 3ms/step - accuracy: 0.8029 - loss: 0.7794 - val_accuracy: 0.8560 - val_loss: 0.4178

Epoch 7/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 2s 3ms/step - accuracy: 0.8039 - loss: 0.7632 - val_accuracy: 0.8600 - val_loss: 0.4056

Epoch 8/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 3ms/step - accuracy: 0.8115 - loss: 0.7465 - val_accuracy: 0.8510 - val_loss: 0.4114

Epoch 9/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 3ms/step - accuracy: 0.8115 - loss: 0.7364 - val_accuracy: 0.8645 - val_loss: 0.3983

Epoch 10/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 3ms/step - accuracy: 0.8182 - loss: 0.7237 - val_accuracy: 0.8630 - val_loss: 0.3735

157/157 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - accuracy: 0.8610 - loss: 0.4030

Test accuracy: 85.82%

2. 在不使用混合資料集的情況下訓練模型

model = get_training_model()

model.load_weights("initial_weights.weights.h5")

model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])

# Notice that we are NOT using the mixed up dataset here

model.fit(train_ds_one, validation_data=val_ds, epochs=EPOCHS)

_, test_acc = model.evaluate(test_ds)

print("Test accuracy: {:.2f}%".format(test_acc * 100))

Epoch 1/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 8s 6ms/step - accuracy: 0.5690 - loss: 1.1928 - val_accuracy: 0.7585 - val_loss: 0.6519

Epoch 2/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 5s 2ms/step - accuracy: 0.7525 - loss: 0.6484 - val_accuracy: 0.7860 - val_loss: 0.5799

Epoch 3/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 2s 2ms/step - accuracy: 0.7895 - loss: 0.5661 - val_accuracy: 0.8205 - val_loss: 0.5122

Epoch 4/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 2ms/step - accuracy: 0.8148 - loss: 0.5126 - val_accuracy: 0.8415 - val_loss: 0.4375

Epoch 5/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 2ms/step - accuracy: 0.8306 - loss: 0.4636 - val_accuracy: 0.8610 - val_loss: 0.3913

Epoch 6/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 2s 2ms/step - accuracy: 0.8433 - loss: 0.4312 - val_accuracy: 0.8680 - val_loss: 0.3734

Epoch 7/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 2ms/step - accuracy: 0.8544 - loss: 0.4072 - val_accuracy: 0.8750 - val_loss: 0.3606

Epoch 8/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 2ms/step - accuracy: 0.8577 - loss: 0.3913 - val_accuracy: 0.8735 - val_loss: 0.3520

Epoch 9/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 2ms/step - accuracy: 0.8645 - loss: 0.3803 - val_accuracy: 0.8725 - val_loss: 0.3536

Epoch 10/10

907/907 ━━━━━━━━━━━━━━━━━━━━ 3s 3ms/step - accuracy: 0.8686 - loss: 0.3597 - val_accuracy: 0.8745 - val_loss: 0.3395

157/157 ━━━━━━━━━━━━━━━━━━━━ 1s 4ms/step - accuracy: 0.8705 - loss: 0.3672

Test accuracy: 86.92%

鼓勵讀者嘗試在不同領域的不同資料集上使用 mixup,並試驗 lambda 參數。強烈建議您查閱原始論文,作者在論文中提出了幾個關於 mixup 的消融研究,展示了它如何改善泛化,以及展示了將兩個以上影像組合起來以建立單個影像的結果。