使用可組合的全卷積網路進行影像分割

作者: Suvaditya Mukherjee

建立日期 2023/06/16

上次修改日期 2023/12/25

描述: 使用全卷積網路進行影像分割。

簡介

以下範例逐步說明如何在 Oxford-IIIT Pets 資料集上實作全卷積網路進行影像分割。該模型由 Long 等人於論文《用於語義分割的全卷積網路》(Fully Convolutional Networks for Semantic Segmentation, 2014)中提出。當談到電腦視覺時,影像分割是最常見和入門的任務之一,我們將影像分類的問題從每個影像一個標籤延伸到逐像素分類問題。在此範例中,我們將組裝上述全卷積分割架構,該架構能夠執行影像分割。該網路延伸了 VGG 的池化層輸出,以執行上採樣並獲得最終結果。從 VGG19 的第 3、4 和 5 個最大池化層輸出的中間輸出被提取出來,並以不同的級別和因數進行上採樣,以獲得與輸出相同形狀的最終輸出,但在每個位置都有每個像素的類別,而不是像素強度值。不同的中間池化層被提取出來並針對不同版本的網路進行處理。FCN 架構有 3 個不同品質的版本。

- FCN-32S

- FCN-16S

- FCN-8S

所有版本的模型都透過迭代處理所使用主幹的連續中間池化層來得出其輸出。可以從下圖中獲得更好的概念。

|

|---|

| 圖 1:組合架構版本 (來源:論文) |

若要更了解影像分割或尋找更多預訓練模型,請隨時導覽至Hugging Face 影像分割模型頁面,或PyImageSearch 部落格上的語義分割

設定匯入

import os

os.environ["KERAS_BACKEND"] = "tensorflow"

import keras

from keras import ops

import tensorflow as tf

import matplotlib.pyplot as plt

import tensorflow_datasets as tfds

import numpy as np

AUTOTUNE = tf.data.AUTOTUNE

設定筆記本變數的設定

我們設定實驗所需的參數。所選的資料集每個影像總共有 4 個類別,關於分割遮罩。我們也在這個儲存格中設定我們的超參數。

混合精度選項在支援的系統中也可用,以減少負載。這會使大多數張量使用 16 位元浮點值而不是 32 位元浮點值,在不會對計算產生不利影響的地方。這表示,在計算期間,TensorFlow 將使用 16 位元浮點張量來提高速度,但會犧牲精度,同時將值儲存為其原始預設 32 位元浮點形式。

NUM_CLASSES = 4

INPUT_HEIGHT = 224

INPUT_WIDTH = 224

LEARNING_RATE = 1e-3

WEIGHT_DECAY = 1e-4

EPOCHS = 20

BATCH_SIZE = 32

MIXED_PRECISION = True

SHUFFLE = True

# Mixed-precision setting

if MIXED_PRECISION:

policy = keras.mixed_precision.Policy("mixed_float16")

keras.mixed_precision.set_global_policy(policy)

INFO:tensorflow:Mixed precision compatibility check (mixed_float16): OK

Your GPU will likely run quickly with dtype policy mixed_float16 as it has compute capability of at least 7.0. Your GPU: Quadro RTX 5000, compute capability 7.5

載入資料集

我們使用Oxford-IIIT Pets 資料集,其中包含總共 7,349 個樣本及其分割遮罩。我們有 37 個類別,每個類別大約有 200 個樣本。我們的訓練和驗證資料集分別有 3,128 和 552 個樣本。除此之外,我們的測試分割共有 3,669 個樣本。

我們設定一個 batch_size 參數,將我們的樣本批次處理在一起,並使用一個 shuffle 參數來混合我們的樣本。

(train_ds, valid_ds, test_ds) = tfds.load(

"oxford_iiit_pet",

split=["train[:85%]", "train[85%:]", "test"],

batch_size=BATCH_SIZE,

shuffle_files=SHUFFLE,

)

解壓縮和預處理資料集

我們定義一個簡單的函式,其中包括對我們的訓練、驗證和測試資料集執行調整大小。我們也在遮罩上執行相同的處理,以確保兩者在形狀和大小方面對齊。

# Image and Mask Pre-processing

def unpack_resize_data(section):

image = section["image"]

segmentation_mask = section["segmentation_mask"]

resize_layer = keras.layers.Resizing(INPUT_HEIGHT, INPUT_WIDTH)

image = resize_layer(image)

segmentation_mask = resize_layer(segmentation_mask)

return image, segmentation_mask

train_ds = train_ds.map(unpack_resize_data, num_parallel_calls=AUTOTUNE)

valid_ds = valid_ds.map(unpack_resize_data, num_parallel_calls=AUTOTUNE)

test_ds = test_ds.map(unpack_resize_data, num_parallel_calls=AUTOTUNE)

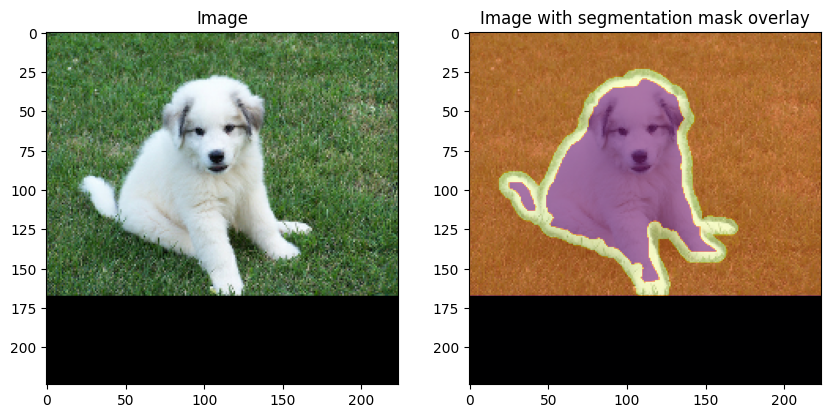

視覺化預處理資料集中的一個隨機樣本

我們視覺化資料集測試分割中的一個隨機樣本的外觀,並在頂部繪製分割遮罩,以查看有效遮罩區域。請注意,我們也對此資料集執行了預處理,這使得影像和遮罩大小相同。

# Select random image and mask. Cast to NumPy array

# for Matplotlib visualization.

images, masks = next(iter(test_ds))

random_idx = keras.random.uniform([], minval=0, maxval=BATCH_SIZE, seed=10)

test_image = images[int(random_idx)].numpy().astype("float")

test_mask = masks[int(random_idx)].numpy().astype("float")

# Overlay segmentation mask on top of image.

fig, ax = plt.subplots(nrows=1, ncols=2, figsize=(10, 5))

ax[0].set_title("Image")

ax[0].imshow(test_image / 255.0)

ax[1].set_title("Image with segmentation mask overlay")

ax[1].imshow(test_image / 255.0)

ax[1].imshow(

test_mask,

cmap="inferno",

alpha=0.6,

)

plt.show()

執行 VGG 特定的預處理

keras.applications.VGG19 需要使用 preprocess_input 函數,該函數會主動執行 Image-net 風格的標準差正規化方案。

def preprocess_data(image, segmentation_mask):

image = keras.applications.vgg19.preprocess_input(image)

return image, segmentation_mask

train_ds = (

train_ds.map(preprocess_data, num_parallel_calls=AUTOTUNE)

.shuffle(buffer_size=1024)

.prefetch(buffer_size=1024)

)

valid_ds = (

valid_ds.map(preprocess_data, num_parallel_calls=AUTOTUNE)

.shuffle(buffer_size=1024)

.prefetch(buffer_size=1024)

)

test_ds = (

test_ds.map(preprocess_data, num_parallel_calls=AUTOTUNE)

.shuffle(buffer_size=1024)

.prefetch(buffer_size=1024)

)

模型定義

全卷積網路 (Fully-Convolutional Network) 擁有簡單的架構,僅由 keras.layers.Conv2D 層、keras.layers.Dense 層和 keras.layers.Dropout 層組成。

|

|---|

| 圖表 2: 通用 FCN 前向傳遞 (來源:論文) |

逐像素預測是透過使用與圖像大小相同的 Softmax 卷積層來實現的,這樣我們就可以進行直接比較。我們可以在網路上找到幾個重要的指標,例如準確度 (Accuracy) 和平均交並比 (Mean-Intersection-over-Union)。

骨幹網路 (VGG-19)

我們使用 VGG-19 網路 作為骨幹網路,因為該論文表明它是此網路最有效的骨幹之一。我們透過使用 keras.models.Model 從網路中提取不同的輸出。 接著,我們在頂部添加層,以建立一個完美模擬圖表 1 的網路。骨幹網路的 keras.layers.Dense 層將根據此處的原始 Caffe 程式碼轉換為 keras.layers.Conv2D 層。所有 3 個網路將共享相同的骨幹網路權重,但會根據它們的擴展而產生不同的結果。我們使骨幹網路不可訓練,以縮短訓練時間。論文中還提到,使網路可訓練並不會產生明顯的效益。

input_layer = keras.Input(shape=(INPUT_HEIGHT, INPUT_WIDTH, 3))

# VGG Model backbone with pre-trained ImageNet weights.

vgg_model = keras.applications.vgg19.VGG19(include_top=True, weights="imagenet")

# Extracting different outputs from same model

fcn_backbone = keras.models.Model(

inputs=vgg_model.layers[1].input,

outputs=[

vgg_model.get_layer(block_name).output

for block_name in ["block3_pool", "block4_pool", "block5_pool"]

],

)

# Setting backbone to be non-trainable

fcn_backbone.trainable = False

x = fcn_backbone(input_layer)

# Converting Dense layers to Conv2D layers

units = [4096, 4096]

dense_convs = []

for filter_idx in range(len(units)):

dense_conv = keras.layers.Conv2D(

filters=units[filter_idx],

kernel_size=(7, 7) if filter_idx == 0 else (1, 1),

strides=(1, 1),

activation="relu",

padding="same",

use_bias=False,

kernel_initializer=keras.initializers.Constant(1.0),,

)

dense_convs.append(dense_conv)

dropout_layer = keras.layers.Dropout(0.5)

dense_convs.append(dropout_layer)

dense_convs = keras.Sequential(dense_convs)

dense_convs.trainable = False

x[-1] = dense_convs(x[-1])

pool3_output, pool4_output, pool5_output = x

FCN-32S

我們擴展最後的輸出,執行 1x1 卷積,並以 32 倍的因子執行 2D 雙線性上採樣,以獲得與輸入大小相同的影像。我們在 keras.layers.Conv2DTranspose 層上使用簡單的 keras.layers.UpSampling2D 層,因為相較於卷積運算,從確定性數學運算中獲得效能優勢。論文中還提到,使上採樣參數可訓練並不會產生效益。論文的原始實驗也使用了上採樣。

# 1x1 convolution to set channels = number of classes

pool5 = keras.layers.Conv2D(

filters=NUM_CLASSES,

kernel_size=(1, 1),

padding="same",

strides=(1, 1),

activation="relu",

)

# Get Softmax outputs for all classes

fcn32s_conv_layer = keras.layers.Conv2D(

filters=NUM_CLASSES,

kernel_size=(1, 1),

activation="softmax",

padding="same",

strides=(1, 1),

)

# Up-sample to original image size

fcn32s_upsampling = keras.layers.UpSampling2D(

size=(32, 32),

data_format=keras.backend.image_data_format(),

interpolation="bilinear",

)

final_fcn32s_pool = pool5(pool5_output)

final_fcn32s_output = fcn32s_conv_layer(final_fcn32s_pool)

final_fcn32s_output = fcn32s_upsampling(final_fcn32s_output)

fcn32s_model = keras.Model(inputs=input_layer, outputs=final_fcn32s_output)

FCN-16S

來自 FCN-32S 的池化輸出被擴展並添加到我們骨幹網路的第 4 層池化輸出中。接著,我們將其上採樣 16 倍,以獲得與輸入大小相同的影像。

# 1x1 convolution to set channels = number of classes

# Followed from the original Caffe implementation

pool4 = keras.layers.Conv2D(

filters=NUM_CLASSES,

kernel_size=(1, 1),

padding="same",

strides=(1, 1),

activation="linear",

kernel_initializer=keras.initializers.Zeros(),

)(pool4_output)

# Intermediate up-sample

pool5 = keras.layers.UpSampling2D(

size=(2, 2),

data_format=keras.backend.image_data_format(),

interpolation="bilinear",

)(final_fcn32s_pool)

# Get Softmax outputs for all classes

fcn16s_conv_layer = keras.layers.Conv2D(

filters=NUM_CLASSES,

kernel_size=(1, 1),

activation="softmax",

padding="same",

strides=(1, 1),

)

# Up-sample to original image size

fcn16s_upsample_layer = keras.layers.UpSampling2D(

size=(16, 16),

data_format=keras.backend.image_data_format(),

interpolation="bilinear",

)

# Add intermediate outputs

final_fcn16s_pool = keras.layers.Add()([pool4, pool5])

final_fcn16s_output = fcn16s_conv_layer(final_fcn16s_pool)

final_fcn16s_output = fcn16s_upsample_layer(final_fcn16s_output)

fcn16s_model = keras.models.Model(inputs=input_layer, outputs=final_fcn16s_output)

FCN-8S

來自 FCN-16S 的池化輸出再次被擴展,並從我們骨幹網路的第 3 層池化輸出中添加。此結果被上採樣 8 倍,以獲得與輸入大小相同的影像。

# 1x1 convolution to set channels = number of classes

# Followed from the original Caffe implementation

pool3 = keras.layers.Conv2D(

filters=NUM_CLASSES,

kernel_size=(1, 1),

padding="same",

strides=(1, 1),

activation="linear",

kernel_initializer=keras.initializers.Zeros(),

)(pool3_output)

# Intermediate up-sample

intermediate_pool_output = keras.layers.UpSampling2D(

size=(2, 2),

data_format=keras.backend.image_data_format(),

interpolation="bilinear",

)(final_fcn16s_pool)

# Get Softmax outputs for all classes

fcn8s_conv_layer = keras.layers.Conv2D(

filters=NUM_CLASSES,

kernel_size=(1, 1),

activation="softmax",

padding="same",

strides=(1, 1),

)

# Up-sample to original image size

fcn8s_upsample_layer = keras.layers.UpSampling2D(

size=(8, 8),

data_format=keras.backend.image_data_format(),

interpolation="bilinear",

)

# Add intermediate outputs

final_fcn8s_pool = keras.layers.Add()([pool3, intermediate_pool_output])

final_fcn8s_output = fcn8s_conv_layer(final_fcn8s_pool)

final_fcn8s_output = fcn8s_upsample_layer(final_fcn8s_output)

fcn8s_model = keras.models.Model(inputs=input_layer, outputs=final_fcn8s_output)

將權重載入骨幹網路

論文和實驗都指出,從骨幹網路中提取最後 2 個全連接 Dense 層的權重,將權重重新塑形以符合我們之前轉換為 keras.layers.Conv2D 的 keras.layers.Dense 層,並將權重設定到其中,可產生更好的結果,並顯著提高 mIOU 效能。

# VGG's last 2 layers

weights1 = vgg_model.get_layer("fc1").get_weights()[0]

weights2 = vgg_model.get_layer("fc2").get_weights()[0]

weights1 = weights1.reshape(7, 7, 512, 4096)

weights2 = weights2.reshape(1, 1, 4096, 4096)

dense_convs.layers[0].set_weights([weights1])

dense_convs.layers[2].set_weights([weights2])

訓練

原始論文談到使用帶動量的 SGD 作為首選的優化器。但實驗中發現,AdamW 在 mIOU 和逐像素準確度方面產生了更好的結果。

FCN-32S

fcn32s_optimizer = keras.optimizers.AdamW(

learning_rate=LEARNING_RATE, weight_decay=WEIGHT_DECAY

)

fcn32s_loss = keras.losses.SparseCategoricalCrossentropy()

# Maintain mIOU and Pixel-wise Accuracy as metrics

fcn32s_model.compile(

optimizer=fcn32s_optimizer,

loss=fcn32s_loss,

metrics=[

keras.metrics.MeanIoU(num_classes=NUM_CLASSES, sparse_y_pred=False),

keras.metrics.SparseCategoricalAccuracy(),

],

)

fcn32s_history = fcn32s_model.fit(train_ds, epochs=EPOCHS, validation_data=valid_ds)

Epoch 1/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 31s 171ms/step - loss: 0.9853 - mean_io_u: 0.3056 - sparse_categorical_accuracy: 0.6242 - val_loss: 0.7911 - val_mean_io_u: 0.4022 - val_sparse_categorical_accuracy: 0.7011

Epoch 2/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 22s 131ms/step - loss: 0.7463 - mean_io_u: 0.3978 - sparse_categorical_accuracy: 0.7100 - val_loss: 0.7162 - val_mean_io_u: 0.3968 - val_sparse_categorical_accuracy: 0.7157

Epoch 3/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 21s 120ms/step - loss: 0.6939 - mean_io_u: 0.4139 - sparse_categorical_accuracy: 0.7255 - val_loss: 0.6714 - val_mean_io_u: 0.4383 - val_sparse_categorical_accuracy: 0.7379

Epoch 4/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 21s 117ms/step - loss: 0.6694 - mean_io_u: 0.4239 - sparse_categorical_accuracy: 0.7339 - val_loss: 0.6715 - val_mean_io_u: 0.4258 - val_sparse_categorical_accuracy: 0.7332

Epoch 5/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 21s 115ms/step - loss: 0.6556 - mean_io_u: 0.4279 - sparse_categorical_accuracy: 0.7382 - val_loss: 0.6271 - val_mean_io_u: 0.4483 - val_sparse_categorical_accuracy: 0.7514

Epoch 6/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 21s 120ms/step - loss: 0.6501 - mean_io_u: 0.4295 - sparse_categorical_accuracy: 0.7394 - val_loss: 0.6390 - val_mean_io_u: 0.4375 - val_sparse_categorical_accuracy: 0.7442

Epoch 7/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 109ms/step - loss: 0.6464 - mean_io_u: 0.4309 - sparse_categorical_accuracy: 0.7402 - val_loss: 0.6143 - val_mean_io_u: 0.4508 - val_sparse_categorical_accuracy: 0.7553

Epoch 8/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 108ms/step - loss: 0.6363 - mean_io_u: 0.4343 - sparse_categorical_accuracy: 0.7444 - val_loss: 0.6143 - val_mean_io_u: 0.4481 - val_sparse_categorical_accuracy: 0.7541

Epoch 9/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 108ms/step - loss: 0.6367 - mean_io_u: 0.4346 - sparse_categorical_accuracy: 0.7445 - val_loss: 0.6222 - val_mean_io_u: 0.4534 - val_sparse_categorical_accuracy: 0.7510

Epoch 10/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 19s 108ms/step - loss: 0.6398 - mean_io_u: 0.4346 - sparse_categorical_accuracy: 0.7426 - val_loss: 0.6123 - val_mean_io_u: 0.4494 - val_sparse_categorical_accuracy: 0.7541

Epoch 11/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 110ms/step - loss: 0.6361 - mean_io_u: 0.4365 - sparse_categorical_accuracy: 0.7439 - val_loss: 0.6310 - val_mean_io_u: 0.4405 - val_sparse_categorical_accuracy: 0.7461

Epoch 12/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 21s 110ms/step - loss: 0.6325 - mean_io_u: 0.4362 - sparse_categorical_accuracy: 0.7454 - val_loss: 0.6155 - val_mean_io_u: 0.4441 - val_sparse_categorical_accuracy: 0.7509

Epoch 13/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 112ms/step - loss: 0.6335 - mean_io_u: 0.4368 - sparse_categorical_accuracy: 0.7452 - val_loss: 0.6153 - val_mean_io_u: 0.4430 - val_sparse_categorical_accuracy: 0.7504

Epoch 14/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 113ms/step - loss: 0.6289 - mean_io_u: 0.4380 - sparse_categorical_accuracy: 0.7466 - val_loss: 0.6357 - val_mean_io_u: 0.4309 - val_sparse_categorical_accuracy: 0.7382

Epoch 15/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 113ms/step - loss: 0.6267 - mean_io_u: 0.4369 - sparse_categorical_accuracy: 0.7474 - val_loss: 0.5974 - val_mean_io_u: 0.4619 - val_sparse_categorical_accuracy: 0.7617

Epoch 16/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 109ms/step - loss: 0.6309 - mean_io_u: 0.4368 - sparse_categorical_accuracy: 0.7458 - val_loss: 0.6071 - val_mean_io_u: 0.4463 - val_sparse_categorical_accuracy: 0.7533

Epoch 17/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 112ms/step - loss: 0.6285 - mean_io_u: 0.4382 - sparse_categorical_accuracy: 0.7465 - val_loss: 0.5979 - val_mean_io_u: 0.4576 - val_sparse_categorical_accuracy: 0.7602

Epoch 18/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 111ms/step - loss: 0.6250 - mean_io_u: 0.4403 - sparse_categorical_accuracy: 0.7479 - val_loss: 0.6121 - val_mean_io_u: 0.4451 - val_sparse_categorical_accuracy: 0.7507

Epoch 19/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 111ms/step - loss: 0.6307 - mean_io_u: 0.4386 - sparse_categorical_accuracy: 0.7454 - val_loss: 0.6010 - val_mean_io_u: 0.4532 - val_sparse_categorical_accuracy: 0.7577

Epoch 20/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 114ms/step - loss: 0.6199 - mean_io_u: 0.4403 - sparse_categorical_accuracy: 0.7505 - val_loss: 0.6180 - val_mean_io_u: 0.4339 - val_sparse_categorical_accuracy: 0.7465

FCN-16S

fcn16s_optimizer = keras.optimizers.AdamW(

learning_rate=LEARNING_RATE, weight_decay=WEIGHT_DECAY

)

fcn16s_loss = keras.losses.SparseCategoricalCrossentropy()

# Maintain mIOU and Pixel-wise Accuracy as metrics

fcn16s_model.compile(

optimizer=fcn16s_optimizer,

loss=fcn16s_loss,

metrics=[

keras.metrics.MeanIoU(num_classes=NUM_CLASSES, sparse_y_pred=False),

keras.metrics.SparseCategoricalAccuracy(),

],

)

fcn16s_history = fcn16s_model.fit(train_ds, epochs=EPOCHS, validation_data=valid_ds)

Epoch 1/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 23s 127ms/step - loss: 6.4519 - mean_io_u_1: 0.3101 - sparse_categorical_accuracy: 0.5649 - val_loss: 5.7052 - val_mean_io_u_1: 0.3842 - val_sparse_categorical_accuracy: 0.6057

Epoch 2/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 19s 110ms/step - loss: 5.2670 - mean_io_u_1: 0.3936 - sparse_categorical_accuracy: 0.6339 - val_loss: 5.8929 - val_mean_io_u_1: 0.3864 - val_sparse_categorical_accuracy: 0.5940

Epoch 3/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 111ms/step - loss: 5.2376 - mean_io_u_1: 0.3945 - sparse_categorical_accuracy: 0.6366 - val_loss: 5.6404 - val_mean_io_u_1: 0.3889 - val_sparse_categorical_accuracy: 0.6079

Epoch 4/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 21s 113ms/step - loss: 5.3014 - mean_io_u_1: 0.3924 - sparse_categorical_accuracy: 0.6323 - val_loss: 5.6516 - val_mean_io_u_1: 0.3874 - val_sparse_categorical_accuracy: 0.6094

Epoch 5/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 112ms/step - loss: 5.3135 - mean_io_u_1: 0.3918 - sparse_categorical_accuracy: 0.6323 - val_loss: 5.6588 - val_mean_io_u_1: 0.3903 - val_sparse_categorical_accuracy: 0.6084

Epoch 6/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 108ms/step - loss: 5.2401 - mean_io_u_1: 0.3938 - sparse_categorical_accuracy: 0.6357 - val_loss: 5.6463 - val_mean_io_u_1: 0.3868 - val_sparse_categorical_accuracy: 0.6097

Epoch 7/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 109ms/step - loss: 5.2277 - mean_io_u_1: 0.3921 - sparse_categorical_accuracy: 0.6371 - val_loss: 5.6272 - val_mean_io_u_1: 0.3796 - val_sparse_categorical_accuracy: 0.6136

Epoch 8/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 112ms/step - loss: 5.2479 - mean_io_u_1: 0.3910 - sparse_categorical_accuracy: 0.6360 - val_loss: 5.6303 - val_mean_io_u_1: 0.3823 - val_sparse_categorical_accuracy: 0.6108

Epoch 9/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 21s 112ms/step - loss: 5.1940 - mean_io_u_1: 0.3913 - sparse_categorical_accuracy: 0.6388 - val_loss: 5.8818 - val_mean_io_u_1: 0.3848 - val_sparse_categorical_accuracy: 0.5912

Epoch 10/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 111ms/step - loss: 5.2457 - mean_io_u_1: 0.3898 - sparse_categorical_accuracy: 0.6358 - val_loss: 5.6423 - val_mean_io_u_1: 0.3880 - val_sparse_categorical_accuracy: 0.6087

Epoch 11/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 110ms/step - loss: 5.1808 - mean_io_u_1: 0.3905 - sparse_categorical_accuracy: 0.6400 - val_loss: 5.6175 - val_mean_io_u_1: 0.3834 - val_sparse_categorical_accuracy: 0.6090

Epoch 12/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 112ms/step - loss: 5.2730 - mean_io_u_1: 0.3907 - sparse_categorical_accuracy: 0.6341 - val_loss: 5.6322 - val_mean_io_u_1: 0.3878 - val_sparse_categorical_accuracy: 0.6109

Epoch 13/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 109ms/step - loss: 5.2501 - mean_io_u_1: 0.3904 - sparse_categorical_accuracy: 0.6359 - val_loss: 5.8711 - val_mean_io_u_1: 0.3859 - val_sparse_categorical_accuracy: 0.5950

Epoch 14/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 107ms/step - loss: 5.2407 - mean_io_u_1: 0.3926 - sparse_categorical_accuracy: 0.6362 - val_loss: 5.6387 - val_mean_io_u_1: 0.3805 - val_sparse_categorical_accuracy: 0.6122

Epoch 15/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 108ms/step - loss: 5.2280 - mean_io_u_1: 0.3909 - sparse_categorical_accuracy: 0.6370 - val_loss: 5.6382 - val_mean_io_u_1: 0.3837 - val_sparse_categorical_accuracy: 0.6112

Epoch 16/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 108ms/step - loss: 5.2232 - mean_io_u_1: 0.3899 - sparse_categorical_accuracy: 0.6369 - val_loss: 5.6285 - val_mean_io_u_1: 0.3818 - val_sparse_categorical_accuracy: 0.6101

Epoch 17/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 107ms/step - loss: 1.4671 - mean_io_u_1: 0.5928 - sparse_categorical_accuracy: 0.8210 - val_loss: 0.7661 - val_mean_io_u_1: 0.6455 - val_sparse_categorical_accuracy: 0.8504

Epoch 18/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 110ms/step - loss: 0.6795 - mean_io_u_1: 0.6508 - sparse_categorical_accuracy: 0.8664 - val_loss: 0.6913 - val_mean_io_u_1: 0.6490 - val_sparse_categorical_accuracy: 0.8562

Epoch 19/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 110ms/step - loss: 0.6498 - mean_io_u_1: 0.6530 - sparse_categorical_accuracy: 0.8663 - val_loss: 0.6834 - val_mean_io_u_1: 0.6559 - val_sparse_categorical_accuracy: 0.8577

Epoch 20/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 110ms/step - loss: 0.6305 - mean_io_u_1: 0.6563 - sparse_categorical_accuracy: 0.8681 - val_loss: 0.6529 - val_mean_io_u_1: 0.6575 - val_sparse_categorical_accuracy: 0.8657

FCN-8S

fcn8s_optimizer = keras.optimizers.AdamW(

learning_rate=LEARNING_RATE, weight_decay=WEIGHT_DECAY

)

fcn8s_loss = keras.losses.SparseCategoricalCrossentropy()

# Maintain mIOU and Pixel-wise Accuracy as metrics

fcn8s_model.compile(

optimizer=fcn8s_optimizer,

loss=fcn8s_loss,

metrics=[

keras.metrics.MeanIoU(num_classes=NUM_CLASSES, sparse_y_pred=False),

keras.metrics.SparseCategoricalAccuracy(),

],

)

fcn8s_history = fcn8s_model.fit(train_ds, epochs=EPOCHS, validation_data=valid_ds)

Epoch 1/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 24s 125ms/step - loss: 8.4168 - mean_io_u_2: 0.3116 - sparse_categorical_accuracy: 0.4237 - val_loss: 7.6113 - val_mean_io_u_2: 0.3540 - val_sparse_categorical_accuracy: 0.4682

Epoch 2/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 110ms/step - loss: 8.1030 - mean_io_u_2: 0.3423 - sparse_categorical_accuracy: 0.4401 - val_loss: 7.7038 - val_mean_io_u_2: 0.3335 - val_sparse_categorical_accuracy: 0.4481

Epoch 3/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 110ms/step - loss: 8.0868 - mean_io_u_2: 0.3433 - sparse_categorical_accuracy: 0.4408 - val_loss: 7.5839 - val_mean_io_u_2: 0.3518 - val_sparse_categorical_accuracy: 0.4722

Epoch 4/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 21s 111ms/step - loss: 8.1508 - mean_io_u_2: 0.3414 - sparse_categorical_accuracy: 0.4365 - val_loss: 7.2391 - val_mean_io_u_2: 0.3519 - val_sparse_categorical_accuracy: 0.4805

Epoch 5/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 112ms/step - loss: 8.1621 - mean_io_u_2: 0.3440 - sparse_categorical_accuracy: 0.4361 - val_loss: 7.2805 - val_mean_io_u_2: 0.3474 - val_sparse_categorical_accuracy: 0.4816

Epoch 6/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 110ms/step - loss: 8.1470 - mean_io_u_2: 0.3412 - sparse_categorical_accuracy: 0.4360 - val_loss: 7.5605 - val_mean_io_u_2: 0.3543 - val_sparse_categorical_accuracy: 0.4736

Epoch 7/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 110ms/step - loss: 8.1464 - mean_io_u_2: 0.3430 - sparse_categorical_accuracy: 0.4368 - val_loss: 7.5442 - val_mean_io_u_2: 0.3542 - val_sparse_categorical_accuracy: 0.4702

Epoch 8/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 108ms/step - loss: 8.0812 - mean_io_u_2: 0.3463 - sparse_categorical_accuracy: 0.4403 - val_loss: 7.5565 - val_mean_io_u_2: 0.3471 - val_sparse_categorical_accuracy: 0.4614

Epoch 9/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 109ms/step - loss: 8.0441 - mean_io_u_2: 0.3463 - sparse_categorical_accuracy: 0.4420 - val_loss: 7.5563 - val_mean_io_u_2: 0.3522 - val_sparse_categorical_accuracy: 0.4734

Epoch 10/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 110ms/step - loss: 8.1385 - mean_io_u_2: 0.3432 - sparse_categorical_accuracy: 0.4363 - val_loss: 7.5236 - val_mean_io_u_2: 0.3506 - val_sparse_categorical_accuracy: 0.4660

Epoch 11/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 111ms/step - loss: 8.1114 - mean_io_u_2: 0.3447 - sparse_categorical_accuracy: 0.4381 - val_loss: 7.2068 - val_mean_io_u_2: 0.3518 - val_sparse_categorical_accuracy: 0.4808

Epoch 12/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 107ms/step - loss: 8.0777 - mean_io_u_2: 0.3451 - sparse_categorical_accuracy: 0.4392 - val_loss: 7.2252 - val_mean_io_u_2: 0.3497 - val_sparse_categorical_accuracy: 0.4815

Epoch 13/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 21s 110ms/step - loss: 8.1355 - mean_io_u_2: 0.3446 - sparse_categorical_accuracy: 0.4366 - val_loss: 7.5587 - val_mean_io_u_2: 0.3500 - val_sparse_categorical_accuracy: 0.4671

Epoch 14/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 20s 107ms/step - loss: 8.1828 - mean_io_u_2: 0.3410 - sparse_categorical_accuracy: 0.4330 - val_loss: 7.2464 - val_mean_io_u_2: 0.3557 - val_sparse_categorical_accuracy: 0.4927

Epoch 15/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 108ms/step - loss: 8.1845 - mean_io_u_2: 0.3432 - sparse_categorical_accuracy: 0.4330 - val_loss: 7.2032 - val_mean_io_u_2: 0.3506 - val_sparse_categorical_accuracy: 0.4805

Epoch 16/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 21s 109ms/step - loss: 8.1183 - mean_io_u_2: 0.3449 - sparse_categorical_accuracy: 0.4374 - val_loss: 7.6210 - val_mean_io_u_2: 0.3460 - val_sparse_categorical_accuracy: 0.4751

Epoch 17/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 21s 111ms/step - loss: 8.1766 - mean_io_u_2: 0.3429 - sparse_categorical_accuracy: 0.4329 - val_loss: 7.5361 - val_mean_io_u_2: 0.3489 - val_sparse_categorical_accuracy: 0.4639

Epoch 18/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 109ms/step - loss: 8.0453 - mean_io_u_2: 0.3442 - sparse_categorical_accuracy: 0.4404 - val_loss: 7.1767 - val_mean_io_u_2: 0.3549 - val_sparse_categorical_accuracy: 0.4839

Epoch 19/20

Corrupt JPEG data: premature end of data segment

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

98/98 [==============================] - 20s 109ms/step - loss: 8.0856 - mean_io_u_2: 0.3449 - sparse_categorical_accuracy: 0.4390 - val_loss: 7.1724 - val_mean_io_u_2: 0.3574 - val_sparse_categorical_accuracy: 0.4878

Epoch 20/20

Corrupt JPEG data: 240 extraneous bytes before marker 0xd9

Corrupt JPEG data: premature end of data segment

98/98 [==============================] - 21s 109ms/step - loss: 8.1378 - mean_io_u_2: 0.3445 - sparse_categorical_accuracy: 0.4358 - val_loss: 7.5449 - val_mean_io_u_2: 0.3521 - val_sparse_categorical_accuracy: 0.4681

視覺化

繪製訓練運行的指標

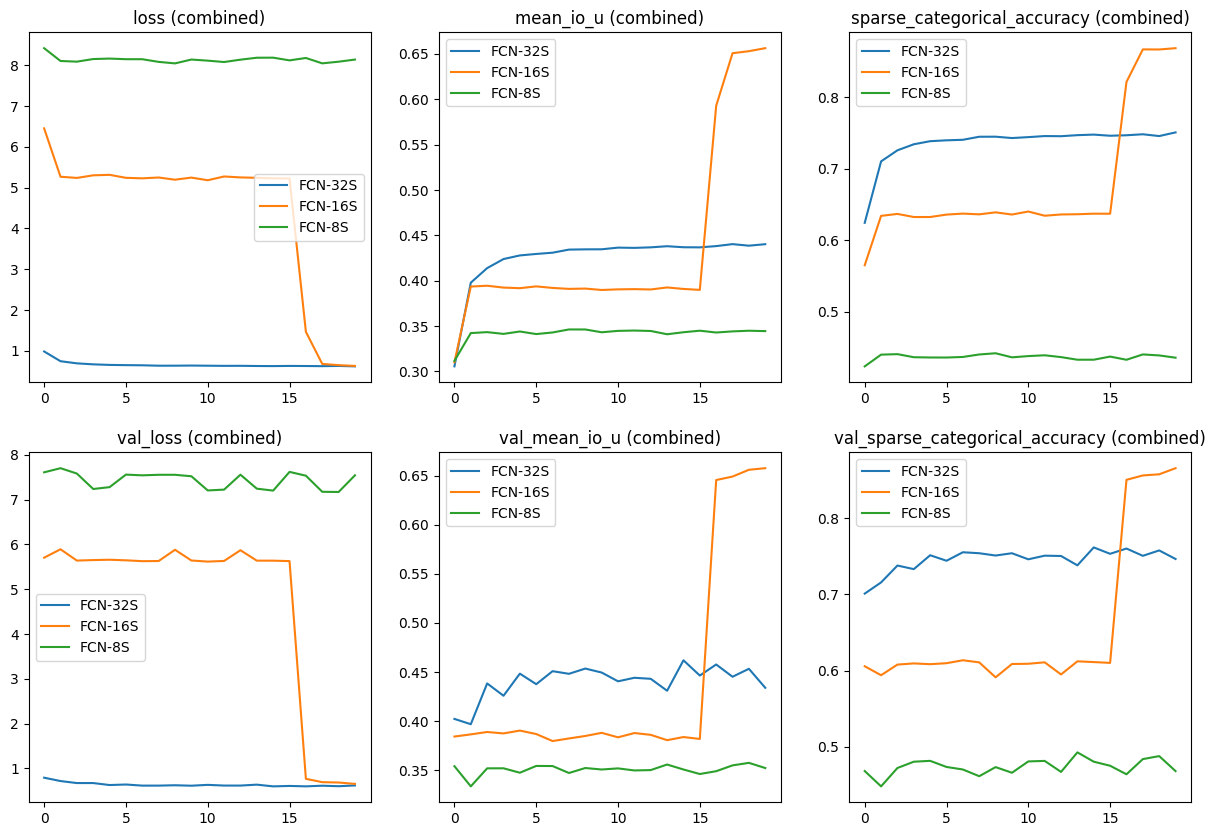

我們透過追蹤準確度、損失和平均 IoU 的訓練和驗證指標,對所有 3 個版本的模型進行比較研究。

total_plots = len(fcn32s_history.history)

cols = total_plots // 2

rows = total_plots // cols

if total_plots % cols != 0:

rows += 1

# Set all history dictionary objects

fcn32s_dict = fcn32s_history.history

fcn16s_dict = fcn16s_history.history

fcn8s_dict = fcn8s_history.history

pos = range(1, total_plots + 1)

plt.figure(figsize=(15, 10))

for i, ((key_32s, value_32s), (key_16s, value_16s), (key_8s, value_8s)) in enumerate(

zip(fcn32s_dict.items(), fcn16s_dict.items(), fcn8s_dict.items())

):

plt.subplot(rows, cols, pos[i])

plt.plot(range(len(value_32s)), value_32s)

plt.plot(range(len(value_16s)), value_16s)

plt.plot(range(len(value_8s)), value_8s)

plt.title(str(key_32s) + " (combined)")

plt.legend(["FCN-32S", "FCN-16S", "FCN-8S"])

plt.show()

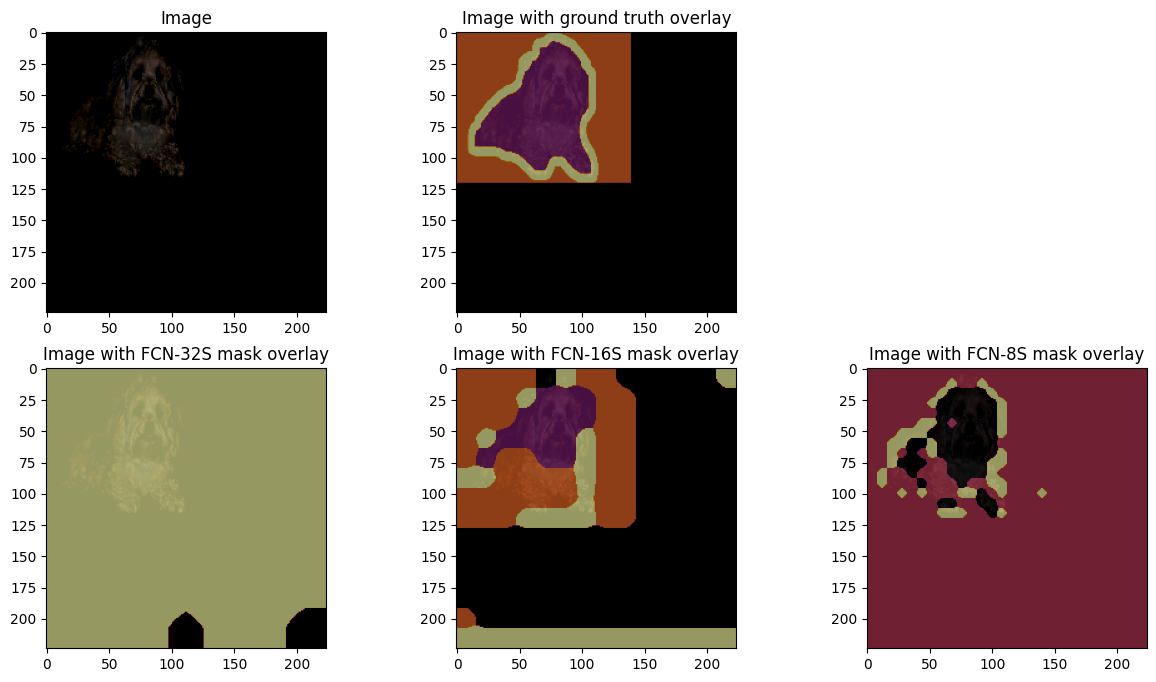

視覺化預測的分割遮罩

為了理解結果並更好地查看它們,我們從測試資料集中隨機選擇一個影像,並對其執行推論,以查看每個模型產生的遮罩。注意:為了獲得更好的結果,必須對模型進行更高 epoch 數的訓練。

images, masks = next(iter(test_ds))

random_idx = keras.random.uniform([], minval=0, maxval=BATCH_SIZE,seed=10)

# Get random test image and mask

test_image = images[int(random_idx)].numpy().astype("float")

test_mask = masks[int(random_idx)].numpy().astype("float")

pred_image = ops.expand_dims(test_image, axis=0)

pred_image = keras.applications.vgg19.preprocess_input(pred_image)

# Perform inference on FCN-32S

pred_mask_32s = fcn32s_model.predict(pred_image, verbose=0).astype("float")

pred_mask_32s = np.argmax(pred_mask_32s, axis=-1)

pred_mask_32s = pred_mask_32s[0, ...]

# Perform inference on FCN-16S

pred_mask_16s = fcn16s_model.predict(pred_image, verbose=0).astype("float")

pred_mask_16s = np.argmax(pred_mask_16s, axis=-1)

pred_mask_16s = pred_mask_16s[0, ...]

# Perform inference on FCN-8S

pred_mask_8s = fcn8s_model.predict(pred_image, verbose=0).astype("float")

pred_mask_8s = np.argmax(pred_mask_8s, axis=-1)

pred_mask_8s = pred_mask_8s[0, ...]

# Plot all results

fig, ax = plt.subplots(nrows=2, ncols=3, figsize=(15, 8))

fig.delaxes(ax[0, 2])

ax[0, 0].set_title("Image")

ax[0, 0].imshow(test_image / 255.0)

ax[0, 1].set_title("Image with ground truth overlay")

ax[0, 1].imshow(test_image / 255.0)

ax[0, 1].imshow(

test_mask,

cmap="inferno",

alpha=0.6,

)

ax[1, 0].set_title("Image with FCN-32S mask overlay")

ax[1, 0].imshow(test_image / 255.0)

ax[1, 0].imshow(pred_mask_32s, cmap="inferno", alpha=0.6)

ax[1, 1].set_title("Image with FCN-16S mask overlay")

ax[1, 1].imshow(test_image / 255.0)

ax[1, 1].imshow(pred_mask_16s, cmap="inferno", alpha=0.6)

ax[1, 2].set_title("Image with FCN-8S mask overlay")

ax[1, 2].imshow(test_image / 255.0)

ax[1, 2].imshow(pred_mask_8s, cmap="inferno", alpha=0.6)

plt.show()

WARNING:matplotlib.image:Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

WARNING:matplotlib.image:Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

WARNING:matplotlib.image:Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

WARNING:matplotlib.image:Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

WARNING:matplotlib.image:Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

結論

全卷積網路是一個非常簡單的網路,在不同基準的影像分割任務中產生了強勁的結果。隨著 注意力機制等更好的機制出現,如 SegFormer 和 DeTR 中使用的,此模型可以作為在未知資料上快速迭代並找到此任務基準的一種方法。

誌謝

我感謝 Aritra Roy Gosthipaty、Ayush Thakur 和 Ritwik Raha 對該範例進行初步審查。我也感謝 Google 開發者專家計畫。